What Is A/B Testing? The Complete 2025 Guide

A/B testing is a must for any growth-driven organization. Whether you’re refining a landing page or optimizing a CTA, it provides the data you need to make smart, scalable changes that drive conversions.

A/B testing is part of a larger conversion rate optimization strategy, where you collect qualitative and quantitative data to improve visitor actions across the customer lifecycle. Understanding A/B testing fundamentals reduces optimization guesswork so you can make data-driven decisions that improve performance.

Knowing what to test, how to set up reliable experiments, and how to interpret your results will transform you into a more effective CRO practitioner.

What Is A/B Testing?

A/B testing is a research method that compares two different versions of a web page, app, or other digital marketing asset to determine which one performs better against a specific goal. “A” refers to the original version (the control), while “B” is the variation designed to challenge it. Website visitors are randomly split between the two versions, and their behavior reveals which is more effective for meeting your target metric.

You can run an A/B test on different pieces of your website or app, including call-to-action buttons, messaging, page headlines, and placement for things like forms, graphics, or text elements.

A/B testing is helpful because it provides valuable insights into what improves marketing performance and what doesn’t. It gives you data to support decision-making, whether you’re launching a new campaign or optimizing an existing one.

How Does A/B Testing Work?

A/B testing splits your audience to compare two versions of a page or element. By tracking user behavior, you can determine which version drives better results based on metrics like clicks, sign-ups, or purchases.

While a classic A/B test compares two variations, you can use different types of tests, statistical analyses, and metrics to measure the specific outcomes that matter to your goals.
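One common way to implement the random split is to hash a visitor identifier, so each visitor lands in the same version on every visit. The sketch below is illustrative only: `assign_variant`, the visitor IDs, and the experiment name are hypothetical, and testing platforms may bucket visitors differently.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant with near-equal odds."""
    # Hashing experiment + visitor keeps a visitor in the same variant on
    # every visit, while different experiments get independent splits.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]

# Hypothetical traffic: count how 10,000 made-up visitors are split.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-headline")] += 1

print(counts)
```

Because the assignment depends only on the two strings, no lookup table is needed to keep a returning visitor in the same group.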

What’s the Difference Between Bayesian vs. Frequentist Statistical Approaches?

A/B testing often relies on one of two statistical models: Bayesian or frequentist. Bayesian analysis builds on prior knowledge and updates the probability of an outcome as new data comes in. Frequentist analysis assumes no prior belief and focuses on long-run probabilities based solely on current data.

Neither approach is inherently better or faster than the other—they’re simply different ways to interpret results. Many modern A/B testing platforms allow you to choose one or the other or blend both.
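To make the contrast concrete, here is a minimal sketch that analyzes the same made-up results both ways. It assumes a pooled two-proportion z-test for the frequentist side and uniform Beta(1, 1) priors for the Bayesian side. Those are common textbook choices, not necessarily what any given platform uses, and the conversion counts are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist

def frequentist_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def bayesian_prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each rate is Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical data: 200 of 5,000 convert on A, 250 of 5,000 on B.
p_value = frequentist_p_value(200, 5_000, 250, 5_000)
prob_b_better = bayesian_prob_b_beats_a(200, 5_000, 250, 5_000)
print(f"p-value: {p_value:.4f}")
print(f"P(B beats A): {prob_b_better:.3f}")
```

The two numbers answer different questions: the p-value describes how surprising the data would be if there were no real difference, while the posterior probability states how likely it is that B is better given the data and the prior.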

Why Should You A/B Test?

If you don’t test, you don’t know. And guessing in business rarely leads to sustainable growth.

Without a structured approach to experimentation, spikes in success become difficult to replicate. Even if you guess correctly half the time, growth stalls when you don’t understand why something worked or how to repeat it.

A/B testing removes the guesswork. It gives you data-backed clarity to make smart, incremental changes that improve performance. This is especially valuable for small businesses and agencies that want to avoid high-stakes redesigns based on hunches.

A/B testing helps you:

- Increase your ROI: Make changes that improve conversion rates, lower acquisition costs, and enhance the customer experience.

- Solve your customers’ pain points: Identify what matters most to your customers so you can provide more targeted solutions.

- Create a conversion-focused website: Use tested messaging and CTAs to reduce bounce rates and turn visitors into customers.

- Optimize across user touchpoints: Build strong marketing strategies across print and digital assets with winning designs and headlines.

- Try new ideas with little risk: Test new theories and marketing campaign ideas with minimal investment before committing.

What Are the Different Types of A/B Tests?

There are several different types of A/B tests. The best option for you will depend on the outcome you’re trying to achieve.

A/A Testing

An A/A test compares two identical versions of an element against each other. These tests are used for quality control to establish baselines and ensure the accuracy of your A/B testing software. Since both versions are the same, there should be no difference in their performance.

A/A testing can also help you determine the appropriate sample size for your A/B testing and benchmark the performance of your campaigns and funnels. It’s best to test a page with high traffic where visitors can sign up or buy something. This is why teams often test home pages using A/A experiments.
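A small simulation can show what “no difference” looks like in practice. The sketch below assumes a 10% conversion rate, 1,000 visitors per arm, and a two-proportion z-test at a 0.05 threshold. All of these values and function names are illustrative assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both arms
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa_tests(rate=0.10, visitors_per_arm=1_000, runs=500,
                      alpha=0.05, seed=1):
    """Share of simulated A/A tests that look 'significant' by chance."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        # Both arms share the same true conversion rate.
        conv_a = sum(rng.random() < rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < rate for _ in range(visitors_per_arm))
        if p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < alpha:
            false_positives += 1
    return false_positives / runs

aa_false_positive_rate = simulate_aa_tests()
print(f"A/A tests flagged as significant: {aa_false_positive_rate:.1%}")
```

With a 0.05 threshold, a share of flagged A/A tests near 5% is expected from chance alone. A much higher share in a real A/A test would suggest a problem with the setup or tracking.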

A/B/n Testing

A/B/n tests compare more than two variations simultaneously. “N” represents the total number of variants and is three or greater. Version A serves as the control, while B, C, D, and so on, represent different variations.

These tests are useful when you have multiple ideas you want to test at once. They can save time and help you get the highest-converting page up quickly. However, they require more traffic to reach statistical significance.
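Part of the extra traffic requirement comes from comparing several variants against the control at once, which raises the chance that at least one comparison looks significant by luck. The sketch below shows that effect and one conservative remedy, the Bonferroni correction. The correction is an assumption added here for illustration, not a method named in this guide, and it treats the comparisons as independent.

```python
def familywise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons

def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / comparisons

# Hypothetical A/B/n test: control plus 1 to 4 challengers.
for challengers in range(1, 5):
    fwer = familywise_error_rate(0.05, challengers)
    adjusted = bonferroni_alpha(0.05, challengers)
    print(f"{challengers} challenger(s): "
          f"uncorrected risk {fwer:.1%}, adjusted threshold {adjusted:.4f}")
```

A stricter threshold per comparison, combined with traffic being divided among more variants, is why each added variant lengthens the test.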

Multivariate Testing (MVT)

While A/B tests change one element at a time, multivariate tests change many elements simultaneously to see how different combinations perform together. For example, you might test different headlines, button colors, and images all in one test to find the best-performing combination.
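The number of combinations in a multivariate test grows quickly, which is worth checking before committing traffic to it. This sketch enumerates every combination for a hypothetical set of elements. The headlines, colors, and image names are made up.

```python
from itertools import product

# Hypothetical elements and the options to test for each one.
elements = {
    "headline": ["Save time today", "Work smarter"],
    "button_color": ["green", "orange"],
    "image": ["team-photo", "product-shot", "illustration"],
}

# Every combination of one option per element (2 x 2 x 3 = 12 variations).
combinations = [
    dict(zip(elements, options)) for options in product(*elements.values())
]

print(f"{len(combinations)} combinations to test")
for combo in combinations[:3]:
    print(combo)
```

Each combination needs its own share of traffic, so adding one more option to any element multiplies the number of visitors required.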

Split URL Testing

Instead of testing two versions of an element on the same page, split URL testing compares totally different pages hosted at different URLs. This is helpful if you’re testing major redesigns or entirely different page concepts.

One benefit of split URL testing is that it can detect big performance differences more easily, which means you might reach statistical significance faster, even without tons of traffic. It’s also generally easier to interpret the results, since one URL will be the clear “winner.”

Multipage Testing

Also called funnel testing, this test considers changes across multiple connected pages, like those in a checkout flow. It’s useful for seeing how edits to one or more pages in a sequence affect the overall conversion rate through the entire funnel.

There are two ways to perform a multipage test:

- You can create new variants for each page of your funnel.

- You can change specific elements within the funnel and see how they affect other parts of your funnel.
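Because funnel steps multiply, a gain on one page can be partly offset by a loss on a later one. The sketch below compares overall conversion for two hypothetical funnels. The step names and rates are invented for illustration.

```python
from math import prod

def funnel_conversion(step_rates):
    """Overall conversion is the product of each step's continuation rate."""
    return prod(step_rates)

# Hypothetical continuation rates: cart -> shipping -> payment.
control = [0.40, 0.50, 0.60]
variant = [0.48, 0.50, 0.55]  # first step improves, last step slips

control_rate = funnel_conversion(control)
variant_rate = funnel_conversion(variant)
print(f"Control funnel: {control_rate:.1%}")
print(f"Variant funnel: {variant_rate:.1%}")
```

Measuring the whole funnel this way avoids declaring a winner based on a single page when the effect shifts elsewhere in the sequence.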

How to Conduct an A/B Test

As with any experiment, running a successful A/B test requires a step-by-step approach. By following a consistent process, you can extract meaningful insights from your results. Here’s how to get started.

Step 1: Collect Data on Your Current Performance

Before testing anything, analyze your current performance using tools like Google Analytics. Look at the conversion goals for each page and use quantitative and qualitative tools to understand what’s happening and why.

Questions to Ask Yourself Before You A/B Test

To analyze your data, ask questions like:

- What are the top-performing pages, and could they perform even better?

- Where are users dropping off in the funnel?

- Which pages get traffic but have high bounce rates? What changes would encourage people to stay longer?

Apply the 80-20 rule: look for the small share of changes (roughly 20%) that could drive most of the results (roughly 80%). Pair quantitative data (e.g., traffic, bounce rate, conversion rate) with qualitative insights (e.g., user feedback, survey responses) to form a complete picture of where to start.
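To answer the drop-off question with numbers, you can compare visitor counts between consecutive funnel steps. This is a minimal sketch with hypothetical step names and counts. In practice the figures would come from your analytics tool.

```python
def largest_drop_off(funnel):
    """Return (from_step, to_step, drop_rate) for the steepest drop.

    `funnel` is an ordered list of (step_name, visitors) pairs.
    """
    worst = None
    for (name_a, count_a), (name_b, count_b) in zip(funnel, funnel[1:]):
        drop = 1 - count_b / count_a
        if worst is None or drop > worst[2]:
            worst = (name_a, name_b, drop)
    return worst

# Hypothetical visitor counts per step.
funnel = [
    ("landing", 10_000),
    ("product", 4_000),
    ("cart", 1_200),
    ("checkout", 900),
    ("purchase", 450),
]

from_step, to_step, drop = largest_drop_off(funnel)
print(f"Biggest drop-off: {from_step} -> {to_step} ({drop:.0%} leave)")
```

The step with the steepest drop is a reasonable first candidate for a test, once qualitative research suggests why visitors leave there.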

Step 2: Set a Clear Goal

Once you’ve done your research, you need to define a clear, measurable A/B testing goal. Do you want more clicks on a CTA, or are you looking to increase email sign-ups? Whatever it is, make sure it’s specific.

Define your goal with these steps:

- Start with your business objective: Figure out what you want to achieve. For example, if you’re a for-profit organization, this may be revenue growth.

- Find goals that align with business objectives: Identify milestones to help you achieve the business objective. For example, a news outlet that wants to make more money could aim to have its marketing team increase newsletter sign-up rates.

- Choose KPIs and metrics: Define exactly how you’ll measure success, such as the number of new sign-ups or percentage increase in conversion rate.

Step 3: Formulate a Hypothesis

With your goal in place, it’s time to generate a hypothesis for your A/B test. This gives your test direction by predicting what change will improve performance and why.

If you aren’t sure what to test, you can use a free hypothesis-generating tool like Convert’s Hypothesis Generator. If you come up with multiple hypotheses for the same observation, the next step is to prioritize them.

Prioritization in A/B Testing

When you have multiple hypotheses you want to test, prioritization in A/B testing can help you decide where to start.

Consider prioritization frameworks like:

- PIE: The PIE model scores your hypothesis based on potential, importance, and ease.

- ICE: ICE looks at impact, confidence, and ease.

- PXL, RICE, or KANO: These models look at more attributes and are a bit more complex.

You can create your own A/B testing prioritization model that’s unique to your organization or use tools like Convert to rank your hypotheses using frameworks like PIE or ICE.
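As a rough illustration of how a scoring framework ranks ideas, the sketch below averages PIE ratings on a 1-10 scale. The hypotheses and scores are invented, and averaging is one common convention. Teams weight or combine the factors differently.

```python
from statistics import mean

# Hypothetical hypotheses, each rated 1-10 on the three PIE factors.
hypotheses = [
    {"name": "Shorter sign-up form", "potential": 8, "importance": 9, "ease": 6},
    {"name": "New hero headline", "potential": 6, "importance": 7, "ease": 9},
    {"name": "Redesigned pricing table", "potential": 9, "importance": 8, "ease": 3},
]

def pie_score(hypothesis):
    """Average of potential, importance, and ease."""
    return mean(
        [hypothesis["potential"], hypothesis["importance"], hypothesis["ease"]]
    )

ranked = sorted(hypotheses, key=pie_score, reverse=True)
for hypothesis in ranked:
    print(f"{pie_score(hypothesis):.2f}  {hypothesis['name']}")
```

The same structure works for ICE by swapping the three factor names for impact, confidence, and ease.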

Step 4: Determine Your Sample Size and Target Audience

Before you can test your hypothesis, you need to identify your sample size, which is the number of visitors needed for accurate results.

Even if your A/B testing software calculates this for you, understanding this statistic can help you spot testing abnormalities. For example, if you estimate you need 20,000 visitors to reach statistical significance but your test is still running with 120,000 visitors, you’ll want to investigate what’s wrong.

Two other factors to determine upfront are:

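- Test duration: This is the pre-specified amount of time you’ll be running your test to reach valid conclusions. If your test is set to run for 28 days but shows significance after only 14 days, that’s a red flag: early significance often comes from random fluctuation or an incomplete traffic cycle, so let the test run its full course.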

- Minimum detectable effect (MDE): The minimum detectable effect is the minimum difference between versions that your A/B test can reliably find. It essentially defines your test’s sensitivity, because if the real difference is smaller than the MDE, your test probably won’t notice it.
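Sample size, duration, and MDE are linked, and a rough calculation makes the trade-off visible. The sketch below uses the standard normal-approximation formula for comparing two conversion rates, with a two-sided significance level of 0.05 and 80% power as assumed defaults. The baseline rate, MDE, and daily traffic are hypothetical, and your platform’s calculator may use a different method.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Visitors needed per variant to detect a relative lift of `relative_mde`."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided threshold
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)

def estimated_duration_days(n_per_variant, daily_visitors, variants=2):
    """Days needed if all daily visitors are split across the variants."""
    return ceil(n_per_variant * variants / daily_visitors)

# Hypothetical inputs: 5% baseline conversion, 20% relative MDE,
# 1,000 eligible visitors per day.
n = sample_size_per_variant(baseline_rate=0.05, relative_mde=0.20)
days = estimated_duration_days(n, daily_visitors=1_000)
print(f"Visitors per variant: {n}")
print(f"Estimated duration: {days} days")
```

Halving the MDE roughly quadruples the required sample, which is why detecting small differences takes so much more traffic.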

Step 5: Run the Test, Analyze Results, and Act

When it’s time to run the test, split your traffic between version A (control) and version B (variation), and let the data tell the story.

With a platform like Convert, you can easily create variations using a visual editor or custom code, whether you’re changing a headline, tweaking your CTA button color, or hiding an element.

Once your test has run for its planned duration and sample size, analyze your results to decide which actions to take next. Use these insights to iterate further, scale the change, or test a new idea.

If you aren’t quite sure how to interpret the results, this guide on learning from your A/B testing results can help.
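For the analysis step, it often helps to report the size of the difference along with its uncertainty, not just whether it was significant. This is a minimal sketch that assumes a normal-approximation (Wald) confidence interval for the difference in conversion rates. The counts are hypothetical, and `lift_with_interval` is an illustrative helper, not part of any tool mentioned here.

```python
from math import sqrt
from statistics import NormalDist

def lift_with_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Relative lift of B over A plus a confidence interval for the
    absolute difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "relative_lift": diff / p_a,
        "difference": diff,
        "interval": (diff - z * se, diff + z * se),
    }

# Hypothetical results: 400 of 10,000 convert on A, 460 of 10,000 on B.
result = lift_with_interval(400, 10_000, 460, 10_000)
low, high = result["interval"]
print(f"Relative lift: {result['relative_lift']:.1%}")
print(f"Difference: {result['difference']:.2%} "
      f"(95% interval {low:.2%} to {high:.2%})")
```

An interval that excludes zero points to a real difference, while a wide interval signals that the true effect could be much smaller or larger than the observed lift.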

What Can You A/B Test?

You can A/B test nearly every user-facing element. By being intentional about what you test and why you’re testing it, you can run tests that serve different business goals, such as improving user engagement or increasing click-through rate.

Here are some examples of high-impact areas to consider:

- Page elements: Test landing pages, product pages, your homepage, CTAs, forms, and other core conversion points.

- Navigation: Adjust layout, labels, or menu structure to improve ease of use.

- Messaging and offers: Try different headlines, subheads, and body copy to see what resonates.

- Value propositions and social proof: Experiment with testimonials, reviews, and benefit-driven language to build trust.

- Social media and email campaigns: Vary send and post times, fonts, email subject lines, and visuals to see what captures attention.

- Visual and user experience (UX) components: Compare different website designs, page layouts, graphics, colors, animations, and spacing to optimize for usability and performance.

- Images and videos: Swap thumbnails, captions, images with and without people, and color palettes to test how users respond.

Starting Your A/B Testing Program

There’s a difference between running an occasional basic test and building a sustainable, scalable A/B testing program. If you’re looking for the latter, your organization will need to make some cultural changes as well.

For example, you’ll likely need to place more emphasis on cross-functional alignment and an overall commitment to an experimentation culture. You’ll also need to collaborate with internal groups to select the right A/B testing tools and roadmap.

The process often begins with bringing in additional expertise to help lay a foundation for long-term success.

Hiring A/B Testing Talent

If you want to hire an A/B testing specialist, you have three options:

- Get a freelancer

- Hire a conversion rate optimization agency

- Hire a conversion rate optimizer or CRO specialist to join your company

While the first two options have straightforward approaches, the last requires some recruitment. You’ll want to know which qualities to look for, what interview questions to ask, and how to prepare your organization to receive the talent you decide to hire.

Your ideal A/B testing talent should be:

- Curious: A star optimizer always wants to know why things are the way they are. They’re always looking for answers and have a scientific approach to searching for those answers.

- Data-driven and analytical: In search of answers, they aren’t satisfied with opinions and hearsay. They break a problem down into its smallest parts and look for data-backed solutions.

- Empathetic: They are eager to understand people and learn why they do what they do, so that they can communicate as effectively as possible.

- Creative: They can discover innovative solutions to problems and confidently carve a fresh path to get things done.

- Always eager to learn: A great A/B tester isn’t stuck in their ways. They’re willing to challenge their beliefs and welcome new ideas.

A/B Testing Interview Questions

Once you have some promising candidates, asking a few specific interview questions can help you learn:

- How well they understand conversion rate optimization

- What hands-on experience they have with testing

- How they think about experimentation

- How they approach their work

- Whether they understand the limits of what A/B testing can and can’t do

Questions to assess their CRO expertise:

- How important do you think qualitative and quantitative data are to A/B testing?

- When should you use multivariate tests over A/B tests?

- How do you optimize a low-traffic site?

- What do you think most CROs aren’t doing right with A/B testing?

- Do you think A/B tests on a web page affect search engine results?

Questions to learn about their personal experience with testing:

- What was your biggest challenge with A/B testing?

- What is the most surprising thing you’ve learned about customer behavior?

- What is your favorite test win (and test failure)? Why?

- When should you move from free A/B testing tools to paid ones?

Questions to understand their testing philosophy:

- What are the limitations of A/B testing?

- Why do you think most sites don’t convert?

- How would you encourage a culture of experimentation in our organization?

- What’s your take on audience segmentation and how it affects testing accuracy?

Questions to see how they work:

- What is your process for coming up with test ideas?

- How do you prioritize your list of test ideas?

- What tools do you prefer for running A/B tests?

- How would you start using experimentation to optimize our website?

- What would you do if an executive suggests A/B testing when you think it isn’t necessary?

- How do you get baseline metrics of a page before testing?

Best Practices for Picking and Using A/B Testing Tools

Once you have the right people in place, it’s time to settle on your tools. It’s important to choose a tool that offers flexibility, such as testing options on both the client-side and server-side, as well as transparent reporting.

Other things to consider when looking for the top A/B testing tools include:

- Cost: Does the pricing of the A/B testing tool you’re considering justify the results you’re expecting?

- Compliance features: Does the tool warn you of potential privacy violations in your targeting setup?

- Segmentation: Does the software let you divide your data into segments after your test to see how different users responded to test variations?

- Integrations: Can the tool easily integrate with other platforms you already use, such as analytics tools, ecommerce platforms, and CRMs?

- Learning curve: Is the tool easy to navigate, or will it take hours of valuable time just to figure out the basics?

A/B Test Analysis with Google Analytics

Google Analytics 4 (GA4) can still support testing workflows, even post-Google Optimize. You just need to integrate it with a third-party A/B testing platform.

Convert Experiences integrates with GA4 to help you get the most out of your tests.

Common A/B Testing Challenges and Mistakes

Challenges and mistakes are inherent in all kinds of testing, including A/B testing. Knowing what to watch for and how to use A/B testing tools to avoid issues can save you time, resources, and missteps.

Here are some common pitfalls and how to stay ahead of them:

- Miscalculating sample size: An inaccurate sample size leads to unreliable results. Use a testing calculator or your platform’s built-in tools to ensure you’re collecting enough data for statistically valid conclusions.

- Misinterpreting test results: Poor interpretation of results can lead to bad decisions and flawed strategies. Take time to understand the data in context before making changes based on your test.

- Lack of alignment with CRO goals: If your test doesn’t support a broader CRO goal, it’s easy to get off course. Tie each test to a clear objective that supports business growth.

- Testing too much at once: Testing too many elements at once can make it hard to pinpoint what caused a change. Prioritize your tests and isolate variables for clarity. With tools like Convert, you can run multiple tests across separate pages without overlap.

- Not reaching statistical significance: Let every test run to its planned sample size and duration so it has a fair chance to reach statistical significance. Even if one version looks like a clear winner, stopping early raises the risk of a false positive and undermines your results.
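The cost of stopping early can be shown with a simulation. The sketch below runs simulated tests in which both versions are truly identical, then compares two policies: declaring a winner at the first interim check that looks significant, versus evaluating only at the planned end. The 10% rate, 10 interim checks, and z-test are assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def false_positive_rates(rate=0.10, n_per_arm=2_000, peeks=10, runs=300,
                         alpha=0.05, seed=7):
    """Return (rate when stopping at first significant peek,
    rate when evaluating only at the planned end)."""
    rng = random.Random(seed)
    step = n_per_arm // peeks
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        stopped_early = False
        significant = False
        for peek in range(1, peeks + 1):
            # Both arms have the same true rate, so any "win" is a false positive.
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            seen = peek * step
            significant = p_value(conv_a, seen, conv_b, seen) < alpha
            stopped_early = stopped_early or significant
        peeking_hits += stopped_early
        fixed_hits += significant  # only the final check counts here
    return peeking_hits / runs, fixed_hits / runs

peeking_rate, fixed_rate = false_positive_rates()
print(f"False positives when stopping at first significant peek: {peeking_rate:.1%}")
print(f"False positives when waiting for the planned end: {fixed_rate:.1%}")
```

Every extra look at the data is another chance for noise to cross the threshold, so the first policy produces noticeably more false winners than the second.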

A/B Testing Examples

To help inspire ideas for your first experiments, here are two A/B testing examples from other brands.

Csek Creative

Csek, a digital agency, wanted to decrease its home page bounce rate, so it decided to test a new tagline at the top of the page. Tagline A was the original, and tagline B was the variant.

Hypothesis: Changing the tagline to something more explanatory and service-specific will improve bounce rate and click-throughs to other pages on the site.

Tagline A: Csek Creative is a Kelowna-based digital agency that delivers the results that make business sense.

Tagline B: Csek Creative is a digital agency that helps companies with their online and offline marketing needs.

Result: The updated text was shown to more than 600 site visitors and resulted in an 8.2% increase in click-throughs to other pages.

Takeaway: Clarity about what you do or offer within the first seconds of visiting your site is vital. Online audiences don’t want to work to figure that out.

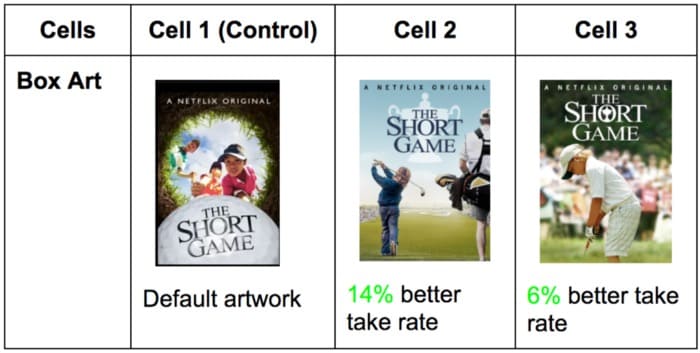

Netflix

Netflix selects the best artwork for its videos using A/B testing. For the movie The Short Game, it tested different artwork options to see whether artwork that better reflected the film’s story would help reel in a larger audience.

Hypothesis: Artwork that illustrates the movie is about kids competing in golf will help increase engagement and attract a wider audience.

Results: The variant in cell two resulted in a 14% better take rate than the control, and the one in cell three increased it by 6%.

Takeaway: Optimizing visual storytelling can increase conversions. Ask yourself whether your graphics are saying exactly what you want them to say or if they’re holding you back.

Put Your A/B Testing Knowledge into Action

A/B testing is a low-cost and low-risk way to try out new ideas that can boost conversions, customer engagement, and ROI. With the right testing strategy and tools, you can better understand your customer base and deliver better experiences.

Convert’s helpful and trustworthy experimentation tool supports teams that need flexibility, privacy compliance, and efficiency when testing multiple elements at once. It enables ethical, high-performance testing through features like advanced audience targeting, segmentation, and a developer-friendly setup, making it a strong choice for in-house optimization teams and CRO agencies alike.

Start your 15-day free trial to begin making scalable changes that drive business growth.

Written by Alex Birkett

Edited by Carmen Apostu