Testing Mind Map Series: How to Think Like a CRO Pro (Part 66)

Interview with Tom van den Berg

Every couple of weeks, we get up close and personal with some of the brightest minds in the CRO and experimentation community.

We’re on a mission to discover what lies behind their success. Get real answers to your toughest questions. Share hidden gems and unique insights you won’t find in the books. Condense years of real-world experience into actionable tactics and strategies.

This week, we’re chatting with Tom van den Berg, Conversion Specialist at De Bijenkorf, owner of the CROweekly newsletter, and co-founder of both Kade 171 and Experimentation Jobs, the #1 Job Board for Experimentation & CRO roles.

Tom, tell us about yourself. What inspired you to get into testing & optimization?

In 2013, while working for T-Mobile in the Netherlands as a web analyst, I was introduced to A/B testing tools. I made some changes using the WYSIWYG editor and put my first experiment live. It did not work in all browsers, and I made more rookie mistakes. For example, I had not checked in advance whether we could even test on that page and had no idea how long the test needed to run. Still, it was a lot of fun.

I was immediately fascinated by the power of experiments. Not much later, when I started at Online Dialogue, I knew that I wanted to switch from web analytics to experimentation.

My focus has always been on the CRO process and streamlining it so that an A/B test can go live in the shortest possible time. As a result, an organization can perform more experiments and in the end, make a greater impact. And yes, I know, the number of experiments is not the only goal, but it is difficult to predict the impact and the number of winners. Remember what Jeff Bezos said: “Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day”.

The total number of experiments performed can be predicted and planned. By analyzing all the steps, it’s often possible to discover that there is much to gain. Is it clear what exactly needs to happen in which step? And who is responsible for which step in the process?

In recent years, I started two companies to broaden my knowledge and try different things: my own online tableware shop and, together with Kevin Anderson, Experimentation Jobs.

In addition, I’ve been running a newsletter in the field of Experimentation / Data / UX for 5 years: CRO weekly, where readers find an overview of interesting experimentation-related reads, events, and jobs. Companies have the opportunity to sponsor CRO weekly by sharing their logo and relevant content with the subscribers to gain brand awareness.

I’m energized by starting and trying different things with an “experimentation” mindset. Trying something, evaluating it, and then determining the next step.

How many years have you been testing for?

A little over 10 years now, since I started at T-Mobile in 2013. Over these 10 years, I have worked for different companies. Working at an agency—Online Dialogue in my case—you learn a lot from various optimization programs across different companies and industries.

At that time, there were few organizations actually running experiments. It was something new and there was little knowledge of it at most organizations. Often, there was one person in the organization working on experimentation.

A few years later at Online Dialogue, we always worked in multidisciplinary teams (data, UX, development, psychology, and conversion specialists).

Now, at De Bijenkorf (a big Dutch retailer), I am the conversion specialist and part of the tech organization. I work closely with product owners, developers, and UX designers to validate the most important changes through experiments. In addition, we work on continuously improving the website, and we still do this in multidisciplinary teams. This way of working has become a habit and is now “normal” in organizations.

What’s the one resource you recommend to aspiring testers & optimizers?

For those without much experience, I recommend working for an organization where learning from more experienced colleagues is possible.

I once made the mistake of working for a company where I was the only conversion specialist. At that time, I didn’t have much experience and couldn’t ask questions or consult with a colleague. You can learn a lot by doing things yourself and making mistakes, but experienced colleagues ensure that you learn faster. And it’s way more fun!

In addition, it helps to go to events and meet people in the same role from other organizations and discuss common topics.

Answer in 5 words or less: What is the discipline of optimization to you?

Learning, doing, having fun!

What are the top 3 things people MUST understand before they start optimizing?

- Statistics

I wish I had paid more attention at university back in the day; it would have been useful now. Luckily, I now understand the basics of statistics within experiments. These concepts should be understood by everyone who is involved in experimentation:

- When you can apply A/B testing as a method

- How long an experiment should run

- How to interpret the results of an A/B test

- How to deal with segmentation within an A/B test result

- How important false positives and false negatives are

And much more…
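To make the "how long should an experiment run" question concrete, here is a minimal Python sketch of a sample size and run-time estimate. It assumes a two-sided two-proportion z-test with the common normal approximation; the function names, the 3% baseline conversion rate, the 10% relative lift, and the 40,000 weekly visitors are all hypothetical values chosen for illustration, not figures from the interview.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (two-sided two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # alpha is the accepted false positive rate, 1 - power the false negative rate.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def run_time_in_weeks(n_per_variant, weekly_visitors, variants=2):
    """Round up to whole weeks so every weekday is covered equally often."""
    return math.ceil(n_per_variant * variants / weekly_visitors)


# Hypothetical inputs: 3% baseline conversion, 10% relative lift to detect.
n = sample_size_per_variant(baseline_rate=0.03, relative_lift=0.10)
weeks = run_time_in_weeks(n, weekly_visitors=40_000)
print(f"{n} visitors per variant, about {weeks} week(s) of traffic")
```

Halving the lift you want to detect roughly quadruples the required sample, which is why it pays to check in advance whether a page has enough traffic to test on at all.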

- Data, research & hypothesis

Make sure you understand how to interpret data (both quantitative and qualitative). If you can also do research yourself, even better. Log in to Google Analytics and write down your findings. Also do a usability study and run a survey.

These activities give so many insights, which you can then use to write a hypothesis. It is important to understand why a good hypothesis matters and why HARKing (Hypothesizing After the Results are Known) is a big issue.

- Not all research is equal

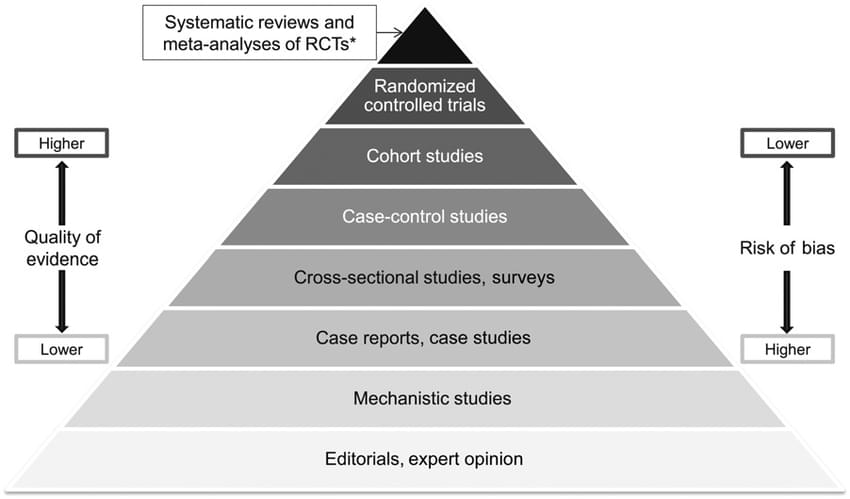

I still see it happen often enough that all research is given the same weight. As you can see in the Hierarchy of Evidence above, there is a difference in the quality of the research. The higher up in the pyramid, the higher the quality of the evidence and the lower the risk of bias. For example, experiments rank above surveys, and a meta-analysis of multiple experiments ranks higher still.

Bonus:

Have the ability to explain experimentation and its importance to other colleagues. There are plenty of people who have a lot of knowledge about experimentation but still find it difficult to get people in the organization, who aren’t directly involved, on board. It is therefore an important skill to be able to explain this simply while considering your colleagues’ interests.

How do you treat qualitative & quantitative data to minimize bias?

By using proper statistical methods and ensuring that the sample size is large enough. I recommend starting with quantitative analysis (in Google Analytics, for example) to understand user behavior (online marketing sources, landing pages, funnels from the most important landing pages, pages with the highest drop-off, etc.). After this analysis, use qualitative data. Ask targeted questions to understand why users exhibit certain behaviors. Be aware that users can behave differently from what they say. Therefore, pay attention to what they do and not what they say.
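As a rough illustration of what "large enough" means for survey data, the Python sketch below computes the margin of error of a survey proportion using the normal approximation. The function name and the response counts are hypothetical and only there to show how the uncertainty shrinks as responses grow.

```python
import math
from statistics import NormalDist


def margin_of_error(positive_answers, responses, confidence=0.95):
    """Half-width of the normal-approximation confidence interval for a proportion."""
    p = positive_answers / responses
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * math.sqrt(p * (1 - p) / responses)


# Hypothetical surveys where 60% of respondents give the same answer.
small = margin_of_error(positive_answers=30, responses=50)
large = margin_of_error(positive_answers=600, responses=1000)
print(f"50 responses: +/- {small:.1%}, 1000 responses: +/- {large:.1%}")
```

With only a handful of responses, the same headline percentage can hide a wide range of plausible values, so it is worth checking this before giving a survey finding much weight.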

Tip: For a survey with a relatively high response rate, place it on the confirmation page. Visitors who have made a purchase are more likely to give feedback.

How (to you) is experimentation different from CRO?

CRO is a part of experimentation, in my opinion. It is more about small changes made to improve the conversion %. It is more of an online marketing activity, similar to SEA and SEO.

Experimentation encompasses everything—including big features tested within the tech department, supply chain adjustments, or even in offline stores. This may not always be based on data, but measuring the impact properly is important.

Talk to us about some of the unique experiments you’ve run over the years.

- Over the last few months, I’ve been conducting some experiments with our supply chain team around delivery time. While I can’t share the results, I highly recommend exploring this area.

- Starting experiments in email marketing is relatively easy and can have a lot of impact. Things like adjusting the subject line, testing different sending times, or adjusting the landing pages.

- Removing distractions in the checkout process. This is something I have tested many times and it generally shows positive results.

Cheers for reading! If you’ve caught the CRO bug… you’re in good company here. Be sure to check back often: we have fresh interviews dropping twice a month.

And if you’re in the mood for a binge read, have a gander at our earlier interviews with Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, May Chin, Kyle Hearnshaw, Gerda Vogt-Thomas, Melanie Kyrklund, Sahil Patel, Lucas Vos, David Sanchez del Real, Oliver Kenyon, David Stepien, Maria Luiza de Lange, Callum Dreniw, Shirley Lee, Rúben Marinheiro, Lorik Mullaademi, Sergio Simarro Villalba, Georgiana Hunter-Cozens, Asmir Muminovic, Edd Saunders, Marc Uitterhoeve, Zander Aycock, Eduardo Marconi Pinheiro Lima, Linda Bustos, Marouscha Dorenbos, Cristina Molina, Tim Donets, Jarrah Hemmant, and Cristina Giorgetti.

Written By

Tom van den Berg

Edited By

Carmen Apostu