Testing Mind Map Series: How to Think Like a CRO Pro (Part 22)

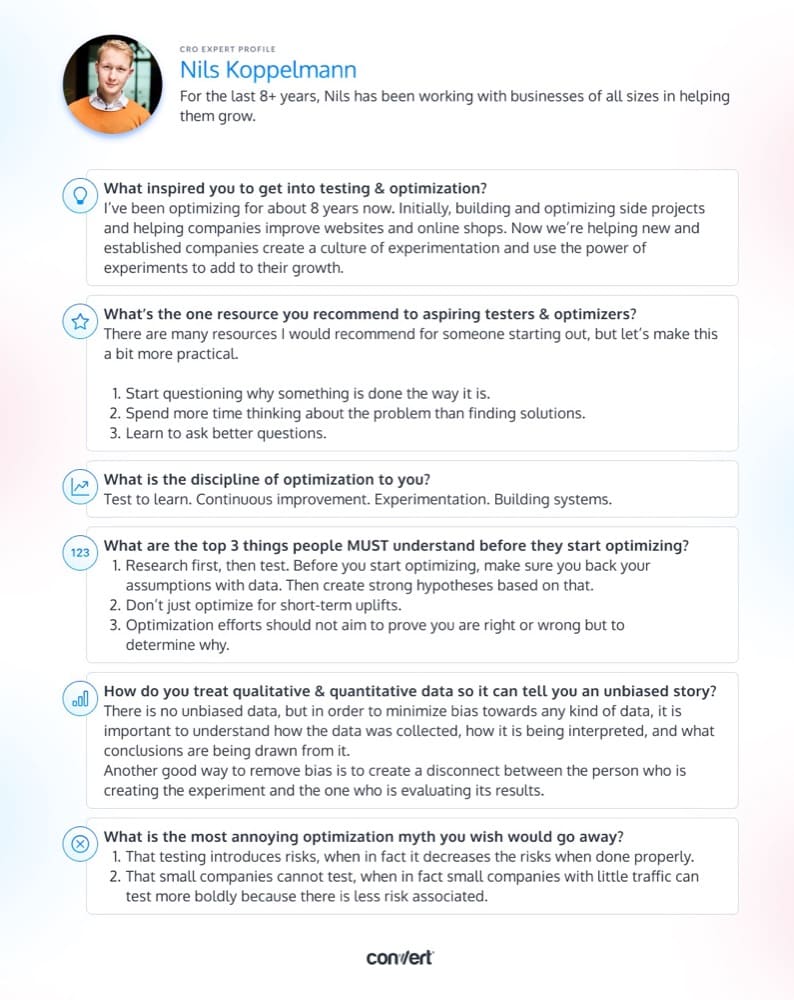

Interview with Nils Koppelmann

Nils Koppelmann is a passionate advocate for the benefits of experimentation and data-driven decision-making. He understands that successful A/B testing isn’t just about finding out if something works, but why it does, and he’s here to dispel two common myths about this practice: that A/B testing introduces risks, and that small companies cannot test effectively.

On the contrary, Nils believes that A/B testing can help reduce risks by providing insights into what works and what doesn’t. And while small companies may have less traffic than large companies, they can actually test more boldly because there is less at stake.

So the next time you think A/B testing sounds too risky or expensive, read this interview with Nils for tips on how to test effectively on your site without introducing any unnecessary risks.

Nils, tell us about yourself. What inspired you to get into testing & optimization?

Over the last 8+ years, I have been building websites and online shops to help clients big and small “optimize” their online presence.

Some time ago, my thoughts turned to how to ensure that our designs really have the desired impact.

When I first encountered the term Conversion Rate Optimization about 3 ½ years ago, I wondered why it wasn’t something we already focused on. From that point onward, I shifted my focus from delivering design and technology to providing insights and results.

The world of optimization holds so much potential that is still largely unexplored by the majority of companies online. We should take advantage of the vast amount of data available and learn from it so that we can continuously improve.

One of the most striking things for me is how much fun it is to learn again. Never did I think that I would voluntarily open up a book about statistics (shoutout to Georgi Georgiev and his great book Statistical Methods in Online A/B Testing) and actually read it. This and many other aspects keep on inspiring me to test to learn.

How many years have you been optimizing?

The desire to optimize stems from being unsatisfied with the status quo, curiosity about what more is possible, and the certainty that anything can be improved.

In a professional context, I’ve been optimizing for about 8 years now. Initially, that meant building and optimizing side projects and helping companies improve websites and online shops. Now we’re helping new and established companies create a culture of experimentation and use the power of experiments to add to their growth.

Thinking back, I don’t recall ever not optimizing. Already as a kid, I always questioned the way things were done. I remember my dad saying that I asked “too many” questions, something that in retrospect I’m really happy that I did and still do.

Even in my personal life, I am known to track and optimize most aspects of my life.

What’s the one resource you recommend to aspiring testers & optimizers?

There are many resources I would recommend for someone starting out, but let’s make this a bit more practical.

To get started, here are a few suggestions:

- Be more curious, and start questioning why something is done the way it is. This alone will open up a whole new view of the world.

- Spend more time thinking about the problem than finding solutions. You first need to really understand the problem, then the solutions will come more easily.

As a quote often attributed to Albert Einstein puts it, “If I had an hour to solve a problem, I’d spend 55 minutes thinking about the problem and 5 minutes thinking about solutions.”

That said, it is important to think outside the box, which means not only thinking within the parameters of the problem but also considering outside angles and possibilities.

The key is to find a balance between the two.

- Learn to ask better questions. This is one of the most helpful tools any optimizer can have in their arsenal because it enables and taps into curiosity.

Also, I share interesting articles, resources, and tools in my weekly experimentation newsletter, which is addressed to experimentation beginners and veterans alike.

Answer in 5 words or less: What is the discipline of optimization to you?

Test to learn. Continuous improvement. Experimentation. Building systems.

What are the top 3 things people MUST understand before they start optimizing?

Research first, then test. Before you start optimizing, make sure you back your assumptions with data, qualitative and quantitative. Then create strong hypotheses based on that.

Don’t just optimize for short-term uplifts. While it is vitally important for the program to have a positive ROI, it should not focus on that alone but also consider the wide range of learning opportunities and the risk reduction that experimentation brings.

Optimization efforts should not aim to prove you are right or wrong, but to determine why, in either case. There is no point in optimizing anything if you don’t understand how you got there and how to get there again. For long-term success in A/B testing, it is crucial to have good systems in place.

How do you treat qualitative & quantitative data so it tells an unbiased story?

There is no unbiased data, but in order to minimize bias towards any kind of data, it is important to understand how the data was collected, how it is being interpreted, and what conclusions are being drawn from it.

To gauge how reliable a given piece of data is, check out the Hierarchy of Evidence.

We use quantitative data to pre-filter, then use qualitative data and scientific resources to go deeper, and then again quantitative data to prove or disprove initial assumptions and hypotheses.

At the top of our efforts is the so-called meta-analysis, which lets us look for patterns across previous experiments and align further research and experimentation efforts.
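The interview does not say how this meta-analysis is carried out. As one illustration only, a common textbook approach is fixed-effect, inverse-variance pooling, sketched here in Python. The function name `pool_fixed_effect` and the numbers in `past_tests` are hypothetical and not taken from any real experiments.

```python
from math import sqrt

def pool_fixed_effect(results):
    """Combine (effect, standard_error) pairs using inverse-variance weights.

    Assumes every experiment estimates the same underlying effect
    (the fixed-effect assumption), which may not hold in practice.
    """
    weights = [1 / se ** 2 for _, se in results]
    pooled = sum(w * effect for w, (effect, _) in zip(weights, results)) / sum(weights)
    pooled_se = sqrt(1 / sum(weights))
    return pooled, pooled_se

# Hypothetical absolute conversion-rate lifts and standard errors
# from three past tests on a similar theme (illustrative values only).
past_tests = [(0.004, 0.003), (0.007, 0.004), (-0.001, 0.005)]
pooled, pooled_se = pool_fixed_effect(past_tests)
print(f"pooled lift: {pooled:.4f}, approx. 95% margin: {1.96 * pooled_se:.4f}")
```

More precise experiments (smaller standard errors) get more weight. If the past tests differ a lot in audience or design, a random-effects model would probably be the more cautious choice.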

Another good way to remove bias is to create a disconnect between the person who is creating the experiment and the one who is evaluating its results. This minimizes the bias towards the success of an experiment.

What is the most annoying optimization myth you wish would go away?

I would like to dispel two myths:

- That testing introduces risks, when in fact it decreases risk when done properly.

- That small companies cannot test, when in fact small companies with little traffic can test more boldly because less is at stake.
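The second myth can be made more concrete with a rough sample-size calculation. The Python sketch below uses the standard normal-approximation formula for a two-sided, two-proportion test. It rests on an assumption the interview does not make explicitly: that bolder changes tend to produce larger effects, which is not guaranteed. The function name `visitors_per_variant`, the 3% baseline rate, and the lift values are illustrative choices.

```python
from math import ceil, sqrt
from statistics import NormalDist

def visitors_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

# Assumed 3% baseline conversion rate; lifts chosen for illustration only.
for lift in (0.05, 0.10, 0.30):
    n = visitors_per_variant(0.03, lift)
    print(f"{lift:.0%} relative lift: about {n} visitors per variant")
```

Required traffic falls roughly with the square of the effect size, so a low-traffic site aiming for a large change needs far fewer visitors than one trying to detect a small tweak.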

Sometimes, finding the right test to run next can feel like a difficult task. Download the infographic above to use when inspiration becomes hard to find!

Hopefully, our interview with Nils will help guide your experimentation strategy in the right direction!

What advice resonated most with you?

And if you haven’t already, check out our past interviews with CRO legends Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, and our latest with Steph Le Prevost.

Written By

Nils Koppelmann

Edited By

Carmen Apostu