Testing Mind Map Series: How to Think Like a CRO Pro (Part 39)

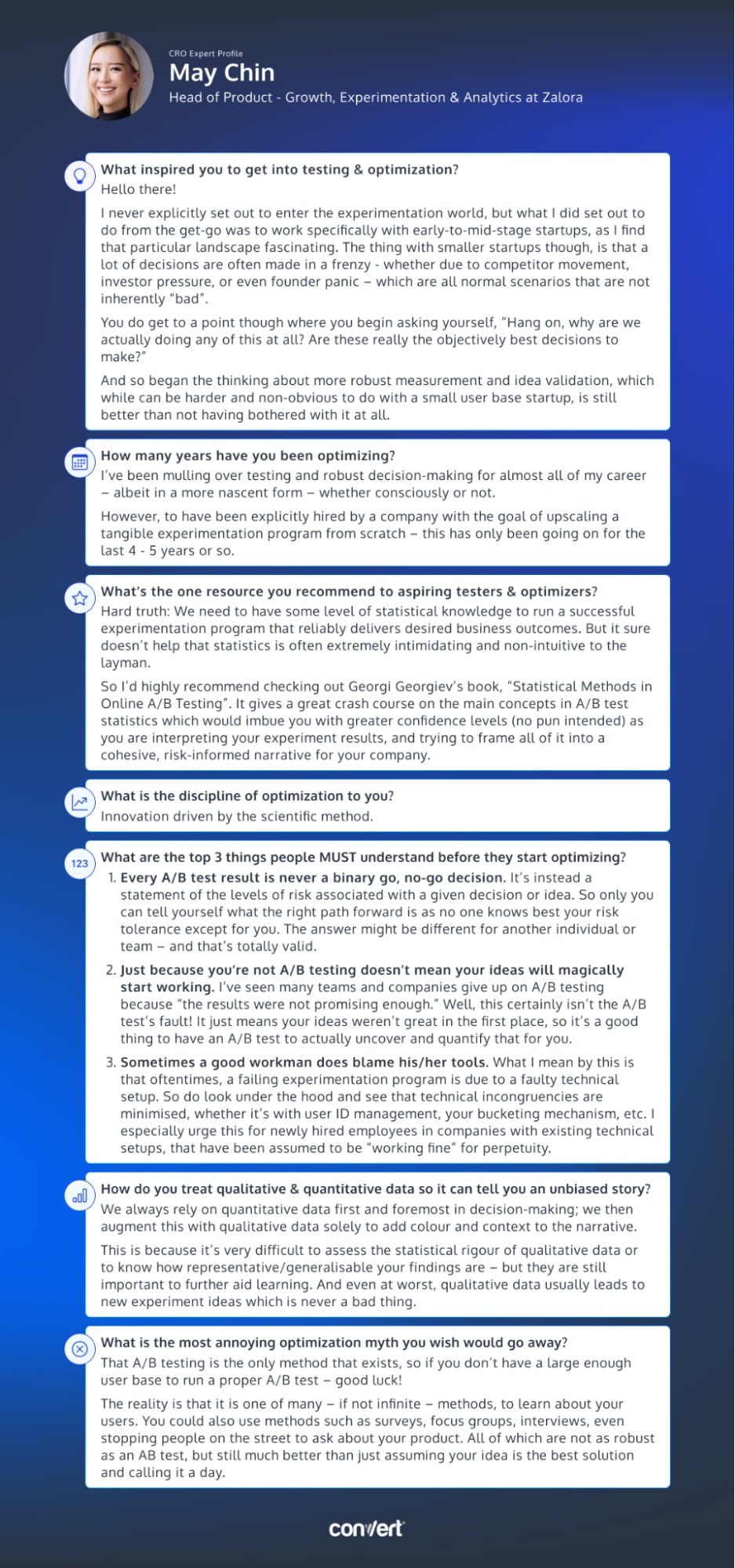

Interview with May Chin

May Chin’s path to experimentation wasn’t a straight line. She gravitated toward the fast-paced world of startups, where decisions are often made on the fly. Amid this chaos, she began to question the method behind the madness, which led her to the realm of robust measurement and idea validation.

May describes optimization as “Innovation driven by the scientific method.” In her view, an A/B test result isn’t a binary go/no-go decision but a statement of risk. Rather than sorting outcomes into successes and failures, this approach encourages us to view optimization through the lens of insight and discernment.

May’s insights, anecdotes, and advice will guide you through the exciting world of testing and optimization. So buckle up and let’s dive right in…

May, tell us about yourself. What inspired you to get into testing & optimization?

Hello there!

I never explicitly set out to enter the experimentation world, but what I did set out to do from the get-go was to work specifically with early-to-mid-stage startups, as I find that particular landscape fascinating. The thing with smaller startups, though, is that a lot of decisions are made in a frenzy – whether due to competitor moves, investor pressure, or even founder panic. These are all normal scenarios, and they’re not inherently “bad”.

You do get to a point though where you begin asking yourself, “Hang on, why are we actually doing any of this at all? Are these really the objectively best decisions to make?”

And so began my thinking about more robust measurement and idea validation. It can be harder and less obvious to do at a startup with a small user base, but it’s still better than not bothering with it at all.

How many years have you been optimizing for?

I’ve been mulling over testing and robust decision-making for almost all of my career – albeit in a more nascent form – whether consciously or not.

However, being explicitly hired by a company to build and scale a tangible experimentation program from scratch is something I’ve only been doing for the last four to five years or so.

What’s the one resource you recommend to aspiring testers & optimizers?

Hard truth: We need to have some level of statistical knowledge to run a successful experimentation program that reliably delivers desired business outcomes. But it sure doesn’t help that statistics is often extremely intimidating and non-intuitive to the layman.

So I’d highly recommend Georgi Georgiev’s book, “Statistical Methods in Online A/B Testing”. It’s a great crash course in the main concepts of A/B test statistics, and it will give you greater confidence levels (no pun intended) as you interpret your experiment results and frame them into a cohesive, risk-informed narrative for your company.
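To make the idea of a “risk-informed narrative” a little more concrete, here is a minimal Python sketch. It is our own illustration, not May’s method or an example taken from Georgiev’s book. It turns raw conversion counts into a 95% confidence interval for the difference between two variants, assuming a simple Wald interval for two independent proportions. The function name `lift_interval` and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def lift_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for the absolute difference in
    conversion rate between variant B and control A (B minus A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Standard error of the difference between two independent proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Two-sided critical value (about 1.96 for 95% confidence).
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff - z * se, diff + z * se


# Hypothetical counts: 200 of 5,000 users converted in A, 230 of 5,000 in B.
low, high = lift_interval(200, 5000, 230, 5000)
print(f"95% CI for B - A: {low:+.4f} to {high:+.4f}")
```

With these made-up numbers the interval straddles zero: shipping B could plausibly bring a gain of over a percentage point, but it also carries some chance of a small loss. Whether that trade-off is acceptable is exactly the risk-tolerance call the statistics can inform but not make for you. The Wald interval is simply the easiest to write down; it can behave poorly with very small samples or conversion rates close to 0 or 1.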

Answer in 5 words or less: What is the discipline of optimization to you?

Innovation driven by the scientific method

What are the top 3 things people MUST understand before they start optimizing?

- An A/B test result is never a binary go/no-go decision. It’s a statement of the level of risk associated with a given decision or idea. Only you can decide the right path forward, because no one knows your risk tolerance better than you do. The answer might be different for another individual or team – and that’s totally valid.

- Just because you stop A/B testing doesn’t mean your ideas will magically start working. I’ve seen many teams and companies give up on A/B testing because “the results were not promising enough.” Well, that certainly isn’t the A/B test’s fault! It just means your ideas weren’t great in the first place, so it’s a good thing you had an A/B test to uncover and quantify that for you.

- Sometimes a good workman does blame his/her tools. What I mean is that a failing experimentation program is often due to a faulty technical setup. So do look under the hood and make sure technical inconsistencies are minimised, whether in user ID management, your bucketing mechanism, or elsewhere. I especially urge this for new hires at companies whose existing technical setups have long been assumed to be “working fine”.
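As an illustration of what looking under the hood can involve, here is a minimal Python sketch of two common checks. It is our own example, not a description of May’s setup. The first function buckets users deterministically by hashing, so the same user ID always lands in the same variant; the second runs a sample ratio mismatch (SRM) test, which flags when the observed split drifts from the intended one. The function names, the `experiment:user_id` hashing key, and the 10,000-bucket granularity are assumptions chosen for illustration.

```python
import hashlib
from math import erfc, sqrt


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically bucket a user: the same (experiment, user_id)
    pair always maps to the same variant."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Treat the first 8 bytes of a SHA-256 digest as a uniform integer, then map it to 10,000 buckets.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 10_000
    return "treatment" if bucket < treatment_share * 10_000 else "control"


def srm_p_value(n_control: int, n_treatment: int, treatment_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) comparing the
    observed split with the intended one. Small values suggest a sample ratio mismatch."""
    total = n_control + n_treatment
    expected_treatment = total * treatment_share
    expected_control = total - expected_treatment
    chi2 = ((n_treatment - expected_treatment) ** 2 / expected_treatment
            + (n_control - expected_control) ** 2 / expected_control)
    # For 1 degree of freedom, the chi-square survival function equals erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))


# Hypothetical user IDs; a healthy setup should produce a roughly 50/50 split.
counts = {"control": 0, "treatment": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-test")] += 1
print(counts, srm_p_value(counts["control"], counts["treatment"]))
```

A very small SRM p-value (a common convention is to investigate below a strict threshold such as 0.001, though the exact cut-off is a judgement call) suggests that something upstream, such as ID handling, redirects, or tracking, is dropping or duplicating users in one arm. In that case the test’s results shouldn’t be trusted until the cause is found.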

How do you treat qualitative & quantitative data to minimize bias?

We always rely on quantitative data first and foremost in decision-making; we then augment this with qualitative data solely to add colour and context to the narrative.

This is because it’s very difficult to assess the statistical rigour of qualitative data or to know how representative and generalisable its findings are. It’s still important for deepening our learning, though. And at worst, qualitative data usually leads to new experiment ideas, which is never a bad thing.

What is the most annoying optimization myth you wish would go away?

That A/B testing is the only method that exists, so if you don’t have a large enough user base to run a proper A/B test – good luck!

In reality, it’s one of many (if not infinitely many) methods for learning about your users. You could also use surveys, focus groups, interviews, or even stop people on the street to ask about your product. None of these are as robust as an A/B test, but they’re all much better than assuming your idea is the best solution and calling it a day.

Download the infographic above and add it to your swipe file for a little inspiration when you’re feeling stuck!

Thank you for joining us for this exclusive interview with May! We hope you’ve gained some valuable insights from her experiences and advice, and we strongly encourage you to put them into action in your own optimization efforts.

Check back once a month for upcoming interviews! And if you haven’t already, check out our past interviews with CRO pros Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, and our latest with Daniel Jones.