Testing Mind Map Series: How to Think Like a CRO Pro (Part 77)

Interview with Mike Fawcett

There’s the experimentation everyone talks about. And then there’s how it actually happens.

We’re hunting for signals in the noise to bring you conversations with people who live in the data. The ones who obsess over test design and know how to get buy-in when the numbers aren’t pretty.

They’ve built systems that scale. Weathered the failed tests. Convinced the unconvincible stakeholders.

And now they’re here: opening up their playbooks and sharing the good stuff.

This week, we’re chatting with Mike Fawcett, CRO Lead & Founder at Mammoth Website Optimisation.

Mike, tell us about yourself. What inspired you to get into testing & optimization?

I used to work in SEO, back in the good old days of paid link building. But as Google got smarter, the job got harder, and it became increasingly difficult to attribute any performance changes you saw to your own work.

While all that was happening, a friend of mine was doing this funny thing called “CRO”. He was having a big impact, he had reliable data, and could show clear cause & effect. It felt like the total opposite of SEO at the time. So yeah, that’s what originally inspired my move into testing & optimisation.

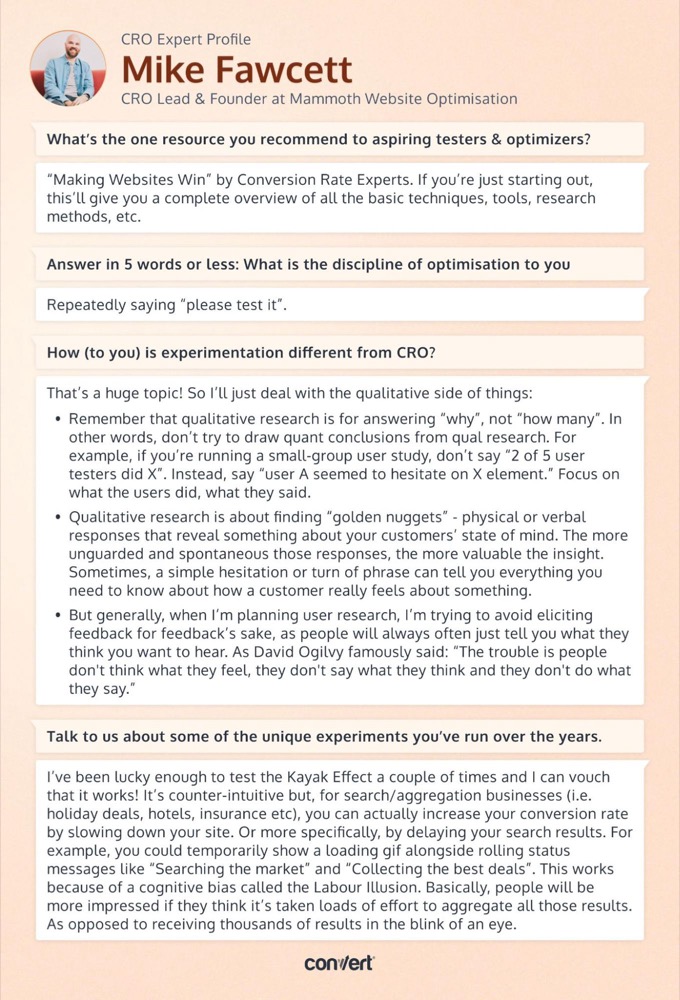

What’s the one resource you recommend to aspiring testers & optimizers?

“Making Websites Win” by Conversion Rate Experts. If you’re just starting out, this’ll give you a complete overview of all the basic techniques, tools, research methods, etc.

Answer in 5 words or less: What is the discipline of optimization to you?

Repeatedly saying “please test it”.

What are the top 3 things people MUST understand before they start optimizing?

I don’t think there’s anything you MUST understand before you get started, but here are 3 things I wish I’d known at the beginning:

- Don’t optimise on a “hunch”. Speak to customers, learn their motivations, anxieties & preconceptions. This is the way.

- Misinterpreting test results is the easiest way to waste money and resources. So get a grasp of concepts like “sample size” and “power”. Then you won’t look foolish popping champagne over false positives, and you’ll have a better chance of finding true winners.

- It’s ok to test multiple changes at once. Sure, we’d all love to test every variable independently, but that just isn’t practical for everyone and it can drain resources quickly. The more interventions you throw at a problem, the higher your chances of moving the needle! Just remember that, with this approach, you won’t know why the needle moved (if it did) and you run the risk of moving it the wrong way.
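
To make “sample size” and “power” a little more concrete, here is a minimal sketch of a pre-test sample size estimate for a two-variant conversion test. It assumes a two-sided test and the standard normal approximation for comparing two proportions; the function name, the default values and the example numbers are illustrative assumptions, not something taken from the interview.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a relative lift.

    Assumes a two-sided test on two proportions using the normal
    approximation. All parameter names and defaults are illustrative.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # expected rate in the variant

    # Critical values for the chosen false-positive rate and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the two binomial variances, divided by the squared effect size.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical scenario: 5% baseline conversion rate, hoping to detect a 10% relative lift.
visitors_needed = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.10)
print(visitors_needed)
```

With these made-up inputs the estimate comes out at roughly 31,000 visitors per variant. Halving the detectable lift roughly quadruples that figure, which is why small effects are so expensive to detect reliably.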

How do you treat qualitative & quantitative data to minimize bias?

That’s a huge topic! So I’ll just deal with the qualitative side of things:

- Remember that qualitative research is for answering “why”, not “how many”. In other words, don’t try to draw quant conclusions from qual research. For example, if you’re running a small-group user study, don’t say “2 of 5 user testers did X”. Instead, say “user A seemed to hesitate on X element.” Focus on what the users did and what they said.

- Qualitative research is about finding “golden nuggets” – physical or verbal responses that reveal something about your customers’ state of mind. The more unguarded and spontaneous those responses, the more valuable the insight. Sometimes, a simple hesitation or turn of phrase can tell you everything you need to know about how a customer really feels about something.

- But generally, when I’m planning user research, I’m trying to avoid eliciting feedback for feedback’s sake, as people will often just tell you what they think you want to hear. As David Ogilvy famously said: “The trouble is people don’t think what they feel, they don’t say what they think and they don’t do what they say.”

How (to you) is experimentation different from CRO?

Conversion rate isn’t the only metric we optimise, so I appreciate the industry re-branding towards ‘experimentation’, but I think “causal inference” is a better title. We’re in the business of establishing causality between our interventions and our data, whether that’s through a traditional “experiment” or not. Techniques like Difference-in-Differences and Regression Discontinuity Design are just two of the many other methods available to us.
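
As a rough illustration of the Difference-in-Differences idea mentioned above, the simplest two-group, two-period version subtracts the change in a comparison group from the change in the group that received the intervention. The sketch below assumes the two groups would have followed parallel trends without the intervention; the function name and all figures are made up for illustration.

```python
def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Basic 2x2 Difference-in-Differences estimate.

    Assumes the treated and control groups would have moved in parallel
    had there been no intervention. Names and inputs are illustrative.
    """
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    # Whatever change the control group saw is treated as background trend.
    return treated_change - control_change


# Hypothetical conversion rates (in %) before and after a change that was
# rolled out to one region only, with a second region as the comparison.
effect = difference_in_differences(
    treated_before=4.0, treated_after=5.0,
    control_before=4.1, control_after=4.4,
)
print(f"Estimated effect: {effect:.1f} percentage points")
```

In this made-up case the treated region rose by 1.0 point and the comparison region by 0.3, so about 0.7 points would be attributed to the intervention. A real analysis would also need uncertainty estimates and some check that the parallel-trends assumption is plausible.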

How do you see AI’s role in optimization & experimentation?

We all know it’s revolutionising the way we work. I use it almost daily for things like writing & de-bugging code, content inspiration and research support. However, I want to pour a little water on the “CRO is dead” rhetoric:

- Apple’s recent paper (“The Illusion of Thinking”) suggests LLMs are still a long way from true logical reasoning. As Rand Fishkin says, an LLM is still just “spicy autocomplete”. So I think we’ve got a while yet before the AI agents come for our CRO jobs.

- And for those worried about the dead-internet theory: yes, an ever-increasing portion of shoppers already start their searches on AI platforms instead of Google. But I think it’s going to be a while before the average Joe will be comfortable letting an AI agent loose on the internet with their credit card details. And that’s assuming banks have figured out how to handle agentic verification. So, in the near future at least, there will still be an internet full of websites used by humans.

Talk to us about some of the unique experiments you’ve run over the years.

I’ve been lucky enough to test the Kayak Effect a couple of times and I can vouch that it works! It’s counter-intuitive but, for search/aggregation businesses (e.g. holiday deals, hotels, insurance), you can actually increase your conversion rate by slowing down your site. Or, more specifically, by delaying your search results. For example, you could temporarily show a loading gif alongside rolling status messages like “Searching the market” and “Collecting the best deals”. This works because of a cognitive bias called the Labour Illusion. Basically, people will be more impressed if they think it’s taken loads of effort to aggregate all those results than if they receive thousands of results in the blink of an eye.

Cheers for reading! If you’ve caught the CRO bug… you’re in good company here. Be sure to check back often: we have fresh interviews dropping twice a month.

And if you’re in the mood for a binge read, have a gander at our earlier interviews with Gursimran Gujral, Haley Carpenter, Rishi Rawat, Sina Fak, Eden Bidani, Jakub Linowski, Shiva Manjunath, Deborah O’Malley, Andra Baragan, Rich Page, Ruben de Boer, Abi Hough, Alex Birkett, John Ostrowski, Ryan Levander, Ryan Thomas, Bhavik Patel, Siobhan Solberg, Tim Mehta, Rommil Santiago, Steph Le Prevost, Nils Koppelmann, Danielle Schwolow, Kevin Szpak, Marianne Stjernvall, Christoph Böcker, Max Bradley, Samuel Hess, Riccardo Vandra, Lukas Petrauskas, Gabriela Florea, Sean Clanchy, Ryan Webb, Tracy Laranjo, Lucia van den Brink, LeAnn Reyes, Lucrezia Platé, Daniel Jones, May Chin, Kyle Hearnshaw, Gerda Vogt-Thomas, Melanie Kyrklund, Sahil Patel, Lucas Vos, David Sanchez del Real, Oliver Kenyon, David Stepien, Maria Luiza de Lange, Callum Dreniw, Shirley Lee, Rúben Marinheiro, Lorik Mullaademi, Sergio Simarro Villalba, Georgiana Hunter-Cozens, Asmir Muminovic, Edd Saunders, Marc Uitterhoeve, Zander Aycock, Eduardo Marconi Pinheiro Lima, Linda Bustos, Marouscha Dorenbos, Cristina Molina, Tim Donets, Jarrah Hemmant, Cristina Giorgetti, Tom van den Berg, Tyler Hudson, Oliver West, Brian Poe, Carlos Trujillo, Eddie Aguilar, Matt Tilling, Jake Sapirstein, Nils Stotz, Hannah Davis, and Jon Crowder.

Written By

Mike Fawcett

Edited By

Carmen Apostu