Sample Ratio Mismatch (SRM): A Complete Guide with Solutions to Customer Cases

What’s worse than a failed test?

Test data quality issues that make test results unreliable.

But how can you stay away from bad data?

Checking for Sample Ratio Mismatch (SRM) is a simple way to catch potential problems early. If something is fishy, then the sooner you find out, the better.

Read on to learn more about Sample Ratio Mismatch, how to spot it, how it affects your tests, and which A/B testing platforms come with built-in SRM checks (so you don’t have to keep a spreadsheet on the side).

What Is Sample Ratio Mismatch (SRM)?

Sample Ratio Mismatch, or SRM, happens in A/B testing when the actual number of samples (or visitors in a treatment group) does not match what was expected.

Let’s illustrate this with an example.

Say a website gets around 15k visitors per week. We have 3 variations: the original (which is the unchanged page) and 2 variants. How much traffic do you expect each one to receive if traffic is equally allocated? In an ideal world, each variation would receive 15,000 / 3 = 5,000 visitors.

Now, it is very unlikely that each variation will actually receive exactly 5,000 visitors. More likely, each will get a number very close to that, like 4,982 or 5,021. That slight variation is normal and is due to simple randomness! But if one of the variations were to receive 3,500 visitors and the others around 5,000, then something might be wrong with that one!

Rather than relying on our own intuition to spot these problems, we can go for the SRM test instead. It uses the Chi-square goodness of fit test to tell us, for instance, whether 4,850 or 4,750 visitors in one variation is “normal” given what the other variations received!

In statistical terms, the Chi-square goodness of fit test compares the observed number of samples against the expected ones. If the resulting p-value falls below the set significance level of 0.01, which corresponds to a confidence level of 99%, the difference is unlikely to be due to chance alone.
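To make the arithmetic concrete, here is a minimal Python sketch of that goodness of fit test for the three-variation example. The function name and the exact visitor counts are illustrative assumptions, not output from any testing platform. With three groups the test has 2 degrees of freedom, and in that special case the p-value has the simple closed form exp(-chi2 / 2), so no statistics library is needed.

```python
import math

def srm_p_value_three_way(observed):
    """Chi-square goodness-of-fit test for three equally weighted variations."""
    if len(observed) != 3:
        raise ValueError("this sketch only handles exactly three variations")
    expected = sum(observed) / 3  # equal allocation across the three variations
    chi2 = sum((o - expected) ** 2 / expected for o in observed)
    # 3 groups -> 2 degrees of freedom, where P(X > chi2) = exp(-chi2 / 2).
    return chi2, math.exp(-chi2 / 2)

# Illustrative counts: one healthy-looking split, one with a starved variation.
for counts in ([4982, 5021, 4997], [3500, 5000, 5000]):
    chi2, p = srm_p_value_three_way(counts)
    verdict = "possible SRM" if p < 0.01 else "looks fine"
    print(counts, f"chi2={chi2:.2f}", f"p={p:.3g}", verdict)
```

The first split stays far above the 0.01 level, while the split with only 3,500 visitors in one variation falls far below it.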

Watch this video with Lukas Vermeer as he dives into the specifics of SRM and more FAQs on the topic.

Does Your A/B Test Have an SRM? How to Diagnose Sample Ratio Mismatch?

In A/B testing, SRM can be a real boogeyman, causing inaccurate results and misguided conclusions. The good news is that there are tools out there that can help you avoid headaches.

Using Spreadsheets

Spreadsheets are the simplest way to calculate SRM, thanks to the wide availability of Microsoft Excel and Google Sheets.

Let’s show you another example.

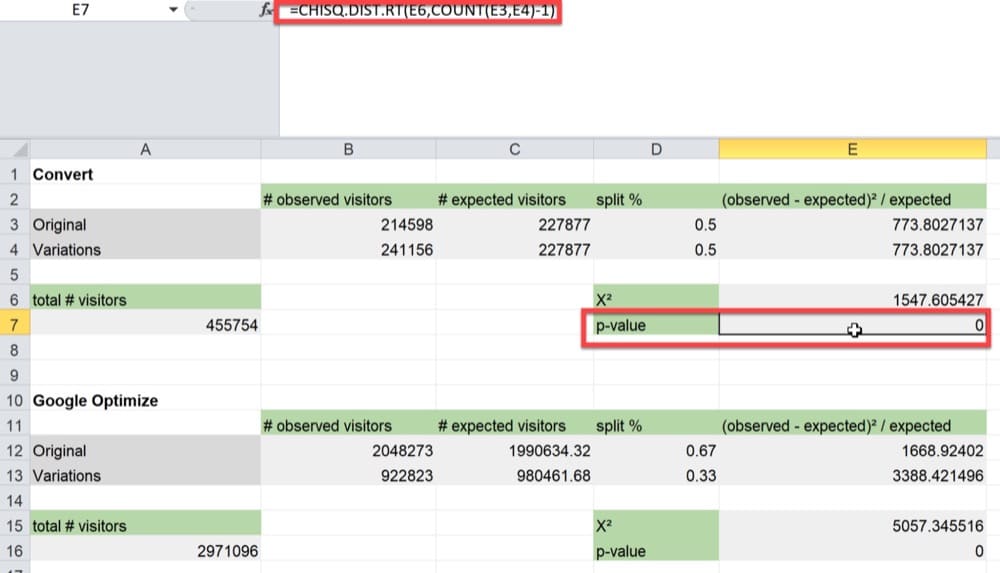

We’ll compute SRM for an A/B test with a 50/50 traffic split and observed numbers of visitors of 214,598 and 241,156 for the Original and Variation, respectively.

We’ll use the Chi-squared test to see if the observed traffic split matches the expected traffic split. In case it doesn’t, you’ll want to know if the observed values differ sufficiently from the expected values to cause concern and warrant discarding the results.

You’ll need to use the CHISQ.TEST function in your spreadsheet to calculate the p-value, as illustrated in the spreadsheet below.

In our example, the p-value is so small that the spreadsheet displays it as 0. With a p-value under 0.05, let alone the stricter 0.01 level mentioned earlier, you have an SRM on your hands and enough evidence to dismiss the test findings in most cases.
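If you prefer a script to a spreadsheet, the same check can be sketched in a few lines of Python. This is an illustration rather than a reproduction of CHISQ.TEST internals: the function name, the `expected_ratio` parameter, and the default `alpha` of 0.01 are assumptions made for this example. With two groups the test has 1 degree of freedom, so the p-value can be computed with `math.erfc` from the standard library.

```python
import math

def srm_check(observed, expected_ratio=(0.5, 0.5), alpha=0.01):
    """Two-group chi-square goodness-of-fit check for sample ratio mismatch."""
    total = sum(observed)
    expected = [total * share for share in expected_ratio]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Two groups -> 1 degree of freedom, where P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, p_value < alpha

# Visitor counts from the spreadsheet example (Original, Variation).
chi2, p_value, has_srm = srm_check([214_598, 241_156])
print(f"chi2={chi2:.1f}, p={p_value}, SRM={has_srm}")
```

For these counts the chi-square statistic is roughly 1,548, and the p-value is too small to represent as a floating-point number, which is why a spreadsheet shows it as 0.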

Using Online Sample Ratio Mismatch Calculators

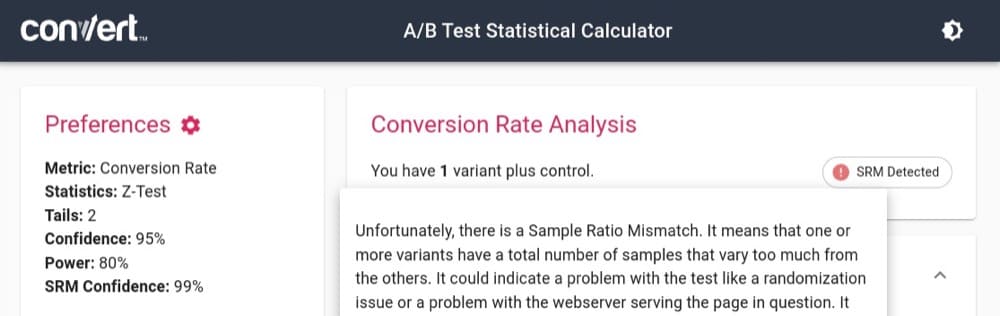

- Convert’s calculator can help with diagnosing sample ratio mismatch and it also tells you how much time you need to wait for your experiment to complete!

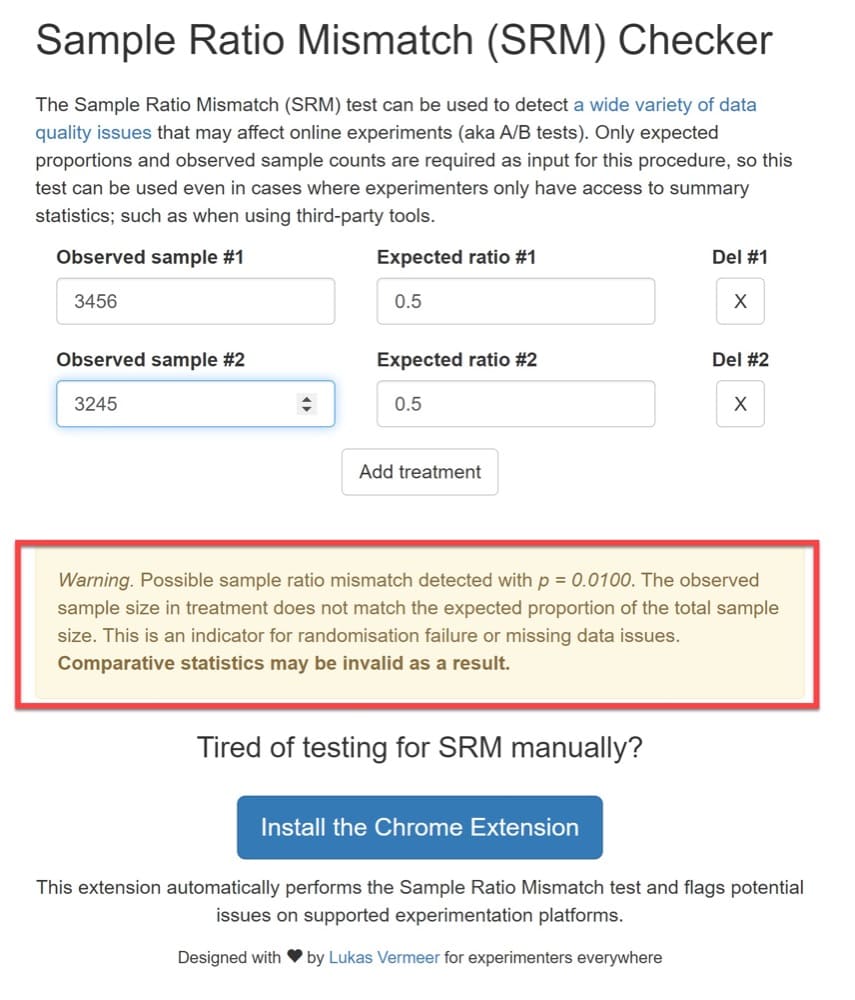

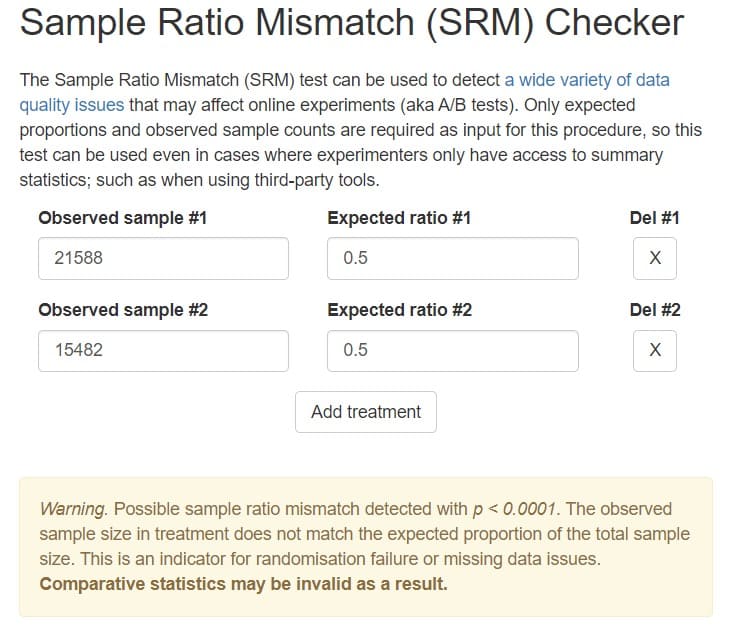

- Another sample ratio mismatch calculator is the one designed by Lukas Vermeer. This method calculates SRM in the same manner as the previous technique, so if you followed along and understood the process, you should be able to use this online SRM calculator. Just fill in the numbers for your samples and the result will show like this 👇

How Does SRM Affect A/B Tests?

It’s likely that you have looked at the traffic split between variants during an experiment and questioned how accurate it was.

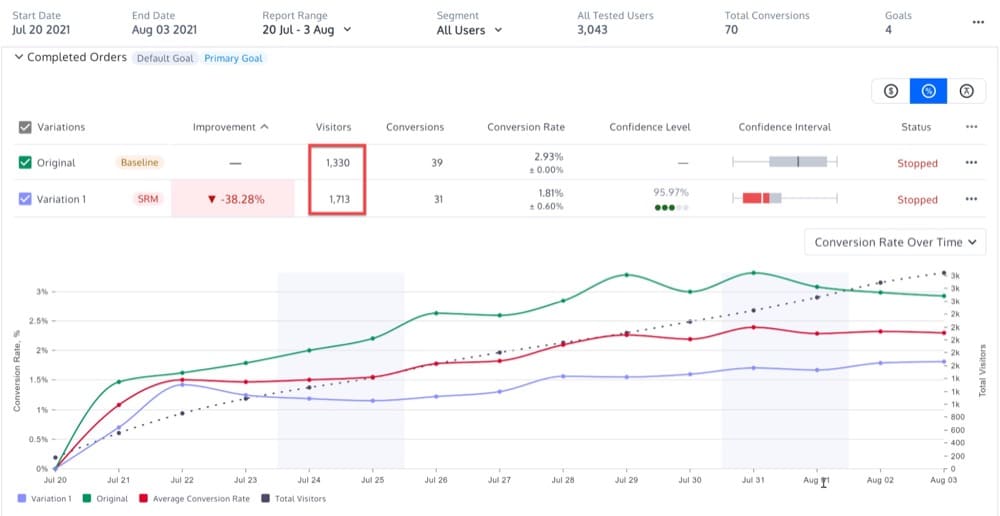

Perhaps it was a report like this one. You might look at it and wonder if it’s normal that the Original had 1330 visitors but the Variation 1713.

A short SRM calculation (using either of the two methods above) will tell you whether the traffic split is acceptable or not.

Does the actual split between the two variations (Original and Variation 1) correspond to the expected values? If that isn’t the case, you should reject the data and relaunch the test when you’ve solved the problem.

Does SRM Affect both Frequentist and Bayesian Stats Models?

Yes.

The causes of SRM have an identical impact on the validity of an experiment’s results whether the data is analyzed with Bayesian (Google Optimize, Optimizely, VWO, AB Tasty) or Frequentist (Convert Experiences, Dynamic Yield) approaches.

So the sample ratio mismatch calculators above can also be used to check for SRM on platforms that use Bayesian statistics.

When and Why Is SRM a Problem?

Finding a Sample Ratio Mismatch in your tests does not necessarily mean you need to discard the results.

So when is it really necessary to take the SRM diagnosis seriously?

Let’s find out with a few examples.

You run an experiment where the Original and Variation are each assigned 50% of users. You, therefore, expect to see about an equal number of users in each.

The results come back as

- Control: 21,588 users

- Treatment: 15,482 users

Let’s put them through the SRM Checker:

Is this a cause for concern?

The p-value for the sample ratio above is <0.0001, so the probability of seeing this ratio or a more extreme one, under a design that called for equal proportions, is <0.0001!

You should absolutely be worried that something is wrong, as you just observed an extremely unlikely event. It is, therefore, more likely that there is some bug in the implementation of the experiment and you should not trust any of the results.

You run another experiment, where the Original and Variation are assigned an equal percentage of users. You compute the p-value, and it’s <0.002, so a very unlikely event.

How off could the metrics be? Do you really have to discard the results?

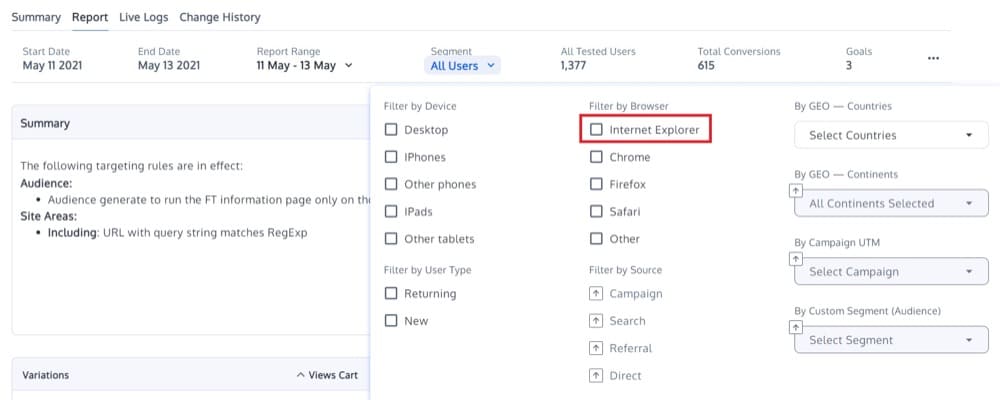

Using an experimentation platform like Convert Experiences, you can apply some post test segmentation to the results and find out that if you exclude Internet Explorer users, the SRM is gone.

In this case, the excluded users most probably use an old IE browser, and that segment was the cause of the SRM: a bot was not properly classified because of some changes in the Variation, which caused the ratio mismatch.

Without the segment, the remaining percentage of users is properly balanced and the metrics appear normal.

Had the SRM not been discovered, the whole experiment would have been considered a major failure.

But once the SRM was spotted, a small segment could be removed, and the experiment used for proper analysis.

In a similar scenario, you can safely ignore the excluded users and the experiment can be used.
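The segment-by-segment reasoning in this example can be sketched in Python. The browser counts below are hypothetical numbers chosen to mimic the situation described, and the helper names are assumptions for illustration. The idea is to recompute the SRM p-value while leaving out one segment at a time and see which exclusion makes the mismatch disappear.

```python
import math

def srm_p_value(control, treatment):
    """Two-group chi-square goodness-of-fit p-value for an expected 50/50 split."""
    expected = (control + treatment) / 2
    chi2 = ((control - expected) ** 2 + (treatment - expected) ** 2) / expected
    return math.erfc(math.sqrt(chi2 / 2))  # 1 degree of freedom

# Hypothetical (control, treatment) visitor counts per browser segment.
segments = {
    "Chrome": (10_050, 9_980),
    "Safari": (6_020, 6_070),
    "Internet Explorer": (1_600, 1_000),
}

def totals(excluding=None):
    """Sum control and treatment counts over all segments except `excluding`."""
    rows = [counts for name, counts in segments.items() if name != excluding]
    return sum(c for c, _ in rows), sum(t for _, t in rows)

print("all users:", srm_p_value(*totals()))
for name in segments:
    print(f"without {name}:", srm_p_value(*totals(excluding=name)))
```

With these made-up counts, the overall p-value is below 0.01, and only the run that leaves out Internet Explorer brings it back to a comfortable level.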

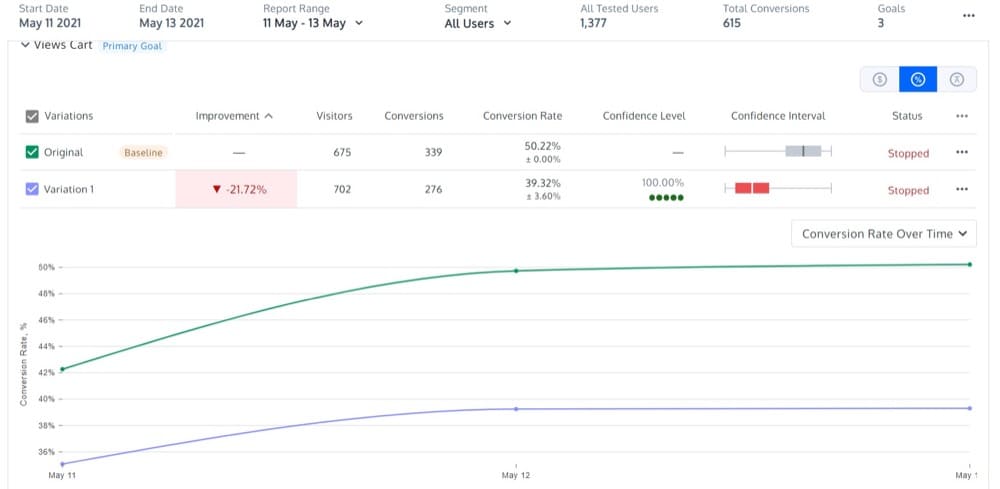

You run an experiment and you find out that there is SRM tagged on your test.

However, if you pay attention to your graphs, you will notice that conversion rate curves stay parallel and the calculated confidence is 99.99%. That pattern should provide you with enough certainty that the tests are valid.

In this case, you can safely ignore the SRM and continue to trust your data.

Where Should You Check if SRM Exists?

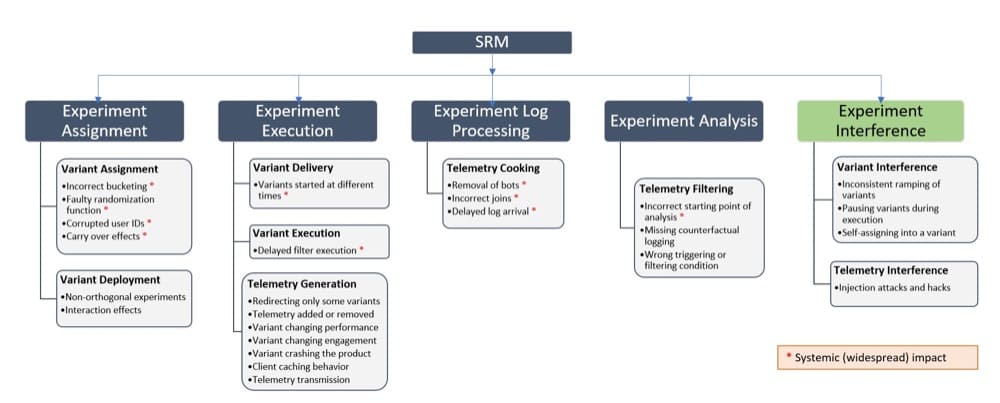

There are a few areas where SRM can occur. Let’s take a look at Lukas Vermeer’s taxonomy of causes:

- Experiment Assignment – There might be a case of incorrect bucketing (users being placed in incorrect clusters), a faulty randomization function, or corrupted user IDs.

- Experiment Execution – Variations may have begun at different times (causing discrepancies), or there may be filter execution delays (determining which groups are subjected to the experiment).

- Experiment Log Processing – Automatic bot filtering may remove real users, or information may be delayed in arriving in the logs.

- Experiment Analysis – Wrong triggering of the variation or starting it incorrectly.

- Experiment Interference – The experiment may be subject to attacks and hacks, or another ongoing experiment’s impacts may be interfering with the current experiment.

If you have an SRM and aren’t sure where to look for an answer, the taxonomy above is a valuable place to start.

And to make things clearer, we’re now going to give you a real-life example for each of these cases.

Experiment Assignment

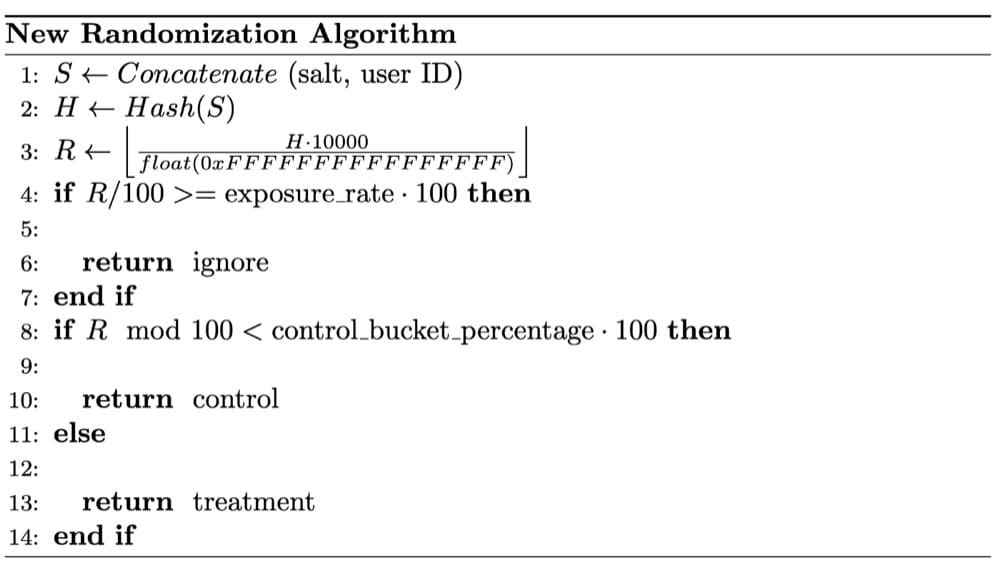

Here, one of the most interesting things to keep an eye on is the randomization function your A/B testing platform is using.

In the example below, data scientists at Wish discovered SRM issues on an A/A test and, after a long investigation, concluded that the SRM arose because their randomization was not completely random.

To achieve valid experiment findings, the randomization procedure is crucial.

A crucial assumption of the statistical tests used in A/B testing is the use of randomized samples. Between experiment buckets, randomization balances both observed and unobserved user attributes, establishing a causal relationship between the product feature under test and any outcome differences in trial findings.

PRO TIP: Convert has its own randomization algorithm that ensures even distribution between variations, so SRM cannot be caused by this. However, if you have implemented randomization with another tool, you can follow these steps to bucket visitors into variations.
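For readers who implement their own bucketing, one common approach is deterministic hashing of the visitor ID. The Python sketch below is an assumption-laden illustration of that general technique. It is not Convert's algorithm, and the function name, ID format, and weights are all hypothetical. Hashing the experiment ID together with the visitor ID keeps a visitor in the same variation on every visit and avoids correlated assignments across experiments.

```python
import hashlib
from collections import Counter

def assign_variation(visitor_id, experiment_id, weights):
    """Deterministically map a visitor to a variation name.

    `weights` maps variation name -> traffic share; shares should sum to 1.
    """
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    # First 8 bytes of SHA-256 as an integer, scaled into the range [0, 1].
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") / 2**64
    cumulative = 0.0
    for name, share in weights.items():
        cumulative += share
        if bucket < cumulative:
            return name
    return name  # guards against floating-point rounding at the upper edge

weights = {"original": 0.5, "variation_1": 0.5}
counts = Counter(
    assign_variation(f"visitor-{i}", "exp-42", weights) for i in range(10_000)
)
print(counts)
```

Running an SRM check on the resulting counts, ideally in an A/A test, is a quick way to confirm that a home-grown assignment function splits traffic as intended.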

Experiment Execution

When it comes to experiment execution, there are two main reasons that can cause SRM in your experiences.

1. The script’s not installed correctly on one of the Variations

Always check if your A/B testing platform’s script is installed correctly on the Original and the Variations.

Our customer support team recently solved a case where the Convert script was not added on one of the variations, causing an SRM on the test.

Make sure that you add the script on all pages where you want the experience to run, as shown below:

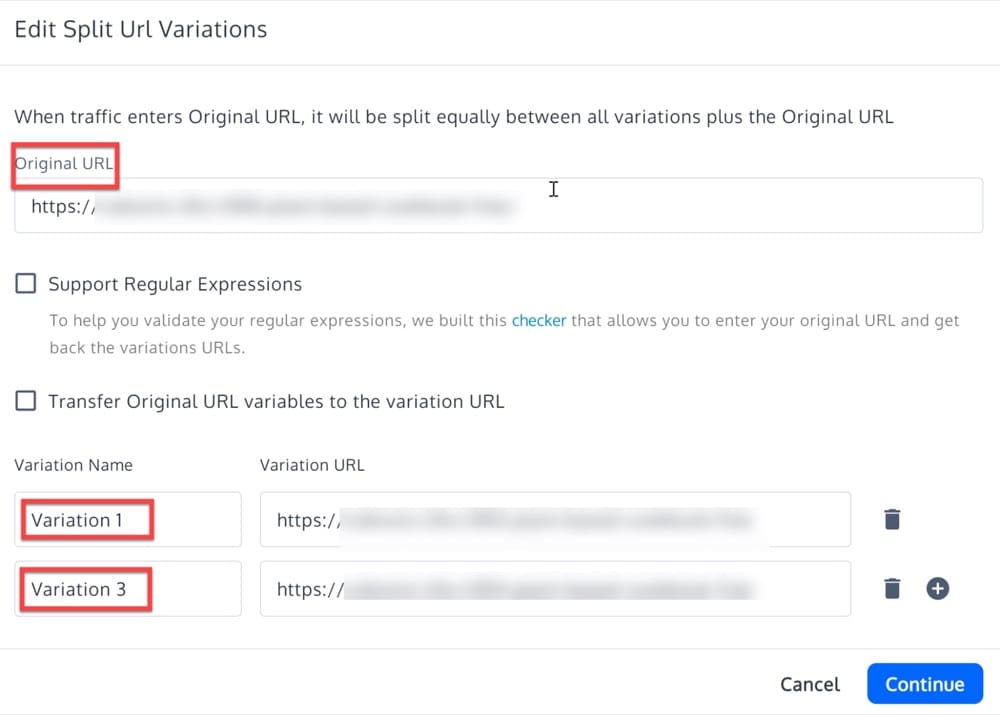

2. The page targeting is incorrectly configured

In this case, the SRM occurs because the targeting of the test was set up incorrectly.

With the wrong setup, some visitors are selected to be forwarded to the variation, but the redirect fails, most likely because the original URL expression does not match the URLs of all visitors who were bucketed into the test and redirected.

To avoid this, reconfigure the experiment variation URL expressions and rerun the test.

Here are two more scenarios showing you how to set up your page targeting with Convert Experiences to avoid SRM on Split URL tests.

Scenario 1: Only target the homepage (https://www.convert.com) with the Split URL and pass all query parameters the visitors might have

Here, in the Site Area, the Page URL needs to exactly match https://www.convert.com. In the exclude section, the Query String should contain v1=true so that you avoid repeated redirections (otherwise the conditions of the experiment would still match once you land on https://www.convert.com?v1=true, and the traffic distribution might end up uneven).

Then, when you define your variations, keep it like this:

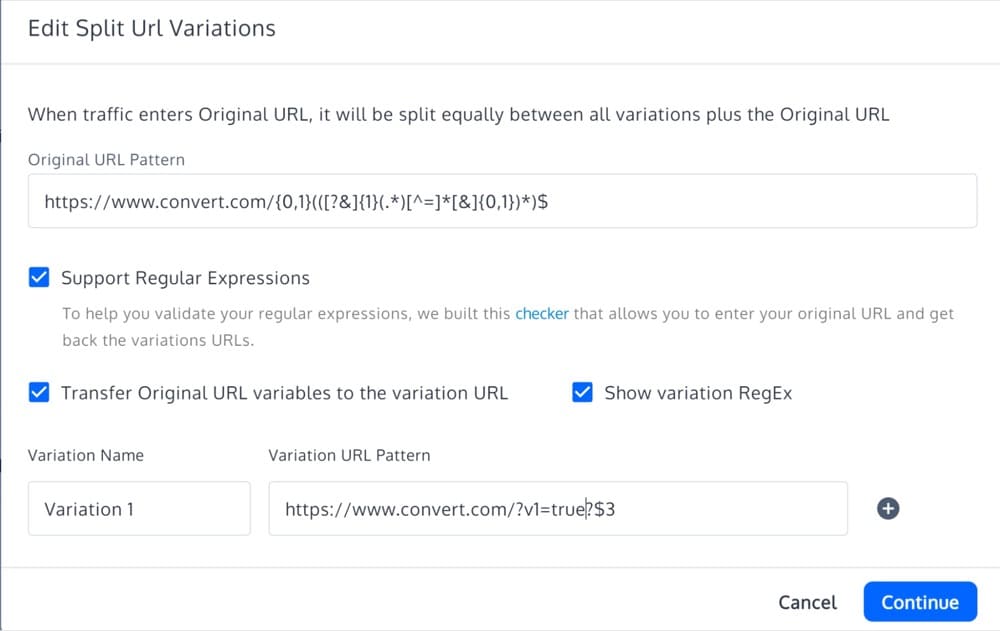

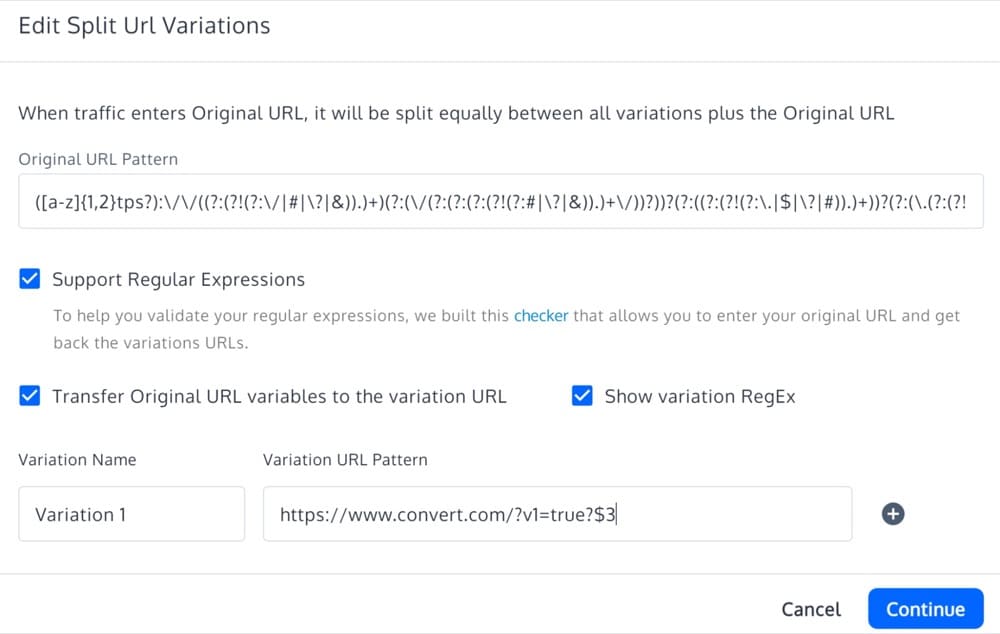
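The include and exclude logic of Scenario 1 can be modeled in a few lines of Python. This only illustrates the rule as described above and is not how Convert evaluates targeting internally. The function name is hypothetical, and the sketch assumes that the Page URL comparison ignores the query string and a trailing slash.

```python
from urllib.parse import urlsplit

TARGET_URL = "https://www.convert.com"

def in_site_area(url):
    """Return True if a visitor URL should enter the Split URL experiment."""
    parts = urlsplit(url)
    page = f"{parts.scheme}://{parts.netloc}{parts.path}".rstrip("/")
    if page != TARGET_URL:
        return False  # include rule: the page must exactly match the homepage
    # Exclude rule: visitors already redirected to the variation carry v1=true,
    # so they must not be evaluated (and bucketed) a second time.
    return "v1=true" not in parts.query

for url in (
    "https://www.convert.com",
    "https://www.convert.com/?utm_source=newsletter",
    "https://www.convert.com/?v1=true",
    "https://www.convert.com/pricing",
):
    print(url, "->", in_site_area(url))
```

Without the exclude rule, the third URL would match the experiment again after the redirect, which is the kind of loop that can skew the traffic distribution.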

Scenario 2: Target all pages, not just the homepage (https://www.convert.com), with the Split URL and pass query parameters

Here, you need to define your Site Area with a “Page URL” that contains https://www.convert.com. In the exclude section, the query should contain v1=true.

When defining the variations, use the regex recipe below to catch all pages:

Experiment Log Processing

Here, the main cause of SRM is bots that target your experience. You can contact us so we can check the additional logs we keep for any unusual patterns in the user agents.

For example, our support team assisted a client whose test had SRM.

In their case, when we filtered the report by Browser=Other, we saw an uneven split and SRM. But when we filtered the same report by Browser=Chrome+Safari, we detected neither an SRM nor an uneven distribution.

So, we checked a couple of events that had the Browser set to Other, and all of them showed a User Agent of “site24x7”. We knew immediately that this was some sort of monitoring software. Fortunately, it advertises itself with a distinct user agent. If it had been hidden behind a usual User Agent, it would’ve been impossible to find.

To solve the issue, we went ahead and added this User-Agent to the list of bots we exclude from traffic. Unfortunately, this change only affects data collected after the bot was added to the list, not past data, but at least the problem was found and fixed.
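If you have access to raw event logs, the same investigation can be approximated with a short script. The Python sketch below uses made-up log records, and the field names (`variation`, `user_agent`) and the marker list are assumptions for illustration. Only "site24x7" comes from the case above. It counts visitors per variation with and without traffic whose user agent matches a known bot marker.

```python
from collections import Counter

# Substrings identifying known bots. "site24x7" is the monitoring tool from
# the case above; the other markers are illustrative.
BOT_MARKERS = ("site24x7", "bot", "crawler", "spider")

def is_bot(user_agent):
    """Return True if the user agent contains any known bot marker."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)

def count_by_variation(events, exclude_bots=True):
    """Count visitors per variation, optionally dropping bot traffic."""
    return Counter(
        e["variation"]
        for e in events
        if not (exclude_bots and is_bot(e["user_agent"]))
    )

# Hypothetical log records.
events = (
    [{"variation": "original", "user_agent": "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"}] * 2
    + [{"variation": "variation_1", "user_agent": "Mozilla/5.0 (Macintosh) Safari/605.1.15"}] * 2
    + [{"variation": "original", "user_agent": "Site24x7"}] * 3
)

print(count_by_variation(events, exclude_bots=False))  # bots inflate "original"
print(count_by_variation(events))                      # balanced once bots are dropped
```

Comparing the two counts shows whether a single automated client is enough to explain the mismatch.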

Experiment Analysis

This category mainly affects experiences set with manual triggering.

This happens for example on Single Page Applications where you need to take care of the triggering yourself.

So, whenever you have to do that manually using code similar to the snippet below, pay close attention to potential SRMs on your test.

window._conv_q = window._conv_q || []; window._conv_q.push(["run","true"]);

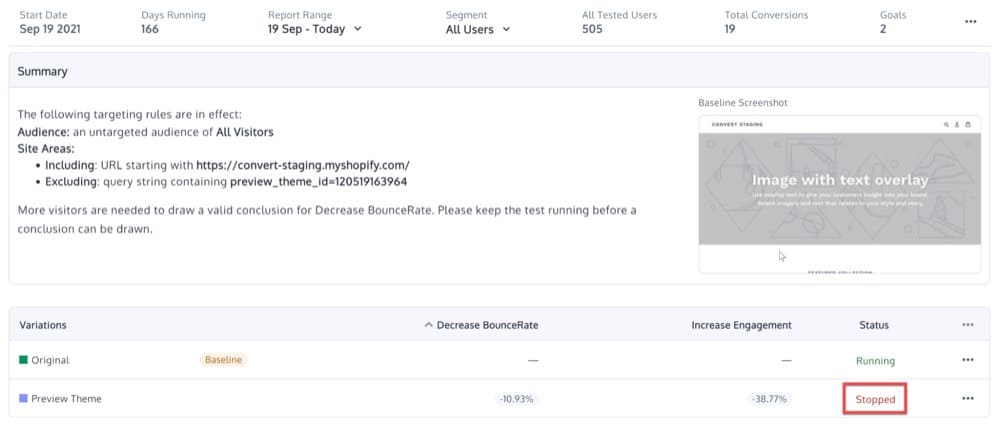

Experiment Interference

This refers to a user intervention where one of the variations is paused during the experience. Imagine you have a Split URL test that is running for some weeks and either by mistake or on purpose you pause the Variation and only leave the Original running.

Immediately after, and depending on your website traffic, you will notice SRM calculated for your test.

In this case, you can either exclude the date range when the variation was paused or reset the experience data.
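Excluding the paused period amounts to filtering visits by date before counting them. The Python sketch below shows the idea on hypothetical daily records. The field names and dates are assumptions, and in practice you would apply the equivalent date filter in your reporting tool.

```python
from collections import Counter
from datetime import date, timedelta

def count_outside_pause(events, pause_start, pause_end):
    """Count visitors per variation, skipping days in [pause_start, pause_end]."""
    return Counter(
        e["variation"]
        for e in events
        if not (pause_start <= e["day"] <= pause_end)
    )

# Hypothetical data: one visit per variation per day for 14 days, except that
# the variation was paused (and received nothing) on three days.
start = date(2024, 1, 1)
pause_start, pause_end = date(2024, 1, 8), date(2024, 1, 10)
events = []
for offset in range(14):
    day = start + timedelta(days=offset)
    events.append({"variation": "original", "day": day})
    if not (pause_start <= day <= pause_end):
        events.append({"variation": "variation_1", "day": day})

print(Counter(e["variation"] for e in events))               # skewed by the pause
print(count_outside_pause(events, pause_start, pause_end))   # pause excluded
```

Once the paused days are removed from both variations, the remaining counts can be put through an SRM check again.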

Non Experiment Reasons

If none of the above categories reveals the root cause of your SRM, we suggest you add error tracking software (like Sentry) to your website to identify deeper problems with your site.

A/B Testing Platforms that Support SRM Alerts

You may be wondering which A/B testing platforms support this SRM functionality and give you alerts without you having to calculate it for yourself.

We’ve done the research and compiled a list of tools.

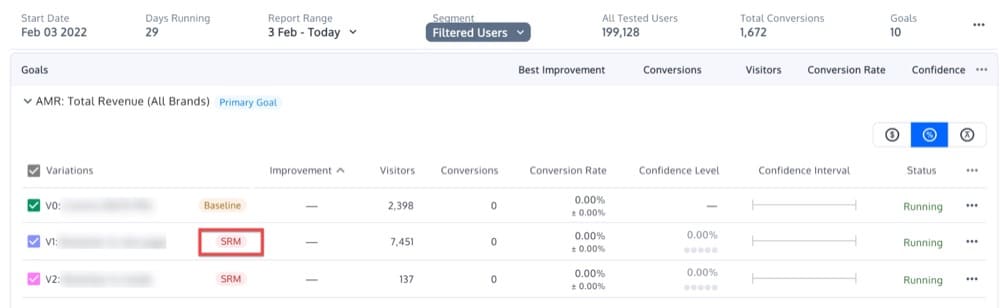

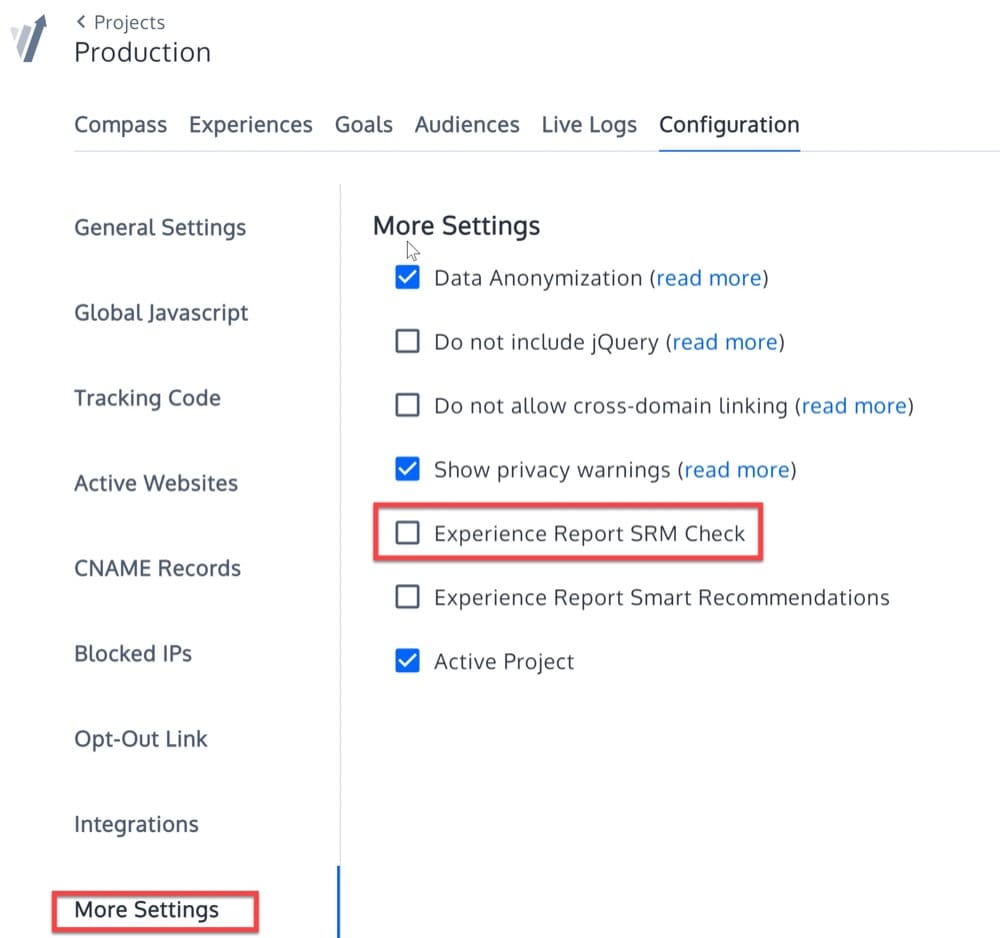

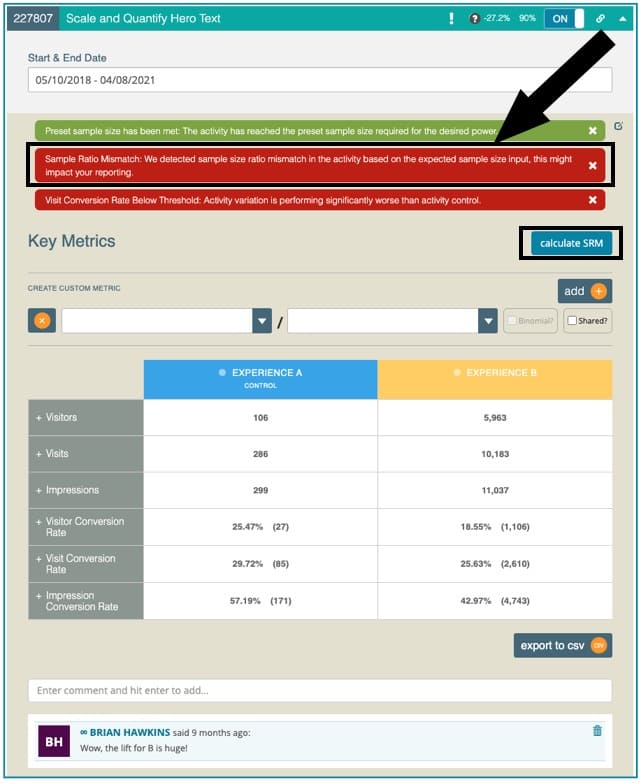

Convert Experiences

As of December 2021, we have introduced our own SRM method.

If you’re a user, you can enable SRM checks from Project Configuration > More Settings.

Then you’ll be able to see the SRM tags in the reports:

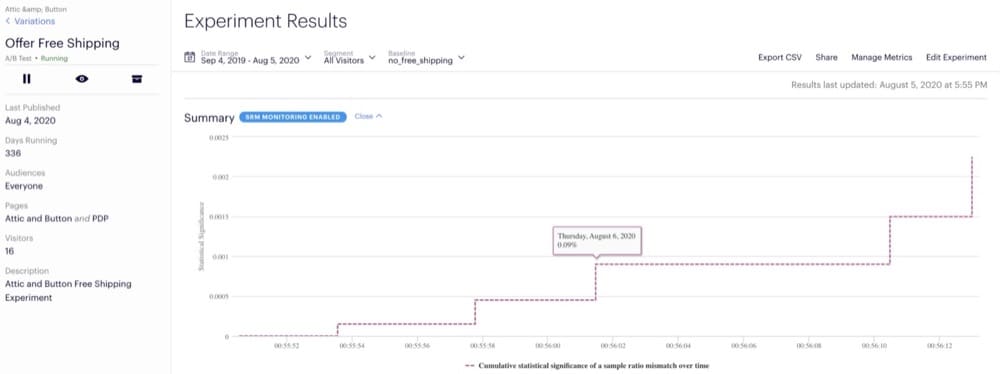

Optimizely

Optimizely open-sourced a sequential testing solution in September 2021 that anyone can implement to detect SRM.

Optimizely has turned the ssrm-test into a production-ready backend microservice that can run on all running experiments at the same time.

On Optimizely’s results page, you can set up alerts and get real-time results from the ssrm-test:

Michael Lindon, Optimizely Staff Statistician, says that SRM is a typical problem that occurs when tests are carried out poorly.

To run a product experiment, a substantial amount of infrastructure is needed, so there may be errors. For example, if website visitors are not consistently bucketed into an experiment variation and convert under both original and variation conditions, the data obtained for that user is not valid for evaluating the experiment’s impact.

The main concern is when SRM produces inaccurate data that might affect your metrics and go undetected.

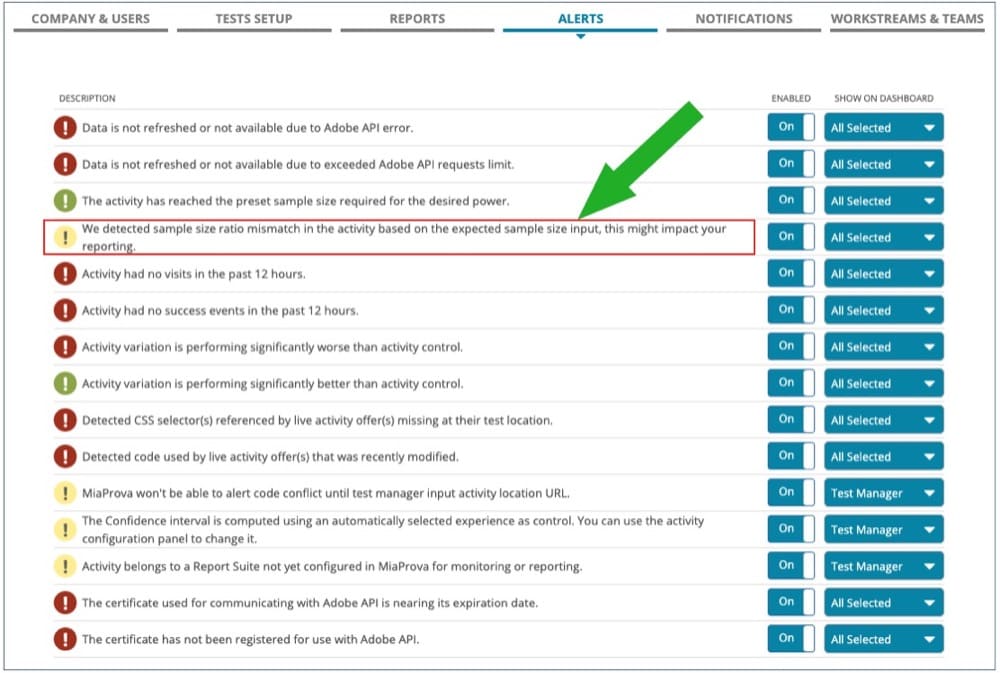

Adobe Target via MiaProva

In April 2021, Adobe Target partnered with MiaProva to provide SRM alerts on A/B activities.

These alerts notify MiaProva customers that use Adobe Target when a mismatch is detected. This approach automatically applies a Chi-Squared test to every live A/B test.

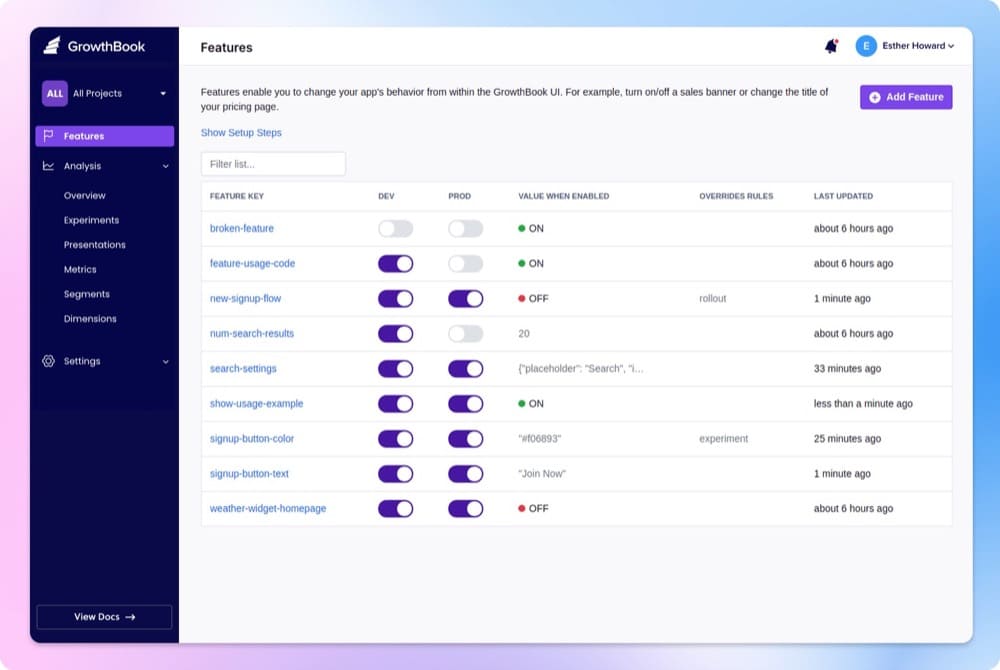

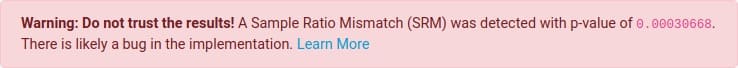

GrowthBook

GrowthBook is an open-source A/B testing platform with a Bayesian stats engine and automatic SRM checks for every experiment.

Every experiment looks for an SRM and warns users if one is identified.

When you expect a certain traffic split (e.g. 50/50), but instead see something drastically different (e.g. 40/60), you get a warning. This is only displayed if the p-value is less than 0.001, indicating that it is exceedingly unlikely to occur by coincidence.

The results of such a test should not be trusted since they are potentially deceptive, hence the warning. Instead, users should locate and correct the bug’s source before restarting the experiment.

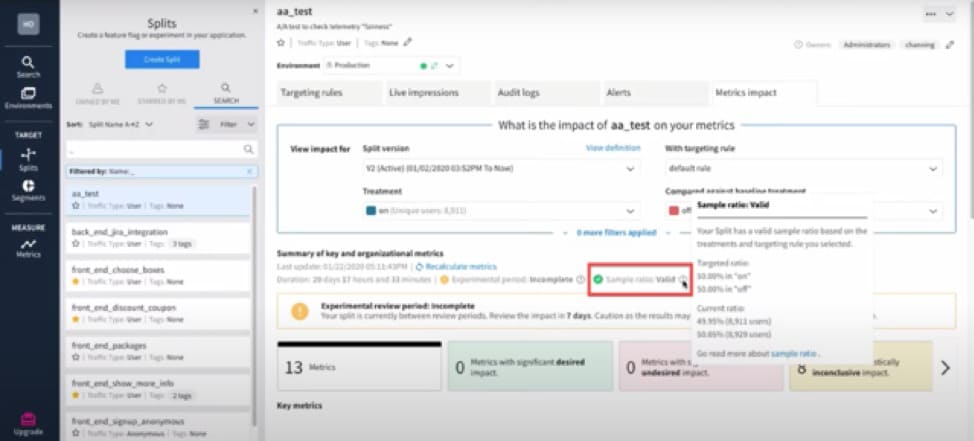

Split.io

Split is a feature delivery platform that powers feature flag management, software experimentation, and continuous delivery.

With each computation update, the Split platform checks the sample ratio to see if there is a substantial difference between the targeted and current sample ratios. This sample ratio check may be found beneath the summary of key and organization metrics, along with other important details such as duration and last updated.

Sample Ratio Mismatch Demystified

You might ask, how often is it “normal” to see an SRM?

Lukas Vermeer said it best. Even big tech firms observe a natural frequency of SRMs of 6% to 10% in their online controlled experiments.

Now, if the SRM repeats more frequently, that warrants a deeper investigation into the experiment design or the website.

Our team is always available to assist you if you are experiencing problems like those above! Click here to reach out to our team.