All About A/A Testing: Why and When Should You Run A/A Tests?

A/A testing allows you to test two identical pages against one another and can be extremely useful when setting up a new A/B testing tool.

A/A testing can be used to

- assess an A/B testing platform’s accuracy,

- determine whether your A/B testing platform is fully integrated with your internal analytics,

- identify possible technical issues with your A/B testing tool,

- provide a baseline conversion rate for a page or funnel,

- determine the appropriate sample size to use for your A/B testing, and

- benchmark the performance of your pages and funnels.

Convert provides both A/A and A/B testing capabilities, to ensure that you have everything you need to successfully design and develop a high converting website.

Read on to learn more about the significance of A/A testing and how to set up your first experience!

- What is A/A Testing?

- Why Run A/A Tests?

- Setting Up an A/A Experience

- How to Interpret the A/A Test Results?

- What Are the Challenges of A/A Testing?

- Convert Experiences and A/A Testing

- Do the Advantages of A/A Testing Outweigh the Disadvantages?

Perhaps something like this has happened to you before…

- You run an A/B test to see if your new CTA button and headline will increase conversions.

- Over the next month, you send the same amount of traffic to both your control and variation landing pages.

- Your software declares that your variation is the winner (with 99% confidence), so you stop.

- You then launch your “winning” design, but after several business cycles, you see that the 50% increase in your conversion rate has had little effect on your net income.

The most likely explanation is a false positive test result. Fortunately, there are various methods for dealing with incorrect tests.

One that you may have heard of is A/A testing.

What is A/A Testing?

Before we dive into A/A testing, let’s talk about A/B testing, so we can point out the differences.

In a typical A/B experience, traffic is split between two or more alternative variations.

One variation is usually designated as the “control” or “original”. All other variations of the experience are compared to the control, to determine which one produces the greatest lift in a given metric.

A/A testing, on the other hand, requires that traffic be allocated to two identical variations, usually using a 50/50 split.

In a normal A/B test, the goal is to find a higher conversion rate, whereas, in an A/A test, the purpose is usually to confirm that the two variations perform the same.

In an A/A test, traffic is randomly split, with both groups shown the same page.

Then, the reported conversion rates, click through rates, and associated statistics for each group are logged, in hopes of learning something.

A/A test = 2 identical pages tested against one another

Now, let’s take a look at some examples of where A/A experiences can be used, to determine whether they will be useful to you.

Why Run A/A Tests?

Running an A/A test may be particularly effective throughout various stages of the web design and development process, such as:

- When you’ve finished installing a new A/B testing tool,

- When your current A/B testing tool’s setup has been upgraded or changed,

- When you’re creating a new website or app,

- When you notice discrepancies between the data reports of your A/B testing and other analytics tools you use.

Let’s take a deeper dive into each of these use cases.

Check the A/B Testing Platform’s Accuracy

An A/A experience can be launched by either a company looking to acquire an A/B testing platform, or by a company looking to try out a new testing software (to confirm it is set up properly).

In an A/A experience, we compare two completely identical versions of the same page, with the goal of having similar conversion values.

Because there is no difference between the control and the variation, the expected outcome is an inconclusive result.

Even so, a “winner” is sometimes declared on two identical copies.

When this occurs, it is critical to assess the A/B testing platform, as the tool may have been misconfigured or may be ineffective.

As a next step, you should:

- Check that you installed the A/B tracking code correctly

- Check your Site Area

- Check your Audiences

- Check your Goals

- Contact the A/B testing support team to figure out if it is something that can be solved before abandoning your platform.

Hopefully, the issue is one of the above. If you are unable to figure out the problem, this likely means that the A/A test is conclusive and your A/B testing platform is inaccurate.

Determine the Extent of Integration with Your Internal Analytics

When checking the accuracy of an A/B testing platform, you can use an A/A test to assess whether the platform is fully integrated with your analytics tool.

Whether you are using Google Analytics, Heap Analytics, Adobe Analytics, Plausible, Matomo, or any other, you can compare the A/A test results with your internal analytics tool to determine whether the integration worked as expected.

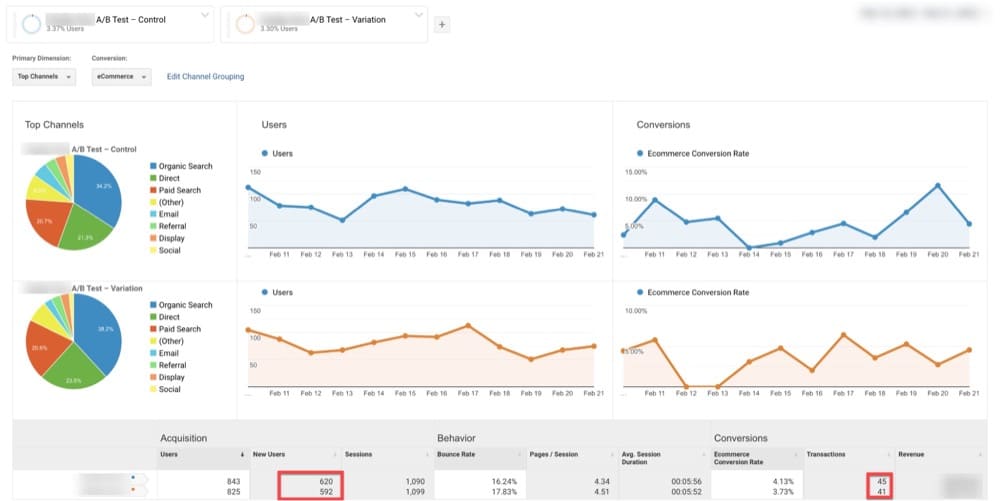

In the example below, Google Analytics (GA) identified 620 visitors on the Original and 592 on the Variation (identical page to Original).

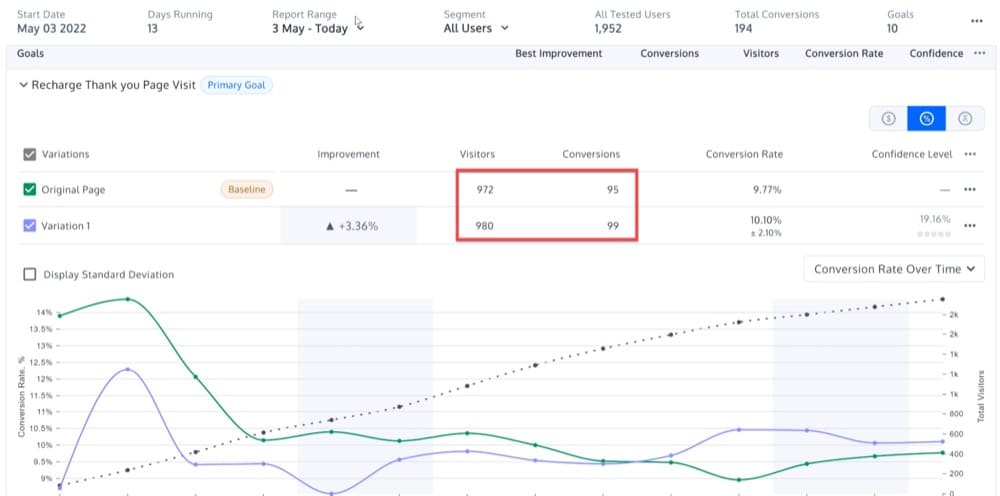

For the same date range, Convert revealed 972 visitors on the Original and 980 on the Variation (identical page to Original).

This could be a sign that the integration between the two platforms is not working as expected.
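As a rough illustration, the gap between the two tools can be quantified with a few lines of Python. This is only a sketch: the counts are the ones quoted above, while the `relative_gap` helper and the 10% `TOLERANCE` are assumptions made for this example, not thresholds recommended by Convert or Google Analytics.

```python
def relative_gap(count_a: int, count_b: int) -> float:
    """Relative difference between two counts, as a fraction of the larger one."""
    larger = max(count_a, count_b)
    if larger == 0:
        return 0.0
    return abs(count_a - count_b) / larger

# Visitor counts quoted in the example above.
ga = {"original": 620, "variation": 592}
convert = {"original": 972, "variation": 980}

# Gap per variation, and for the experience as a whole.
gaps = {name: relative_gap(ga[name], convert[name]) for name in ga}
total_gap = relative_gap(sum(ga.values()), sum(convert.values()))

TOLERANCE = 0.10  # hypothetical threshold; choose one that suits your own setup
needs_review = total_gap > TOLERANCE

print(gaps, round(total_gap, 3), needs_review)
```

Some difference between tools is normal, because they count visitors in different ways. A gap this large is what should prompt a closer look at the integration.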

Identify Possible Technical Issues

You can also use an A/A test to identify possible technical issues.

A/B testing tools differ in how they implement statistics, randomization, and tracking, and these differences can produce noticeably different results, especially when a tool is pushed to its limits.

This may appear to be an anomaly, but it could also suggest a more serious underlying issue with one of the following:

- Math and statistical formulas

- Randomization algorithms

- Browser cookies

You can use A/A experiences to reveal the above problems.

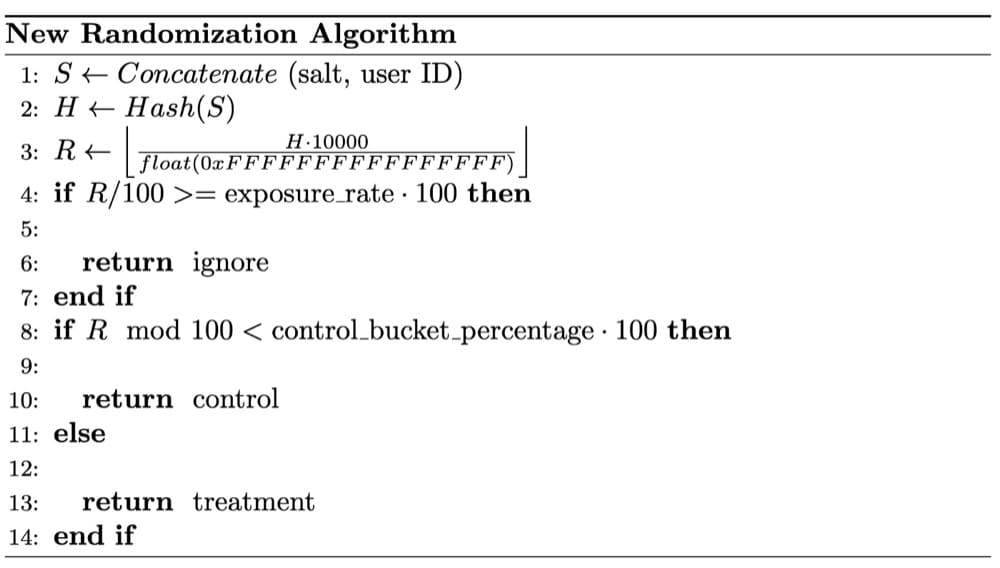

In the case below, Wish data scientists noticed a sample ratio mismatch (SRM) on an A/A test, meaning that the observed traffic split did not match the configured split. After a thorough examination, it was determined that the SRM was caused by their randomization not being completely random.

The randomization technique is critical for reliable experiment results.

The use of randomized samples is an essential assumption of the statistical tests employed in A/B testing.

Randomization balances both observed and unobserved user factors between experiment buckets. It establishes a causal relationship between the product feature being tested and any changes in trial results.
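To make the idea concrete, here is a minimal sketch of one common way to randomize visitors: hashing a visitor ID together with an experiment ID. It is an illustration only, not the method used by Wish or by Convert. The function name `assign_bucket`, the bucket labels, and the ID formats are all assumptions.

```python
import hashlib
from collections import Counter

def assign_bucket(user_id: str, experiment_id: str, buckets=("A1", "A2")) -> str:
    """Deterministically map a user to a bucket using a salted hash."""
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a bucket index.
    index = int.from_bytes(digest[:8], "big") % len(buckets)
    return buckets[index]

# Check how evenly 20,000 made-up user IDs are spread over the two buckets.
counts = Counter(assign_bucket(f"user-{i}", "aa-homepage") for i in range(20_000))
share_a1 = counts["A1"] / sum(counts.values())
print(counts, round(share_a1, 3))
```

Salting the hash with the experiment ID keeps a visitor's bucket stable within one experience while avoiding the same visitors always landing together across experiences.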

Provide the Baseline Conversion Rate for Any Page or Funnel

If you want to improve any number, you must first grasp what its baseline looks like. This could be your speed, weight, or running time.

Similarly, before you perform any A/B test, you must first determine the conversion rate against which you will compare the results. This is your baseline conversion rate.

You’ve probably heard of increased revenue coming out of a single experience, but this can be misleading. A single experience will not tell you if your website conversion has improved.

Knowing your baseline conversion rate matters because, if you can’t quantify the uplift of every experience, you will need to compare expected and achieved conversions on a regular basis.

With some luck, every experience that is considered a “win” will help your conversions to exceed expectations.

And if you do this often enough, your conversions will only continue to improve!

The A/A test is what will help you to achieve that.

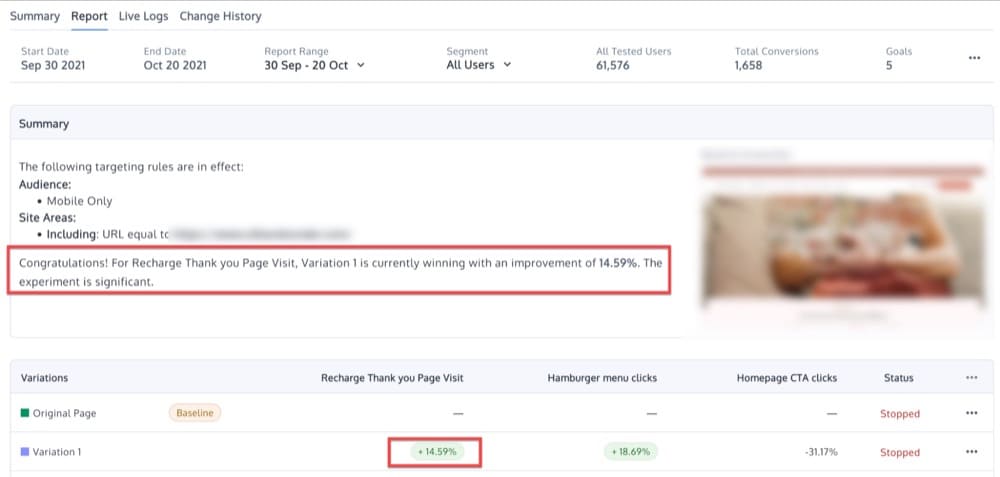

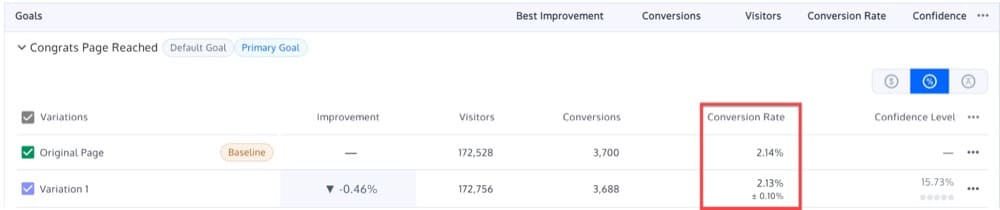

Let’s say you are running an A/A test on one of your landing pages, with Original A and Variation B providing almost identical results: 2.14% and 2.13%.

As a result, the baseline conversion rate can be set at 2.13-2.14%.

With this in mind, you can conduct future A/B tests, with the goal of exceeding this benchmark.

For example, if you run an A/B test on a new version of the landing page and it converts at 2.15%, that difference from the baseline is so small that it is very unlikely to be statistically significant at any realistic sample size.
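To see why a 2.15% result against a 2.14% baseline is usually inconclusive, here is a sketch of a standard pooled two-proportion z-test. The sample size of 10,000 visitors per variation is an assumption made for this example, since the article does not state one, and the test is not necessarily the one your A/B testing tool uses.

```python
import math

def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))

# 2.14% vs 2.15% with a hypothetical 10,000 visitors per variation.
p_small = two_proportion_p_value(214, 10_000, 215, 10_000)
# A much larger hypothetical difference (2.14% vs 2.90%) for comparison.
p_large = two_proportion_p_value(214, 10_000, 290, 10_000)
print(round(p_small, 3), round(p_large, 5))
```

A p-value close to 1 means the observed difference is entirely consistent with random noise around the baseline.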

Find the Necessary Sample Size

Before running an A/B experience, double-check your sample sizes, just as you would before going on a road trip.

You won’t observe the experience effect if there aren’t enough samples (users). On the other hand, if you have too many samples, you risk slowing your team’s progress, by continuously exposing people to a bad experience.

Ideally, you should never begin an experience without first determining how many samples you will gather.

To understand why, consider the following:

Let’s say you have a coin and your hypothesis is that it has a 50/50 chance of landing heads or tails. To prove this, you toss it a hundred times.

But let’s say you got ten tails on the first ten tosses and decided to stop the experiment there.

The result might appear statistically significant, tempting you to reject the null hypothesis (that the coin is fair), but you terminated the experiment prematurely. You have no idea how long the experiment should have taken to begin with.

If you do not estimate the sample size, you also may not be able to determine how long you’ll conduct the experience.

So how do we approach this?

A/A testing can assist you in figuring out how large of a sample size you’ll need from your website visitors.

Perhaps, your Monday morning visitors are statistically completely different from your Saturday night visitors. And maybe, your holiday shoppers are statistically different from those who shop during the non-holiday season.

Your desktop customers may differ statistically from your mobile customers. And, your customers who come via sponsored advertisements are not the same as those who come from word-of-mouth referrals.

When viewing your results, within categories like devices and browsers, you’ll be amazed at the trends you’ll uncover with the correct sample size.

Of course, if your sample size is too small, the results might not be reliable. You may miss a few segments, which could have an impact on your experience results.

A higher sample size increases the likelihood of including all segments that influence the test.

By running an A/A test, you’ll be able to determine which sample size allows for ideal equality between your identical variations.

In short, an A/A test helps you to determine the appropriate sample size that can then be used for future A/B testing.
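One common way to turn the baseline measured in an A/A test into a sample size is a standard power calculation for two proportions. The sketch below assumes the 2.14% baseline from the earlier example, a 5% significance level, and 80% power. The function name and the 10% and 20% relative lifts are illustrative assumptions, and your testing tool may use a different formula.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variation to detect a relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

n_10 = sample_size_per_variation(0.0214, 0.10)  # detect a 10% relative lift
n_20 = sample_size_per_variation(0.0214, 0.20)  # detect a 20% relative lift
print(n_10, n_20)
```

The smaller the lift you want to detect, the more visitors you need, which is why a reliable baseline is worth measuring first.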

Benchmark the Performance of Your Pages and Funnels

How many visitors come to your homepage, cart page, product pages, and other pages?

You are not concerned with whether or not you will find a winner when you do this. Rather, you are searching for larger patterns for a certain page.

These experiences can help you answer questions such as:

- What is the home page’s macro conversion rate?

- What is the breakdown of that conversion rate by visitor segment?

- What is the breakdown of that conversion rate by device segment?

A/A experiences provide you with a baseline against which you can compare fresh A/B experiences for any portion of your website.

One could argue that you can receive the same information through the website’s analytics.

But, this is both true and untrue.

The A/B testing tool is primarily used to declare a winner (while sending test data to Google Analytics or performing other calculations), so you’ll still want to observe website metrics when it is running.

Setting Up an A/A Experience

A/A experiences are a very important tool for conversion rate optimization.

However, the challenge with an A/A experience is deciding which page to use when conducting the experience.

Make sure that the page you choose for your A/A experience page has these two qualities:

- A high volume of traffic. The more people that visit a page, the sooner you’ll notice alignment between the variations.

- Visitors have the ability to buy or sign up. You’ll want to fine-tune your A/B testing solution all the way to the finish line.

These requirements are the reason we often conduct A/A tests on the homepage of a website.

In the next section, I’ll explain in more detail how to create an A/A test campaign, but, in a nutshell, here’s how to set up an A/A test on the homepage of a website:

- Make two identical versions of the same page: a control and a variation. After you’ve finished creating your variations, choose your audiences with identical sample sizes.

- Determine your KPI. A KPI is a metric that measures performance over time. For example, your KPI could be the number of visitors who click on a call-to-action.

- Split your audience evenly and randomly using your testing tool, sending one group to the control and the other to the variation. Run the experience until both the control and the variation reach a certain number of visits.

- Keep track of both groups’ KPIs. Because both groups are exposed to the same content, they should act similarly.

- Connect your A/B testing tool to your analytics software. This will allow you to double-check that your data is being collected accurately in your analytics program.

How to Interpret the A/A Test Results?

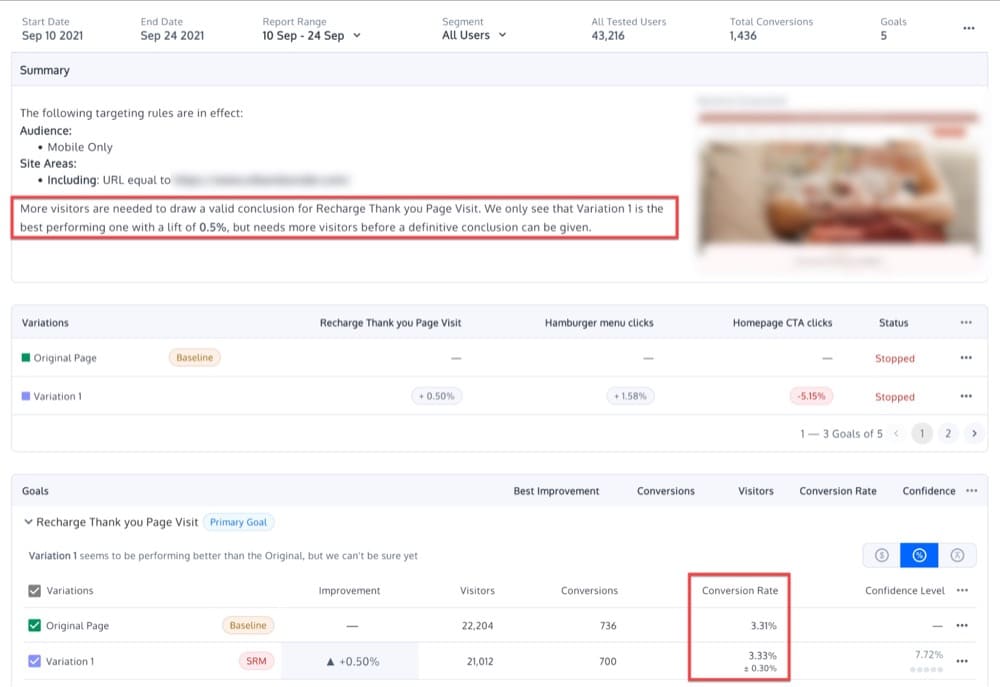

We Expect Inconclusive Results on an A/A Experience

While seasonality is unlikely to alter the results of an A/A test, one of the aims is to detect unexpected outcomes. For this reason, we recommend running the test for at least a week before reviewing the outcome.

At the end of one week, you should notice the following behavior when you examine the results of your A/A test:

- Over time, your statistical significance will settle around a given value. 10% of the time, the statistical significance will settle above 90%.

- As more data is collected, the confidence intervals for your experiment will shrink around zero, ruling out all but the smallest non-zero differences.

- The original and variation may perform differently at various points during the test results, but neither should be officially labeled a statistically significant winner.
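The shrinking confidence interval mentioned in the list above can be sketched with a simple normal-approximation interval for the difference between two conversion rates. The 2% conversion rate and the three sample sizes are made-up values for illustration, and the interval your tool reports may be computed differently.

```python
import math

def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             z: float = 1.96) -> tuple:
    """Approximate 95% confidence interval for (rate_b - rate_a)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se

# Two identical variations converting at a hypothetical 2%.
intervals = {}
for n in (1_000, 10_000, 100_000):
    conversions = round(0.02 * n)
    intervals[n] = diff_confidence_interval(conversions, n, conversions, n)

widths = [high - low for low, high in intervals.values()]
for n, (low, high) in intervals.items():
    print(f"n={n}: [{low:+.4f}, {high:+.4f}]")
```

With every tenfold increase in traffic the interval narrows around zero, so large differences between the two identical variations become increasingly implausible.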

Because there should be no difference between the variations, you should expect to see only modest differences and no statistically significant results. Perhaps, you’ll see something along these lines:

What Does It Mean If You Get Non-Identical Variations?

If there is a considerable difference between the two identical variations in an A/A experience, it could mean your A/B testing software was not correctly implemented or that the testing tool is inefficient.

However, it’s also possible that the experience was not conducted properly, or that the results are due to random variance. This kind of sampling error occurs naturally when a sample is measured, as opposed to measuring all of the visitors.

For example, at a 95% confidence level, roughly one in every 20 A/A tests will report a winner purely because of sampling error, not because of any meaningful difference in performance between the two variations.

Another reason why a properly executed A/A experience might not validate the identity of variations is because of a target audience’s heterogeneity.

For example, let’s say we run an A/A experience on a group of women, with varied conversion rates for women of different ages.

Even if we run a test properly, using an accurate A/B testing tool, it may still reveal a significant difference between two identical variations. Why? In this example, 50% of visitors may be between the ages of 20 and 90, while the other 50% may range from 20 to 50. Rather than an error of the platform, the incongruent result is simply a sign that the two audiences are very different.

Finally, another common mistake, when running any kind of test, including an A/A test, is to keep checking the results and prematurely end the test once statistical significance is detected.

This practice of declaring a winning variation too soon is called “data peeking” and it can lead to invalid results.

Data peeking in an A/A test can lead analysts to see a lift in one variation, when the two are, in fact, identical.

To avoid this, you should decide on the sample size you want to use ahead of time. Make this decision based on:

- Minimal effect size: the minimum lift below which an effect is not meaningful to your organization

- Power

- Significance levels that you consider acceptable

The expectation for an A/A test is then that you see no statistically significant result once the planned sample size has been attained.
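The effect of data peeking can be demonstrated with a small simulation. The sketch below runs 300 simulated A/A tests in which both variations share the same true conversion rate, and compares how often a "winner" is flagged at any of ten interim looks versus only at the planned end. All parameters (10% conversion rate, 2,000 visitors per variation, a look every 200 visitors, the random seed) are assumptions chosen for illustration.

```python
import math
import random

def is_significant(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Pooled two-proportion z-test, two-sided."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return False
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2)) < alpha

def simulate_aa(rng, rate=0.10, visitors=2_000, look_every=200):
    """Return (flagged at any look, flagged at the final look) for one A/A test."""
    conv_a = conv_b = 0
    flagged_at_any_look = False
    for n in range(1, visitors + 1):
        # Both variations are identical, so they share the same true rate.
        conv_a += rng.random() < rate
        conv_b += rng.random() < rate
        if n % look_every == 0 and is_significant(conv_a, n, conv_b, n):
            flagged_at_any_look = True
    return flagged_at_any_look, is_significant(conv_a, visitors, conv_b, visitors)

rng = random.Random(42)
runs = [simulate_aa(rng) for _ in range(300)]
peeking_rate = sum(any_look for any_look, _ in runs) / len(runs)
fixed_rate = sum(final for _, final in runs) / len(runs)
print(f"stopping at first significant look: {peeking_rate:.1%}")
print(f"evaluating only at the planned end: {fixed_rate:.1%}")
```

Because the final look is one of the ten looks, the peeking rate can never be lower than the fixed-sample rate, and in practice it is typically several times higher.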

What Are the Challenges of A/A Testing?

Despite the many benefits an A/A test can bring to your experimentation strategy, there are two major drawbacks with A/A testing:

- An A/A experimental setup contains an element of unpredictability.

- A high sample size is necessary.

Let’s take a look at each of these challenges separately.

Randomness

As previously stated, one of the primary reasons for performing an A/A test is to evaluate the accuracy of a testing tool.

But, let’s say you discover a difference between your control and variation conversions.

The issue with A/A testing is that there is always some element of randomness involved.

In some cases, statistical significance is achieved solely by chance. This means that the difference in conversion rates between two variations is probabilistic rather than absolute.

Large Sample Size

When comparing similar variations, a large sample size is required to determine whether one is favored over its identical counterpart.

This requires an extensive amount of time.

Running A/A tests can eat into ‘real’ testing time.

The trick of a large scale optimisation programme, is to reduce the resource cost to opportunity ratio, to ensure velocity of testing throughput and what you learn, by completely removing wastage, stupidity and inefficiency from the process.

Running experiments on your site is a bit like running a busy Airline at a major International Airport—you have limited take-off slots and you need to make sure you use them effectively.

Craig Sullivan for CXL

Convert Experiences and A/A Testing

A/A testing frequently comes up in more “advanced” support requests.

The following suggestions from Convert support agents are based on dozens of resolved cases:

- To test your A/B testing platform, conduct an A/A experience first. If the difference between the two is statistically significant at the chosen level, your platform might be broken.

- Perform an A/A/B or A/A/B/B test (more on this below) and discard the findings if the two A variations or two B variations produce statistically significant differences at the chosen level.

- Set up many A/A tests. If more tests than expected show statistically significant differences, your platform is broken.
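For the last suggestion, "more tests than expected" can be made precise with a binomial tail probability: if each A/A test has a 5% chance of a false positive, how likely is it to see at least a given number of significant results by chance alone? The sketch below assumes independent tests, a 5% significance level, and made-up counts (50 and 80 significant results out of 1,000 tests).

```python
import math

def binomial_tail(n_tests: int, n_significant: int, alpha: float = 0.05) -> float:
    """P(X >= n_significant) when X ~ Binomial(n_tests, alpha)."""
    log_a, log_b = math.log(alpha), math.log(1 - alpha)
    total = 0.0
    for k in range(n_significant, n_tests + 1):
        # Work in log space so large binomial coefficients do not overflow.
        log_pmf = (math.lgamma(n_tests + 1) - math.lgamma(k + 1)
                   - math.lgamma(n_tests - k + 1)
                   + k * log_a + (n_tests - k) * log_b)
        total += math.exp(log_pmf)
    return min(total, 1.0)

expected = binomial_tail(1000, 50)    # about what chance alone would produce
suspicious = binomial_tail(1000, 80)  # far more significant results than expected
print(expected, suspicious)
```

A very small tail probability suggests that the excess of significant results is unlikely to be a coincidence and that the setup deserves investigation.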

How to Set Up A/A Tests Within Convert Experiences?

Now let’s look at how to set up a few different types of A/A tests (yes, plural) using Convert Experiences.

The Pure A/A Experience

The most typical A/A setup is a 50/50 split between two pages that are identical.

The goal is to validate the experience configuration by ensuring that each variation has roughly the same performance.

You’re checking the same thing against itself to discover if the data contains noise rather than useful information.

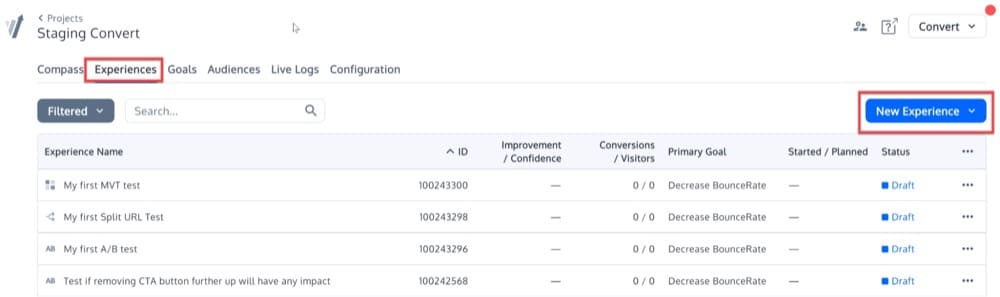

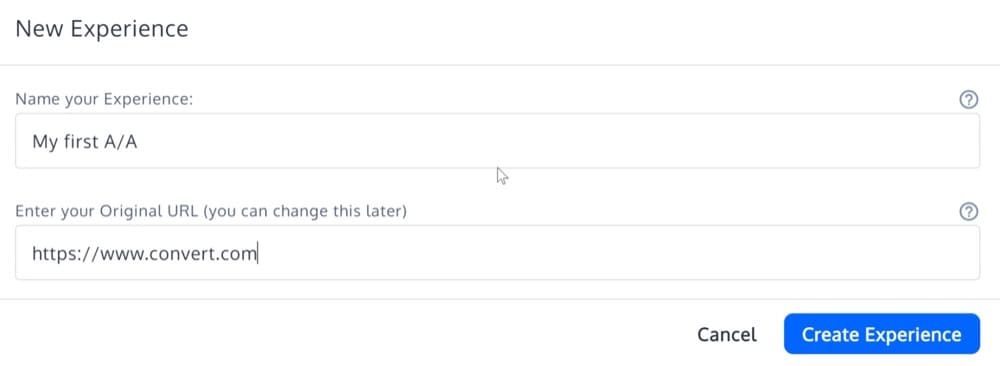

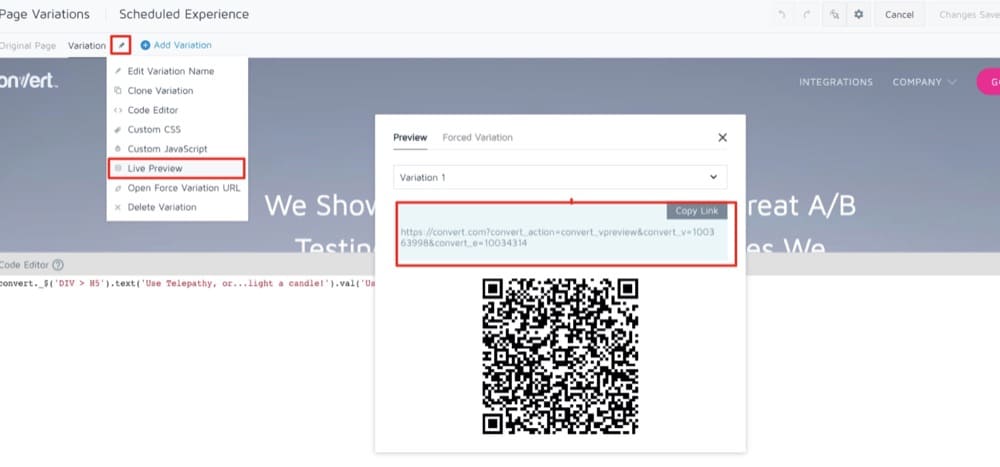

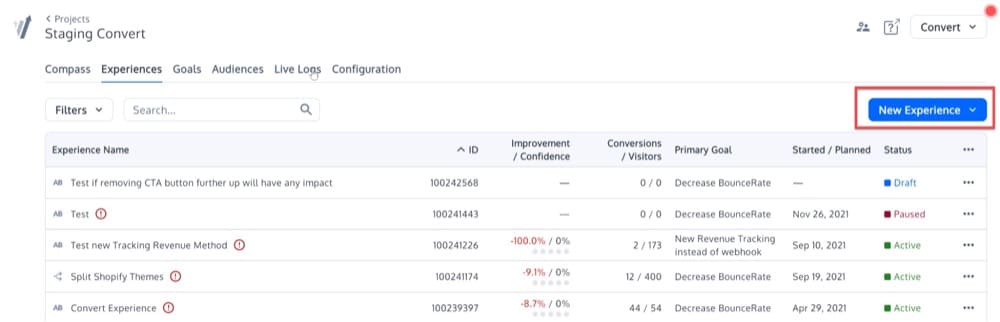

To set up this simple A/A experience, click on the Experiences menu. Then, click on the “New Experience” button on the upper right side.

Fill out the details of the “Experience Creation Wizard”, and select the “A/A Experience” experience type.

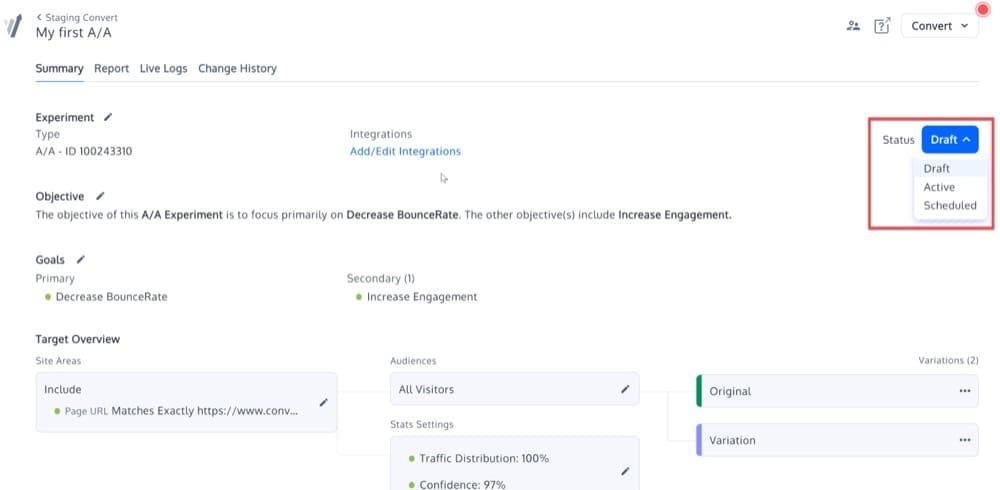

Now, your A/A Experience should be created. It will be identical to other types of experiments in the platform, aside from having no options to “Edit Variations”.

Activate the experience by changing its status:

The Calibrated A/A/B or A/A/B/B Experience

The idea behind this calibrated A/A/B or A/A/B/B test is that the replicated A or B variations provide a measure of the A/B test’s accuracy.

If the difference between A and A or B and B is statistically significant, the test is considered invalid and the results are discarded.

In order to set up such a test, you will need to start an A/B experience, rather than an A/A.

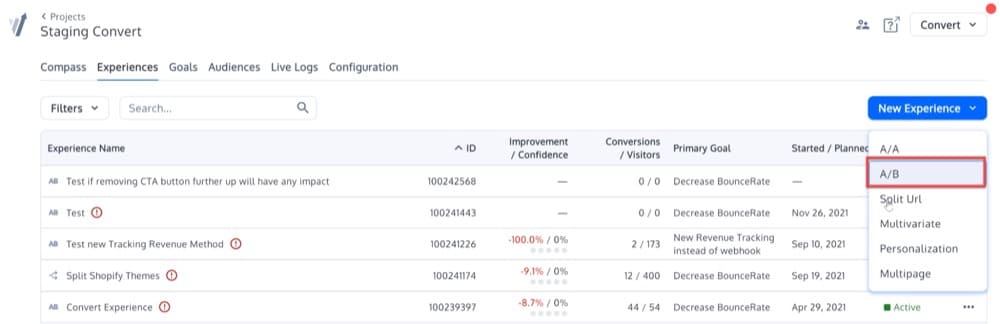

Click on the “New Experience” button on the right of the screen, to begin creating a new experience.

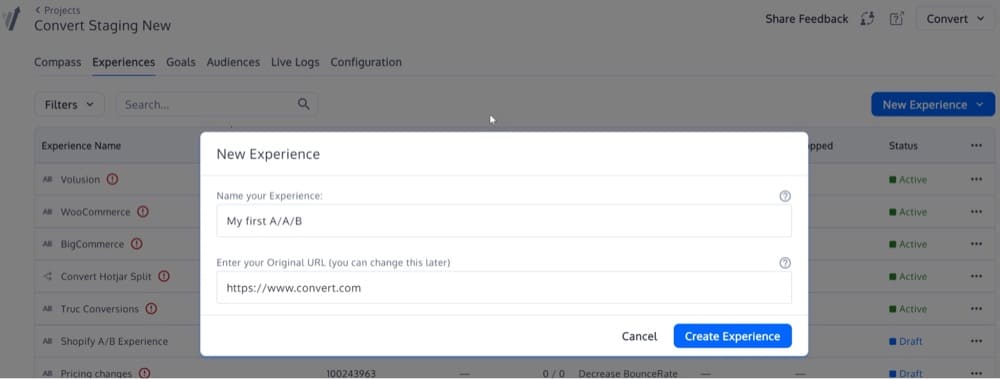

Once you click that button, you will see this popup menu. Select the option A/B:

Then, enter your URL in the second box.

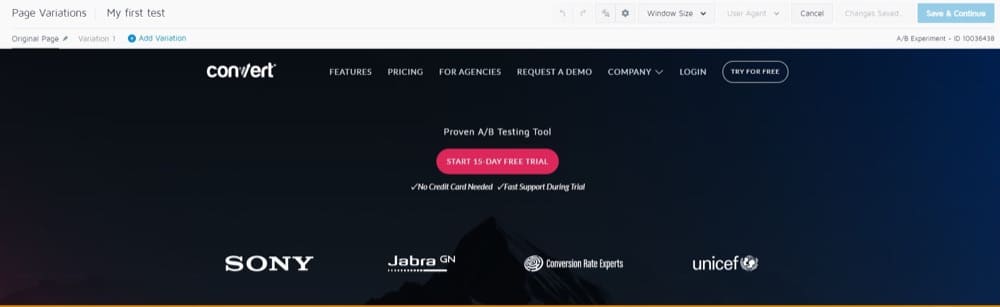

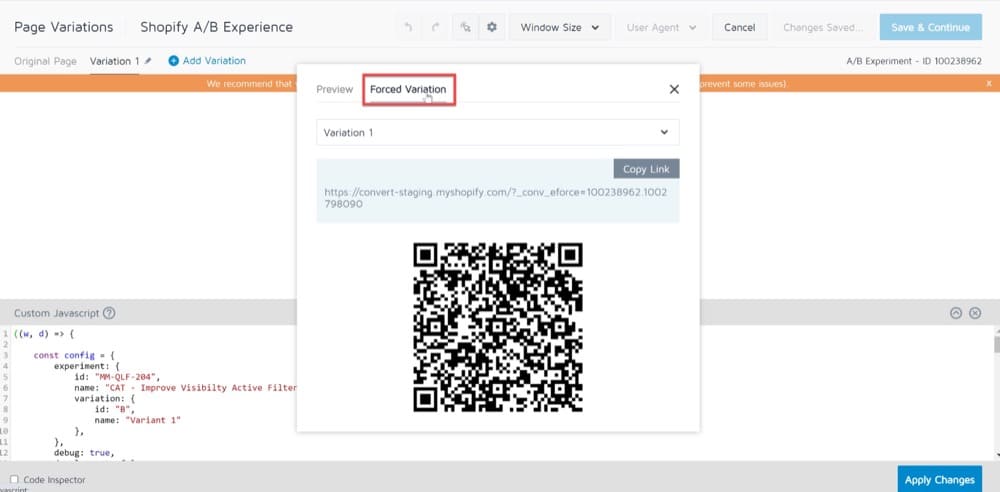

You will be taken to the Visual Editor that displays the URL you chose and a toolbar at the top:

In the Page Variations section at the top left, you will notice that “Variation 1” is selected by default.

This means that any changes we make to this version of the URL will not affect the original URL.

This will result in a classic A/B test, in which the “A” version is the original page and the “B” version is Variation 1.

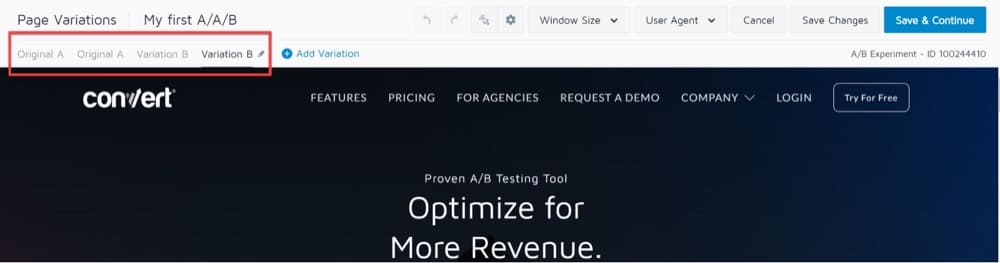

For the A/A/B or A/A/B/B, you’ll need to add another variation A and another variation B that are identical to variation A and variation B, respectively.

It should look like this:

Click the “Save & Continue” button and you’re all done!

Run Many A/A Experiences

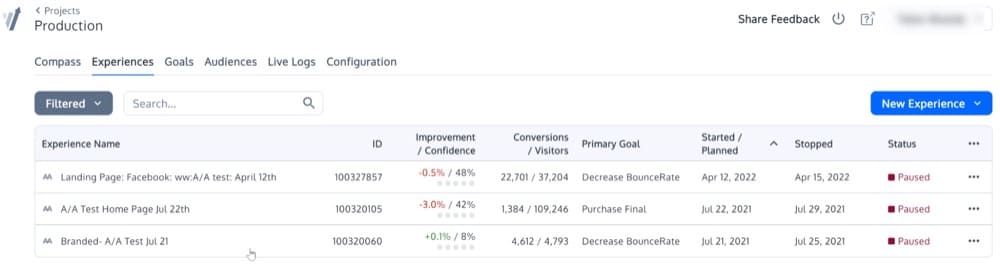

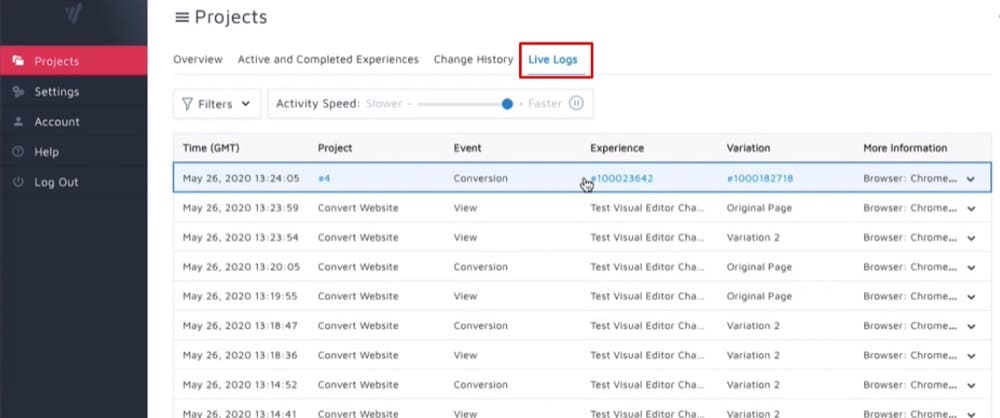

We’ve already covered this, but if you conduct 1000 successive A/A tests with large audiences, follow all of the requirements and achieve statistically significant results far more frequently than predicted, it is possible that your A/B testing framework is broken.

It could be that the samples aren’t properly randomized. Or maybe, the two variations aren’t mutually exclusive.

Here’s what that might look like:

Can I Run an A/A Experience at the Same Time as an A/B Experience?

There’s a chance you’ll need to run an A/A test at the same time as an A/B test, on the same website.

In that case, here are a few possibilities:

- You could run both tests simultaneously on the same audience and not worry about them conflicting with one another.

- You could conduct the experiments simultaneously, but with distinct audiences.

- You could perform the tests sequentially (complete test 1 (A/A test) before moving on to test 2 (A/B test)).

Option 3 is the safest, but it drastically limits how many experiences you can run.

It is entirely possible to run multiple experiences on the same page or set of pages at the same time.

But, keep in mind that bucketing in one experiment may have an impact on data from another simultaneously occurring experiment.

Here are the two most important Convert techniques to use when running parallel tests:

- Allocate 50% of traffic to the A/A test, while allowing the other 50% of traffic to enter the other running A/B experiences.

- Exclude A/A visitors from other A/B tests.
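Conceptually, these two techniques amount to splitting visitors into two mutually exclusive groups before any variation is assigned. The sketch below shows one way to express that idea with a salted hash. It is not how Convert implements traffic allocation; the function names, the salt, and the route labels are hypothetical.

```python
import hashlib

def _fraction(user_id: str, salt: str) -> float:
    """Map a user to a stable pseudo-random number in [0, 1)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64

def route(user_id: str, aa_share: float = 0.5) -> str:
    """Send a visitor either to the A/A test or to the pool of A/B tests, never both."""
    if _fraction(user_id, "layer") < aa_share:
        return "aa_test"
    return "ab_tests"

routes = [route(f"user-{i}") for i in range(10_000)]
aa_fraction = routes.count("aa_test") / len(routes)
print(round(aa_fraction, 3))
```

Because each visitor gets exactly one route, bucketing in the A/A test cannot influence the data collected by the A/B tests.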

Pre-Test QA Process: An Interesting Alternative to A/A Testing

When deciding whether or not to run an A/A test, the answers will vary depending on who you ask. There’s no doubt that A/A testing is a contentious issue.

One of the most common arguments against A/A testing is that it takes too long.

A/A testing consumes a significant amount of time and often requires a considerably higher sample size than A/B testing.

When comparing two identical versions of a website, a high sample size is required to demonstrate a significant bias.

As a result, the test will take longer to complete, potentially cutting into time spent on other important tests.

In such cases where you do not have much time or high traffic, it is best to consider conducting a pre-test QA process.

In this blog article, we walk you through all the steps you’ll need to follow to perform a full QA process. The methods you use are up to you and depend on how much time you have on your hands.

Can SRM Exist on A/A Tests?

Ask yourself this: Is the actual split of users observed during your A/A test close to the ratio you configured (50/50, 90/10, or any other ratio)?

If not, you’re faced with one of two problems: Either there is an issue with how you’re invoking the testing infrastructure from within your code (making it “leaky” on one side) or there is a problem with the test infrastructure’s assignment mechanism.

A sample ratio mismatch error (SRM error) is a defect that an A/A test can detect.

If your ratio comes out to something like 65/35, you should investigate the issue before running another A/B test using the same targeting strategy.
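A common way to check for SRM is a chi-square goodness-of-fit test on the observed visitor counts. The sketch below uses only the standard library and assumes two variations. The 972/980 counts come from the Convert example earlier in this article, while the 650/350 split is a made-up illustration of a 65/35 ratio.

```python
import math

def srm_p_value(observed_a: int, observed_b: int, expected_share_a: float = 0.5) -> float:
    """p-value of a chi-square goodness-of-fit test for a two-way traffic split."""
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))

balanced = srm_p_value(972, 980)  # close to the configured 50/50 split
skewed = srm_p_value(650, 350)    # a 65/35 split on a 50/50 configuration
print(round(balanced, 3), skewed)
```

Practitioners often treat a very small p-value (for example, below 0.01) as a sign of SRM. The exact threshold is a judgment call rather than something prescribed here.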

Do the Advantages of A/A Testing Outweigh the Disadvantages?

Although A/A testing should not be done on a monthly basis, it is worthwhile to test your data when setting up a new A/B tool.

If you catch the faulty data now, you’ll have more confidence in your A/B test results later on down the road.

While the decision is ultimately yours, it is highly advisable that you conduct A/A tests if you’re getting started with a new tool. If not, we recommend that you set up a strict pre-test QA procedure, which will save you time, money, and traffic.

We hope that the screenshots above answered your questions, but if not, sign up for a demo to see for yourself how easy it is to set up an A/A test with Convert Experiences.