11 A/B Tests Growth Teams Can Run Today

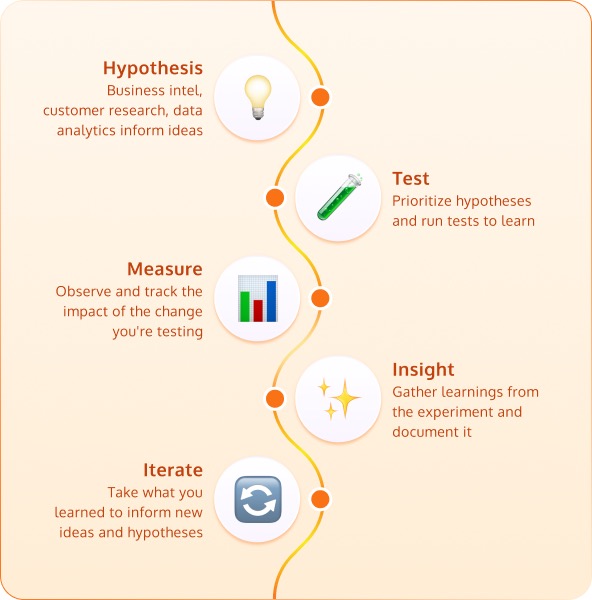

Growth doesn’t come from one-off tests. It comes from loops that compound.

Every experiment feeds the next, across acquisition, activation, retention, revenue, and referral. Run them systematically and your gains stack up and compound.

That’s why this article on A/B tests for growth teams is structured around the Pirate Metrics funnel (AARRR). For each stage, you’ll see real growth experiments with results and lessons you can adapt to your own product.

11 Growth Team A/B Tests at a Glance

| Stage (AARRR) | Experiment | Metric Impacted | Key Learning |
|---|---|---|---|
| Acquisition | PLG Flow vs Cluttered Landing (Typeform vs Mailchimp) | Viral loop conversions | Contextual flows outperform clutter; connect next step to recent user action. |
| Acquisition | Newsletter Funnel Validation (PR Package) | Subscriber signups, revenue | Scrappy funnels can validate demand and self-fund before product launch. |
| Acquisition | Social Proof Headline Test (Trading Platform) | +11% signups | Social proof nudges fence-sitters at signup. |
| Acquisition | Removing Friction from Trials (Lusha) | Trial signups, trial-to-paid | Removing credit card tripled signups without hurting paid conversion. |
| Activation | AI-Suggested Templates in Onboarding (Magic Hour) | First-week retention +18% | Reducing cognitive load with templates drives users to the “aha” moment faster than a blank slate. |
| Activation | Interactive Onboarding Checklist (Tevello) | +21% activation rate | Quick wins and visible progress beat long tutorials. |
| Retention | Personalized Cancel Flow (B2C SaaS) | Saved ~30% of cancellations; +14.2% from copy tweak | Relevant interventions save more users than blanket discounts. |
| Retention | Progress Meter for New Users (Prezlab) | +17% new-account retention | Making progress visible keeps users engaged past early drop-off. |
| Revenue | The Labour Illusion (Kayak-style test) | Conversion trust in search/aggregation | Slowing down results with “effort” messaging can increase perceived value. |
| Revenue | Contextual Paywalls (Canva) | Paid conversions | Paywalls work best when triggered at the moment of intent. |
| Referral | Accelerating Review Loops (Tradefest) | +37% review volume; -60% time-to-review | Timely nudges and gamification speed up referral loops. |

Acquisition Experiments to Attract Users

Growth experiments often start at the top of the funnel, finding sharper ways to bring in new users or validate demand before investing further.

1. PLG Flow vs Cluttered Landing

By Rosie Hoggmascall, Growth Lead at Fyxer AI.

In one of her product growth dives, Rosie compared how Typeform and Mailchimp leveraged PLG loops for acquisition. Both relied on exposure to their product (surveys or email footers) to attract new signups.

Hypothesis: A clean, contextual PLG flow would convert more end-users into signups than a cluttered, generic one.

Design: Typeform added a sleek prompt at the end of a survey — “You’ve taken one. Now make one.” One tap led to a minimalist landing page with clear hierarchy and zero distractions. Mailchimp used a “Powered by Mailchimp” footer link pointing to a dense, text-heavy page disconnected from the context.

Outcome: Typeform’s design made the jump from participant to creator feel natural. Mailchimp’s cluttered page broke the flow and lost attention. While numbers aren’t public, behavioral science (cognitive load, clarity of next step) suggests that Typeform’s loop outperformed.

Lesson: Acquisition loops work best when they connect the dots between what a user just did and what they could do next. Context beats clutter.

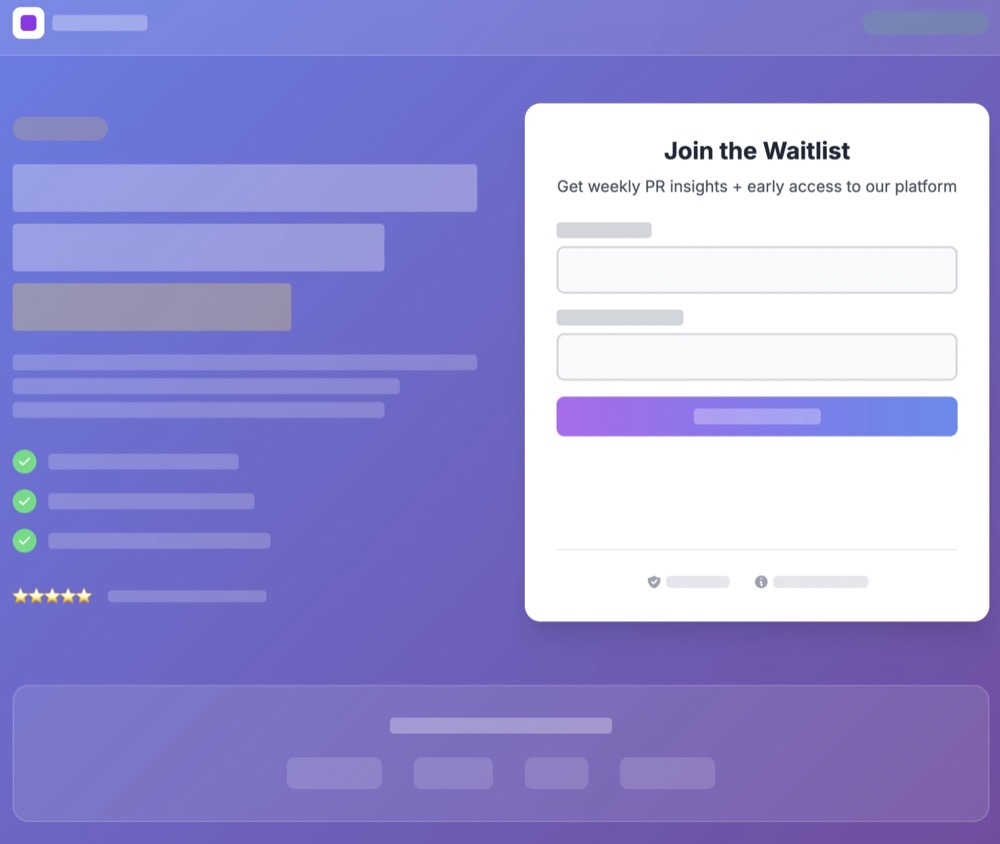

2. Newsletter Funnel Validation

By Victor Hsi, Founder & Community Manager, PR Package.

PR Package needed to validate demand for its SaaS idea before writing a line of code. Instead of building first, they tested whether a simple newsletter funnel could attract the right audience.

Hypothesis: A lightweight newsletter funnel could validate demand and offset acquisition costs before building the SaaS product.

Design: Instead of going straight into development, Victor’s team tested whether users would subscribe to a curated newsletter. The sign-ups were funneled into a loop where early affiliate revenue helped pay for ads, doubling as an acquisition engine.

Outcome: The funnel not only attracted qualified sign-ups but also generated enough revenue to pay for itself. Acquisition became self-sustaining, and the signal was clear: there was real demand worth building for.

Lesson: You don’t always need to launch a full product to test acquisition. A scrappy funnel can validate interest and fund itself.

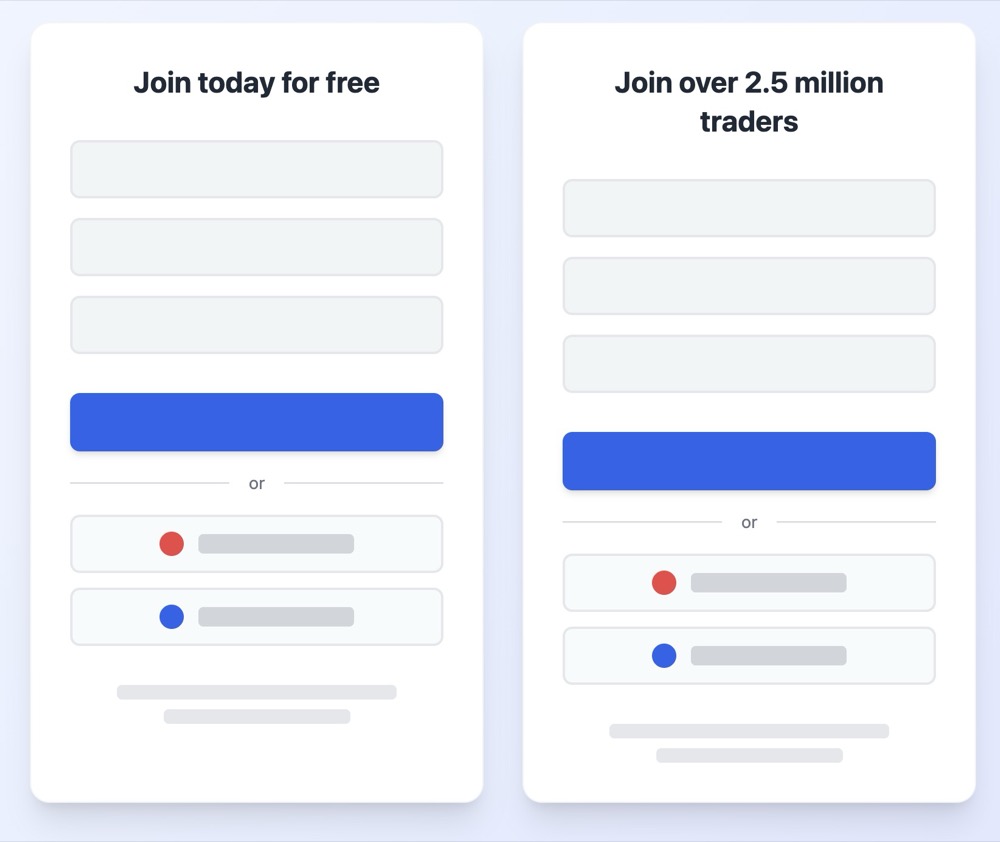

3. Social Proof Headline Test

By Oliver West, Regional Head of Customer Experience at VML.

A retail trading platform was struggling to get more users to commit at the signup stage. The question was whether social proof in the headline would give fence-sitters the push they needed.

Hypothesis: Adding a social proof stat to the signup form would increase conversions.

Design: Oliver’s team replaced the generic headline “Join today for free” with “Join over 2.5 million traders.” Same form, same flow, just one line changed.

Outcome: Signups lifted by 11%. The simple use of behavioral science (social proof) nudged more visitors to commit.

Lesson: Tiny changes can unlock big wins when they tap into user psychology. At the acquisition stage, credibility signals are just as important as usability.

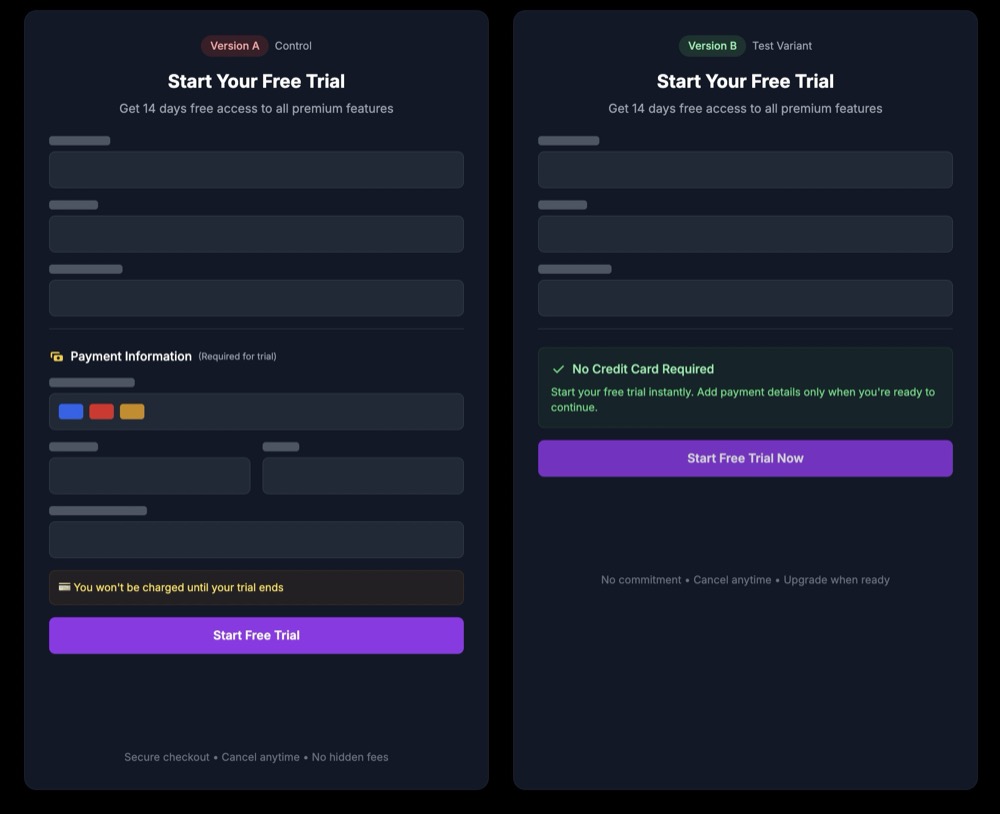

4. Removing Friction from Trials

By Yarden Morgan, Director of Growth, Lusha.

Lusha noticed a drop-off at the trial signup stage. Prospects balked at the credit card form, and the team questioned whether that barrier was worth the churn.

Hypothesis: Taking the credit card out of the trial signup process would increase signups without hurting trial-to-paid conversion.

Design: Lusha ran a simple A/B test: one version asked for card details upfront, the other didn’t. Everything else about the trial flow stayed the same.

Outcome: Signups tripled while trial-to-paid remained steady. The funnel widened without diluting quality.

Lesson: Sometimes the fastest lift in signups comes from removing barriers you assume are “standard.”

Growth A/B Tests in the Activation Stage

A/B tests in the activation stage focus on the moment users sign up and actually start experiencing value. Small barriers or nudges here often decide whether a new user becomes active or drifts away.

5. AI-Suggested Templates in Onboarding

By Runbo Li, CEO of Magic Hour

A common problem in design and creation products like Magic Hour is that too many signups stall at a blank canvas. The team wanted to help new users reach their “aha” moment more quickly.

Hypothesis: Preloading onboarding with AI-suggested templates would reduce cognitive load and help users experience value sooner.

Design: Instead of dropping users into an empty editor, Magic Hour tested showing ready-made templates. The templates acted as launchpads, letting users tweak instead of starting from scratch.

Outcome: First-week retention improved by about 18%. Runbo summarized the learning: “Reducing cognitive load upfront matters more than dazzling someone with limitless options early on.”

Lesson: Showing everything your product can do at once can impede activation. A lite version of your product’s UI, with templates, presets, or starter content, can guide new users to the “aha” moment faster.

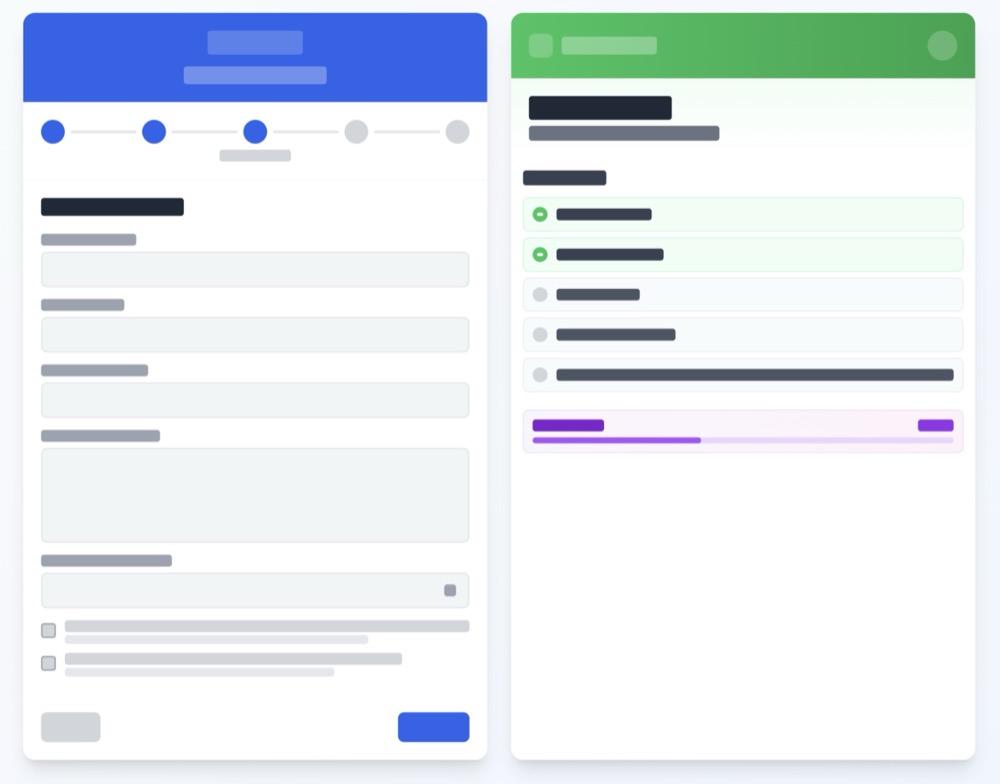

6. Interactive Onboarding Checklist

By Or Moshe, Founder and Developer, Tevello.

Tevello wanted to boost early product use. Their tutorial-heavy onboarding wasn’t engaging new users, and too many were dropping out before completing setup.

Hypothesis: A short, interactive checklist would give new users a sense of momentum and improve early activation.

Design: The team swapped a long tutorial flow for a checklist of quick actions inside the app. Each ticked item gave immediate feedback, a feeling of progress.

Outcome: Activation rose by 21%. Users who completed the checklist were more likely to continue exploring the product.

Lesson: Or’s takeaway was that “giving people an early ‘win’ drives confidence more than over-explaining features.” Give new users something they can complete in minutes, not hours or days.

Retention-focused A/B Tests

At the retention stage, you test to keep users engaged after the initial excitement fades. The right intervention at the right moment can prevent churn and extend lifetime value.

7. Personalized Cancel Flow

By Khushi Lunkad, Growth Marketing and Product Operator with Toption.org.

A B2C SaaS startup faced high churn. By month three, nearly half of new users were gone. The cancel flow became the battleground to save customers before they left for good.

Here’s what Khushi did:

Hypothesis: A tailored cancel flow could save customers at the brink of churn better than blanket discounts.

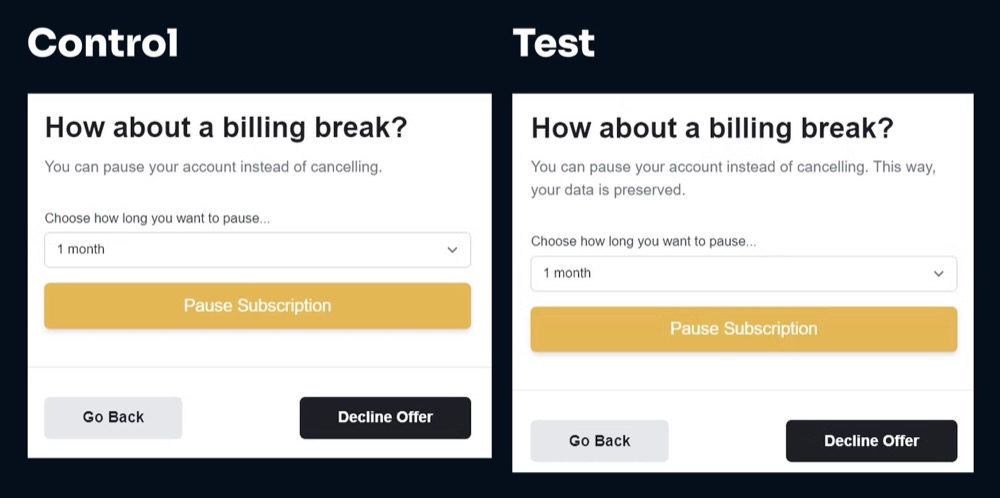

Design: Khushi audited the reasons people gave for canceling, then redesigned the flow. Instead of offering every customer the same discount, users were segmented.

Some were nudged toward support, others were offered education, and some were given alternative billing options. Tactical friction was added too: a small message like “This way, your data can be preserved” made going through with the cancellation less appealing.
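The segmentation step can be sketched as a simple lookup from cancel reason to intervention. This is only an illustration: the reason codes, the intervention names, and the pairing between them are hypothetical, since the source does not list which reasons mapped to which offer.

```python
# Hypothetical reason codes and interventions; the article does not
# disclose the real mapping used in this experiment.
INTERVENTIONS = {
    "technical_issue": "route_to_support",
    "hard_to_use": "offer_education",
    "too_expensive": "offer_alternative_billing",
}

# Fallback when the stated reason is unknown or missing.
DEFAULT_INTERVENTION = "confirm_cancellation"


def choose_intervention(reason: str) -> str:
    """Pick the cancel-flow branch for a user's stated cancel reason."""
    key = reason.strip().lower()
    return INTERVENTIONS.get(key, DEFAULT_INTERVENTION)


for reason in ["Too_Expensive", "technical_issue", "moving abroad"]:
    print(reason, "->", choose_intervention(reason))
```

Keeping the mapping in data rather than in branching logic makes each branch easy to swap out when you test a new intervention for one segment.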

Outcome: About 30% of customers who clicked “cancel” ended up staying. The extra line about preserving data alone improved churn prevention by a further 14.2%.

Lesson: Retention is about relevance, not bribery. Discounts aren’t the only lever; context-specific interventions work harder.

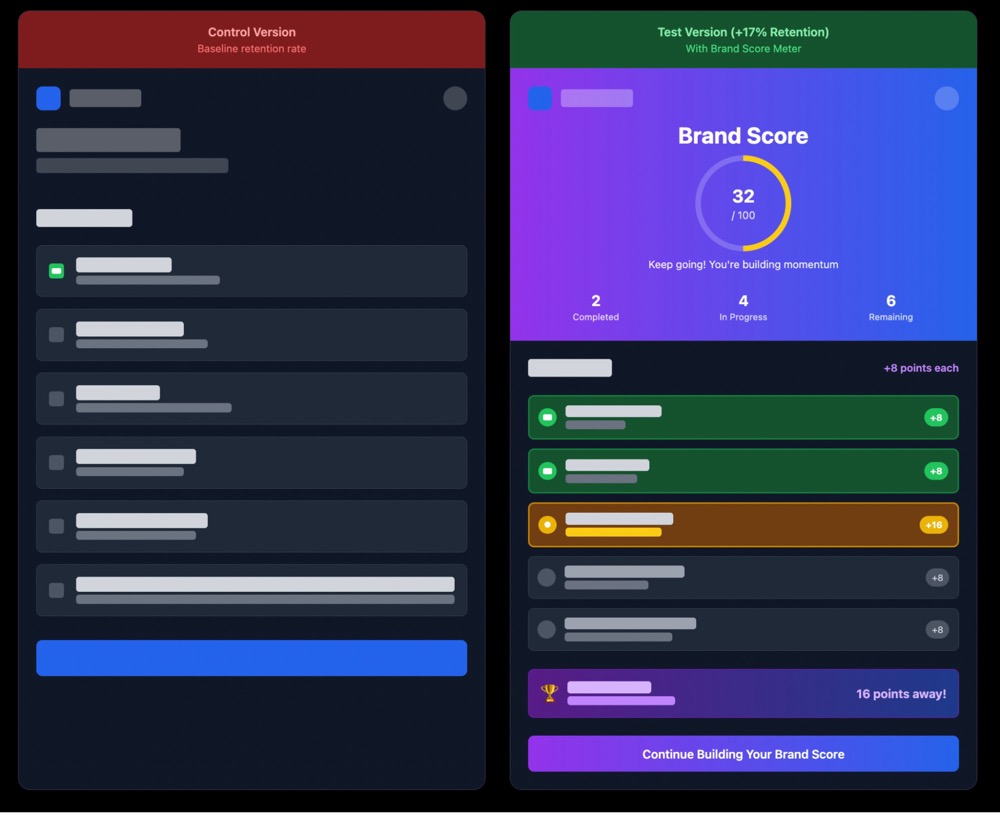

8. Progress Meter for New Users

By Ibrahim Alnabelsi, VP of New Ventures at Prezlab.

Prezlab struggled with new accounts fizzling out. The team asked: What if we made progress visible, so customers had a reason to come back and complete their setup?

Hypothesis: Showing users a visual “brand score” meter during setup would increase engagement and retention.

Design: Prezlab introduced a real-time progress bar that reflected how complete a user’s brand assets were inside the platform. It gamified the setup and gave instant feedback.

Outcome: Retention among new accounts jumped by 17%. Users who saw themselves moving closer to “completion” were far more likely to return.

Lesson: Progress is addictive. Making improvement visible helps users push past the early drop-off stage.

Revenue Growth Experiments

Revenue experiments test how product design, pricing, or monetization flows impact what customers are willing to pay and how they prefer to do so. These often reveal counterintuitive truths about trust and context.

9. The Labour Illusion (a Kayak-style Test)

By Mike Fawcett, CRO Lead & Founder at Mammoth Website Optimisation.

In aggregation businesses like travel search, results often come back instantly. But some customers may not trust that speed. Could simulating “hard work” in the background increase conversions?

Hypothesis: Making search results feel like they take effort would increase trust and conversions in aggregation businesses.

Design: Inspired by Kayak, Mike tested slowing down search results with a loading animation and rolling status messages like “Searching the market” and “Collecting the best deals.” The results were technically instant, but the illusion suggested the system was working hard on behalf of the user.

Outcome: Conversions improved. People trusted the results more when they felt effort was involved, a classic example of the “Labour Illusion” bias.

Lesson: Faster isn’t always better. Sometimes, showing the work matters more than speed.

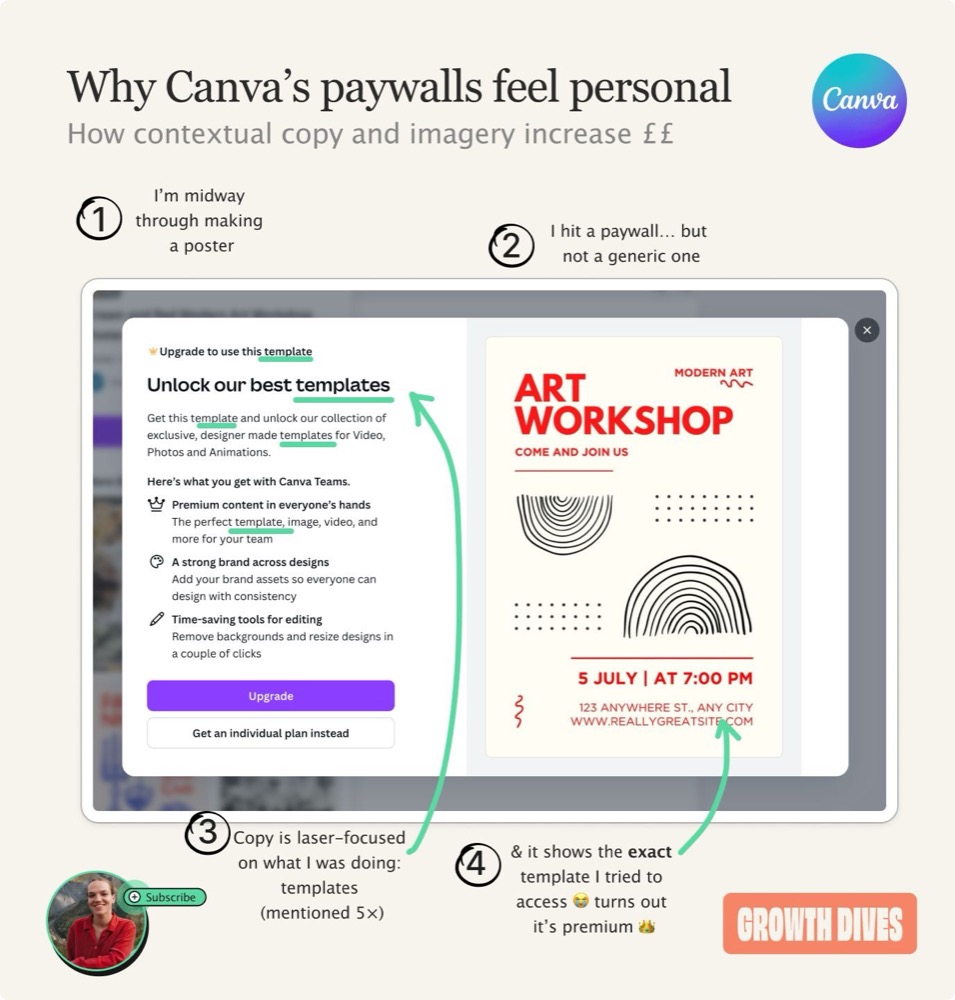

10. Contextual Paywalls

By Rosie Hoggmascall, Growth Lead at Fyxer AI.

This is another of Rosie’s growth dives.

In this example, Canva wanted to monetize free users without breaking the creative flow. Would contextual paywalls, triggered at the moment of use, convert better than generic upsells?

Hypothesis: Paywalls triggered in context, when a user hits a feature they want, convert better than generic upsell prompts.

Design: Canva tested paywalls that appeared directly inside the editing flow, tied to the feature in use (like a premium font or image). Instead of a static “Upgrade Now” page, the upsell happened at the moment of intent.

Outcome: This works because users feel guided rather than blocked, and upgrading becomes the natural next step within the workflow they’re already on.

Lesson: Monetization works best when it aligns with user intent. Think of paywalls like bridges, not fences. They should extend momentum, not block or interrupt.

Referral Experiment Example for Growth Teams

Testing the referral step in SaaS or ecommerce growth focuses on loops: getting current users or customers to bring in the next wave. Timing and framing are often more important than the size of the incentive.

11. Accelerating Review Loops

By Joseph Cochrane, Co-Founder & CSO of Tradefest.io

Tradefest relied on reviews to fuel its growth loop, but exhibitors were slow to leave feedback. The challenge was accelerating that loop without over-incentivizing.

Hypothesis: Nudging exhibitors to leave reviews sooner would speed up the referral loop and increase overall review volume.

Design: Joseph tested review prompts immediately after an event, with light gamification. Exhibitors were encouraged to share feedback within 48 hours, and the system surfaced reviews quickly to drive visibility.

Outcome: Review volume increased by 37%, and the average time-to-first-review dropped by 60%. The faster loop meant new prospects saw fresher social proof, creating a compounding acquisition effect.

Lesson: Referrals aren’t only about rewards. Timing and immediacy can multiply the loop’s effectiveness.

What Growth Leaders Ask About A/B Testing

1. How do we ensure this experiment supports our growth goals?

Start with the growth target, not the test idea. If the business priority is reducing churn, then the hypothesis needs to reflect that: “A pause option will reduce cancellations by 10%” is sharper than “let’s tweak the cancel page copy.”

Every test should be mapped to a business lever, such as activation, retention, or revenue. If it isn’t, you’re spending cycles on busywork.

2. Which KPIs should we use to measure success, and how do we avoid vanity metrics?

Pick one metric of interest before you launch. For SaaS, that might be the trial-to-paid conversion rate. For ecommerce, it might be revenue per visitor.

Secondary or proxy metrics can add color, but the winner is declared on the primary.

Vanity metrics creep in when you mistake activity for progress. More searches per user, more clicks, and more time on site all look good, but they can mask problems like user frustration.

Always ask: Does this metric tie to customer value and business growth?
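Declaring a winner on the primary metric usually comes down to comparing two conversion rates. The sketch below uses a pooled two-proportion z-test from the Python standard library; the visitor and conversion counts are hypothetical, and the 0.05 threshold is a common convention rather than something the article prescribes.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": (rate_b - rate_a) / rate_a,
        "z": z,
        "p_value": p_value,
    }


# Hypothetical primary metric: trial-to-paid, 20,000 trials per variant.
result = two_proportion_z_test(conv_a=800, n_a=20_000, conv_b=900, n_b=20_000)
print(f"lift={result['relative_lift']:.1%}  p={result['p_value']:.4f}")
print("winner on primary metric" if result["p_value"] < 0.05 else "inconclusive")
```

Secondary metrics can be run through the same function for context, but only the primary metric's result should decide the test.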

3. How do we determine the right sample size and test duration for confidence?

There’s no shortcut: calculate it. Power analysis upfront tells you how many users you need based on your baseline, the effect size worth acting on, and your appetite for risk.

As a rule of thumb, you want at least 100 conversion events per variant, and tests should run through a full business cycle (seven days or a multiple of seven) to smooth out weekday/weekend patterns. End too early and you’re just coin-flipping.
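The power analysis described above can be sketched with the standard normal-approximation formula for comparing two proportions. The baseline rate, target lift, and daily traffic below are hypothetical inputs, and the defaults (5% significance, 80% power) are common conventions, not values from the article.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in each arm to detect the lift with a two-sided z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # risk of a false positive
    z_power = NormalDist().inv_cdf(power)          # chance of catching a real effect
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def duration_in_days(n_per_variant: int, daily_users: int, variants: int = 2) -> int:
    """Days needed to fill every arm, rounded up to whole weeks."""
    days = ceil(n_per_variant * variants / daily_users)
    return ceil(days / 7) * 7


# Hypothetical inputs: 5% baseline conversion, 10% relative lift worth acting on,
# 4,000 eligible users entering the test per day.
n = sample_size_per_variant(baseline=0.05, relative_lift=0.10)
days = duration_in_days(n, daily_users=4_000)
print(f"{n} users per variant, run for {days} days")
```

With these inputs the requirement lands at roughly 31,000 users per variant. Halving the detectable lift roughly quadruples that number, which is why the effect size worth acting on should be agreed before launch.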

4. What pitfalls should we watch for in experiment design?

Bias is the constant enemy. Peeking early inflates false positives. Poor randomization means you’re testing against uneven groups. Seasonality can swing results if you’re not running long enough.

Parallel tests without guardrails can also cross-contaminate. Some teams run periodic A/A tests just to check that their allocation systems are working. You can reduce these risks by:

- Randomizing properly,

- Resisting the urge to peek, and

- Logging external factors that might distort results.
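One way to randomize properly and to check the allocation system is sketched below: deterministic hash-based bucketing, salted with the experiment name so parallel tests split users independently, plus a sample ratio mismatch check of the kind an A/A test would surface. The function names, experiment name, and user IDs are hypothetical, and this is one common approach rather than the method any team in this article used.

```python
import hashlib
from math import sqrt
from statistics import NormalDist


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically bucket a user; the experiment name acts as a salt."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def srm_p_value(n_control: int, n_treatment: int) -> float:
    """Chi-square test (1 degree of freedom) against an expected 50/50 split."""
    expected = (n_control + n_treatment) / 2
    chi2 = ((n_control - expected) ** 2 + (n_treatment - expected) ** 2) / expected
    # With 1 degree of freedom, chi2 is the square of a standard normal variable.
    return 2 * (1 - NormalDist().cdf(sqrt(chi2)))


counts = {"control": 0, "treatment": 0}
for i in range(20_000):
    counts[assign_variant(f"user-{i}", "cancel-flow-copy")] += 1

p = srm_p_value(counts["control"], counts["treatment"])
print(counts, f"SRM p-value={p:.3f}")
```

A very small p-value here (many teams use a strict threshold such as 0.001) suggests the split itself is broken, in which case the test's results should not be trusted until the allocation bug is found.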

5. How can we scale experimentation across teams without sacrificing rigor?

Scaling isn’t about cranking out more tests. It’s about building the rails so more teams can run high-quality tests in parallel. That means:

- Documenting hypotheses and results in a shared, searchable base.

- Standardizing briefs and decision rules so every team speaks the same language.

- Training cross-functional squads (product, design, data, and engineering) so the basics don’t have to be re-taught for every experiment.

The companies running thousands of tests a year aren’t reckless. They’ve invested in process, culture, and infrastructure that let them move fast without breaking trust in the data.

6. Who should own the testing process, and how should product, data, and engineering collaborate?

No single function can carry it. Product and marketing define the hypothesis and connect it to the growth strategy. Design crafts the experience. Engineering ensures clean implementation and event tracking. Data runs the power analysis and reads the results.

What’s critical is that one person, the experimentation lead, keeps cadence and quality consistent. Without that role, tests drift, results get siloed, and rigor slips.

7. How do we ensure insights, both wins and losses, feed into future product decisions?

Treat learning as an asset. Log every test (what you tried, what happened, and what you now believe) in a central A/B testing learning repository. Share it widely, not just in the immediate team.

Even “failed” tests are paid research if you capture the why. Spotify runs regular sessions to broadcast learnings across teams. In 2010, Netflix famously used an exploratory PS3 test to guide years of product evolution. They still embody that culture to this day. Same with Amazon, Google, and Booking.com.

The only real failed test is one you don’t learn from.
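A learning repository can start as a structured record per test. The sketch below is one hypothetical shape for such a record, with a naive keyword search over it; the field names and tags are assumptions, and the example entry reuses the Lusha test described earlier in this article.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    name: str
    stage: str            # AARRR stage
    hypothesis: str       # what you tried and why
    primary_metric: str
    outcome: str          # what happened
    belief: str           # what you now believe
    tags: list[str] = field(default_factory=list)


def to_json_line(record: ExperimentRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), ensure_ascii=False)


def search(records: list[ExperimentRecord], keyword: str) -> list[ExperimentRecord]:
    """Return records whose serialized form mentions the keyword."""
    needle = keyword.lower()
    return [r for r in records if needle in to_json_line(r).lower()]


log = [
    ExperimentRecord(
        name="No credit card at trial signup",
        stage="acquisition",
        hypothesis="Removing the card form raises signups without hurting trial-to-paid",
        primary_metric="trial signups",
        outcome="Signups tripled; trial-to-paid held steady",
        belief="A card requirement is not needed to keep trial quality",
        tags=["trial", "friction"],
    )
]

print(to_json_line(log[0]))
print([r.name for r in search(log, "friction")])
```

Recording the belief separately from the outcome is the point of the exercise: it is the field that makes a losing test reusable as research.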

Wrapping It Up: Growth Experiments Compound

The best growth tests don’t live in isolation. Each one strengthens a loop, informs the next hypothesis, and compounds over time.

Whether it’s removing friction in trials, saving customers at the edge of churn, or tightening referral loops, these experiments show how growth leaders use A/B testing to move the metrics that matter — acquisition, activation, retention, revenue, and referrals.

Run them, adapt them, and let the learning rate of your team become its real advantage.

Written By

Uwemedimo Usa

Edited By

Carmen Apostu