Should You Choose Frequentist or Bayesian for Your Website A/B Test?

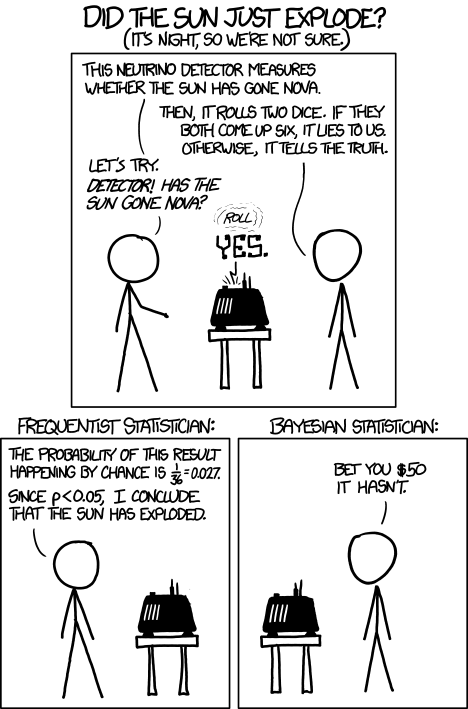

Frequentist and Bayesian are the two main approaches A/B testing tools use to crunch results. One uses a fixed, pre-determined sample size and p-values. The other gives probabilities like “there’s an 85% chance this version is better.”

Which should you trust? It depends on your traffic, test design, and the level of risk you’re willing to take.

This guide explains both approaches, their trade-offs, and the rules expert conversion rate optimizers actually use when choosing between them.

Breaking Down Frequentist Statistics

Frequentist statistics come from the randomized controlled trial (RCT) world and were the first stats methodology used in A/B testing. They rely on fixed sample sizes, pre-defined significance thresholds, and error control across repeated experiments.

Imagine you’re testing whether raising a product price from $9.99 to $11.99 increases revenue per visitor. A classic (fixed-horizon) Frequentist design sets the sample size in advance, during test prep, via a power calculation. To do that, you define a minimum detectable effect (MDE), a significance level (the p-value threshold, commonly 0.05), and the statistical power you want to achieve.
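To make the planning step concrete, here is a minimal sketch of a fixed-horizon sample size calculation. It is an illustration, not Convert's implementation: it assumes a conversion-rate metric (the pricing example above measures revenue per visitor, which would need a variance estimate instead), a two-sided two-proportion z-test, and a hypothetical helper name, `sample_size_per_variant`.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant (two-sided two-proportion z-test).

    baseline_rate: control conversion rate, e.g. 0.03 for 3%
    relative_mde:  smallest relative lift worth detecting, e.g. 0.05 for 5%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance_sum / (p2 - p1) ** 2)


# Illustrative inputs only: a 3% baseline with a 1% vs. a 5% relative MDE.
n_small_mde = sample_size_per_variant(0.03, 0.01)
n_large_mde = sample_size_per_variant(0.03, 0.05)
print(n_small_mde, n_large_mde)
```

Because the required sample grows with the inverse square of the MDE, the 1% MDE in this sketch needs on the order of 25 times more visitors than the 5% MDE.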

When the test completes, you get a p-value and a confidence interval. The p-value tells you how surprising the observed difference would be if the new price made no difference, and the interval gives a range of plausible lift sizes, with error rates that hold over the long run.
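As a rough sketch of what that end-of-test readout involves, the snippet below computes a two-sided p-value and a confidence interval for the difference between two conversion rates. The assumptions are loud ones: it uses a z-test on conversion counts rather than revenue per visitor, the function name and the visitor numbers are made up for illustration, and it is not the calculation any specific platform runs.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (p_value, confidence_interval) for the difference B minus A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a

    # The p-value uses the pooled rate, i.e. it assumes the null of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # The interval uses the unpooled standard error around the observed difference.
    se_unpooled = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    interval = (diff - z_crit * se_unpooled, diff + z_crit * se_unpooled)
    return p_value, interval


# Hypothetical data: 500 of 10,000 visitors convert on control, 570 of 10,000 on the variant.
p_value, interval = two_proportion_z_test(500, 10_000, 570, 10_000)
print(round(p_value, 4), interval)
```

With these made-up numbers the p-value falls below 0.05 and the interval excludes zero, which is the pattern a fixed-horizon test reports as significant.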

That structure is what makes Frequentist statistics powerful.

It’s also why practitioners who want clear guardrails lean on this method.

Common Pitfalls in Frequentist Testing

The strength of the framework doesn’t mean it’s foolproof:

- P-hacking and peeking: Examining results early and stopping when significance is reached inflates false positives.

When you choose Sequential Frequentist in Convert, we use Asymptotic Confidence Sequences (ACS), which provide anytime‑valid confidence intervals and maintain error control under continuous monitoring. This reduces the risk of false positives due to peeking.

- Misinterpreting p-values: A p-value is not “the probability the null is true.” It’s about how consistent your data is with the null, not the null itself.

- Underpowered tests: Too little traffic leads to missed effects (type II errors). One industry analysis of 1,001 A/B tests estimated 70% of A/B tests are underpowered.

- ‘Significance’ doesn’t mean ‘act’: A result that clears the 0.05 threshold doesn’t automatically justify rollout. Business context matters.

Frequentist statistics give you rigor, structure, and a clear framework for decision-making. But they also demand discipline: plan tests in advance, collect enough data, and resist the urge to peek.
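A small simulation shows why peeking is listed first among the pitfalls. This is a simplified sketch, not a model of any real tool: it assumes an A/A test (no true difference), treats the running z-statistic as a sum of equally sized, normally distributed batches, and stops the moment |z| exceeds 1.96. The function name and parameters are hypothetical.

```python
import random
from math import sqrt


def false_positive_rate(looks, simulations=4000, z_crit=1.96, seed=7):
    """Share of simulated A/A tests declared 'significant' at any of `looks` checks."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(simulations):
        running_sum = 0.0
        for k in range(1, looks + 1):
            running_sum += rng.gauss(0.0, 1.0)        # standardized effect of one more batch
            if abs(running_sum / sqrt(k)) > z_crit:   # z-statistic after k batches
                hits += 1
                break                                 # stop the test at the first "win"
    return hits / simulations


single_look = false_positive_rate(looks=1)
twenty_looks = false_positive_rate(looks=20)
print(single_look, twenty_looks)
```

With one look, the rate stays near the nominal 5%. With twenty looks and the same threshold, it is typically several times higher, which is the inflation that sequential methods are designed to prevent.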

How Bayesian Statistics Handle Evidence

Bayesian statistics are a probabilistic framework for reasoning under uncertainty. Instead of relying only on fixed sample sizes and long-run error rates, they update beliefs as new data comes in.

The math comes from Bayes’ Theorem, but in practice it’s a way of asking: Given what I already believe, and what I’ve now observed, how likely is it that this variation is better?

In A/B testing terms:

- Prior probability is what you believe about a variant’s conversion rate before you run the test (based on history, expert judgment, or a neutral “uninformative” prior).

At Convert, we start Bayesian experiments with uninformed priors, i.e., 100% divided by the number of variants. So, for two variants, each will have a 50% chance to win at the beginning. It’s like a race to find out which variant ultimately proves to perform better.

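- Likelihood is how probable the observed test data is under each possible conversion rate. It is the evidence that updates the prior. The intuitive “chance to win” that Bayesian tools report is not the likelihood itself; it is read off the posterior.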

- Posterior probability combines both and gives you an updated belief, expressed as something intuitive like: “There’s a 92% chance Variant B is better than control.”

For practitioners juggling speed and business pressure, Bayesian can also offer flexibility. Lucia van den Brink put it this way:

“When the MDEs are 5% or higher, I prefer to go Bayesian. It gives directional answers like ‘there’s a 92% chance the variant is better,’ which helps teams act fast.”

Lucia van den Brink, Founder and Experimentation Lead Consultant at Increase-Conversion-Rate.com and Co-Founder / Director of Women in Experimentation Community

Imagine you’re evaluating whether a new café is “good.” Before looking at reviews, you start with no strong opinion (a neutral prior). You then see 946 positive reviews out of 1,074. Updating your belief with that data, Bayesian analysis might say: “There’s a 99.8% chance this café is good.”

Applied to websites, this is how Bayesian platforms can tell you the probability that a pricing change or new checkout flow is better, based on the test data plus any priors.
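One common way to turn test data into a “chance to win” can be sketched in a few lines. The assumptions here are mine, not a description of any vendor’s engine: each variant’s conversion rate gets a uniform Beta(1, 1) prior, the posterior is the matching Beta distribution, and the probability that B beats A is estimated by sampling. The function name and the visitor counts are hypothetical.

```python
import random


def chance_to_win(conv_a, n_a, conv_b, n_b, draws=20000, seed=11):
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each variant: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Before any data arrives, the two variants are on equal footing.
before_data = chance_to_win(0, 0, 0, 0)
# Hypothetical results: 500 of 10,000 on control vs. 570 of 10,000 on the variant.
after_data = chance_to_win(500, 10_000, 570, 10_000)
print(before_data, after_data)
```

With no data, the estimate sits near 50%, matching the neutral starting point described earlier. As data accumulates, it moves toward whichever variant the evidence favors.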

Where Bayesian Is Often Misunderstood

Despite its appeal, Bayesian analysis isn’t magic. Marketers and testers often trip up in a few areas:

- Priors are subjective. They need to be chosen and justified, not hidden. If vendors set them without transparency, you may be working with assumptions you didn’t agree to.

- Credible intervals ≠ confidence intervals. They’re interpreted differently, but many practitioners mix them up.

- Not a free pass on stopping rules. Some believe Bayesian testing means you can peek endlessly without consequence. In reality, stopping rules still matter.

- Complexity under the hood. Many Bayesian models require computationally heavy methods. Some testing platforms simplify this, but at the cost of hiding details from users.

Bayesian statistics reframes results in intuitive, probability-based language. That’s its strength. But those probabilities rest on assumptions (priors, model choices) that you need to understand before you take action.

Related reading: Bayesian Statistics Primer for A/B Testing

Key Differences Between Frequentist and Bayesian Statistics

Frequentist and Bayesian methods both analyze A/B test data, but they come from different philosophies of probability.

Frequentist approaches see probability as the long-run frequency of events. Bayesian approaches treat it as a degree of belief that updates as new data arrives.

Here’s how the two compare in practice:

| Criteria | Frequentist | Bayesian |
|---|---|---|
| Sample Size Requirements | Requires a fixed sample size, set in advance. Underpowered tests miss effects; overpowered tests detect trivial ones. | Updates beliefs continuously as data comes in. Needs enough evidence for stable posteriors. |
| Ability to Peek (Monitoring Mid-Test) | Traditionally can’t peek without inflating false positives. Sequential methods exist but must be pre-planned. | Often marketed as “peek anytime,” but stopping rules still affect inference. |
| Ease of Explanation to Stakeholders | Clear structure (p-values, confidence intervals (CI)), but concepts like p-values and CIs are often misinterpreted. | Feels more intuitive: outputs direct probabilities (“92% chance variant B is better”). Requires transparency about priors, which some tools hide. |
| Interpretability for Decision-Making | Provides error-controlled evidence, but requires patience and discipline to wait for full sample completion. More vulnerable to inflated false positives if results are peeked at prematurely. | Directly provides probabilities that can guide immediate decisions. Easier to act on, but influenced by priors. |
| Suitability for Different MDEs | Strong when effects are small and precision matters. | Practical for larger effects or when directional guidance is enough. |

Note: Sequential Frequentist testing is still Frequentist. Confidence sequences enable safe continuous monitoring and early stopping while maintaining Frequentist error guarantees without adopting Bayesian priors.

CRO Practitioner Views on the Trade-Offs

Lucia van den Brink defaults to Frequentist because it’s “statistically stronger” and easier to explain to stakeholders familiar with terms like significance. She explains:

“If I have lots of data and a small MDE (around 1%), Frequentist is perfect. I can answer the question of ‘is this change better?’ with a clean yes or no. Plus, stakeholders often know the term ‘significant’ from medicine or psychology, so it’s easier to explain.

For ecommerce, I’m fine lowering the confidence level to 90% because there are no lives at stake here. This speeds up learning.”

Lucia van den Brink

For her, Bayesian is a good fit when MDEs are 5% or higher, with easy-to-translate directional answers.

Ryan Thomas emphasizes rigor and a clear understanding of what your statistical results actually mean:

“I go with Frequentist almost exclusively for the simple reason of having transparent error control and being able to plan tests in advance. Bayesian has no concept of statistical power, peeking, sample size, or test planning in general. But sweeping these things under the rug doesn’t make them go away. An underpowered test is still underpowered even when the Bayesian CTBC looks good.”

Ryan Thomas, Co-Founder of Koalatative

What Bayesian Statistics Can’t Do (Despite A/B Testing Tool Vendor Claims)

Bayesian methods are powerful, but some of the common marketing claims around them don’t hold up under scrutiny:

- “Bayesian is faster.” This myth suggests that updating posteriors continuously means you need less data. In reality, with large samples and neutral priors, Bayesian and Frequentist converge to the same result. Well-designed Frequentist group-sequential tests can also shorten expected sample sizes significantly.

- “You can peek anytime.” The fact is, posterior calibration under optional stopping is nuanced. Some Bayes factors are invariant to stopping, but with default priors or composite hypotheses (the kind most tools use), robustness breaks down. Stopping rules still matter, and ignoring them can lead to biased results.

- Bayesian outputs are “plug-and-play objective truth.” Bayesian blends priors with new data. Without transparency about those priors, or with vague “probability to beat baseline” metrics, it’s easy for users to misinterpret results or for vendors to oversimplify them.

Yes, Bayesian testing gives intuitive probabilities you can act on. But it doesn’t eliminate the need for rigor around priors, stopping rules, or sample size.

For a deeper look at where vendors oversell and what Bayesian really can (and cannot) do, see our Bayesian Statistics Primer.

When to Use Frequentist in Website A/B Testing

Frequentist statistics are the default choice in many industries and remain the backbone of large-scale experimentation programs at companies like Google, Microsoft, and Amazon. The reason is simple: they offer clear error control, repeatable procedures, and results that can stand up to scrutiny.

Business and Testing Contexts Where Frequentist Fits Best

- In regulated industries like finance, healthcare, and other high-stakes fields, error control is non-negotiable. A false positive can mean wasted millions, or, in the case of medicine, human risk. Frequentist methods, with their strict error bounds, are trusted in clinical trials and quality assurance because they quantify risk in hard numbers.

- For pricing, quality control, and surveys. When a business needs repeatable, objective thresholds (e.g., pricing elasticity tests, manufacturing quality control, or large-scale survey analysis), the Frequentist framework provides reliable “yes/no” answers.

- Frequentist probability defines outcomes in terms of long-run frequency. That matches well with tests you’d expect to repeat, such as checkout flows, sign-up steps, or recurring product experiments.

Error Control in High-Stakes Environments

In regulated or high-impact settings, teams often set stricter thresholds than the common 95% confidence level. Pharmaceutical trials, for instance, might require 99% confidence, accepting the higher data burden to avoid costly false positives.

Risk frameworks often build in:

- Lower significance thresholds (e.g., p < 0.01) for critical changes.

- A/A tests to ensure the system itself isn’t generating false positives.

- Replication and holdbacks to confirm results before rolling them out broadly.

- Ramp-ups that gradually expand exposure while monitoring real-world impact.

These practices acknowledge a simple truth: with a 5% significance threshold, roughly 1 in 20 tests of a change that has no real effect will still produce a false positive. Multiply that across dozens or hundreds of experiments, and false learnings become inevitable unless controlled for.
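The arithmetic behind that warning is short enough to check directly. The sketch below assumes the tests are independent and that none of the tested changes has a real effect; the function name is a hypothetical one chosen for illustration.

```python
def prob_at_least_one_false_positive(num_tests, alpha=0.05):
    """Chance that at least one of `num_tests` independent null tests looks significant."""
    return 1 - (1 - alpha) ** num_tests


one_test = prob_at_least_one_false_positive(1)
twenty_tests = prob_at_least_one_false_positive(20)
print(one_test, twenty_tests)
```

Under those assumptions, a single test carries a 5% risk, while a program of 20 such tests has roughly a 64% chance of producing at least one false winner.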

Why Frequentist Still Matters

Frequentist methods aren’t always trendy, but they offer something Bayesian doesn’t: transparent, objective error control tied to repeated procedures.

If your test involves a small minimum detectable effect, operates in a regulated space, or demands results that will be challenged by skeptics, Frequentist is often the safer and more defensible path.

When to Use Bayesian in Website A/B Testing

Bayesian statistics shine when data is limited, prior knowledge matters, or when decisions need to adapt as new information arrives.

Contexts Where Bayesian Fits Best

- When traffic is low or data is incomplete, Bayesian methods can incorporate prior knowledge to stabilize estimates. This is why they’re common in clinical trials and other fields where sample sizes are constrained.

- Priors allow teams to encode historical data or expert judgment directly into the model. When done transparently, this can make results more actionable earlier.

- Bayesian frameworks update as data flows in, making them suitable for environments where you need to act quickly, such as product-led growth loops or high-velocity ecommerce.

- When communicating uncertainty. For stakeholders, saying “variant B has a 90% chance of being better” is often clearer than explaining p-values and error rates.

Examples of where Bayesian testing is used:

- Subscription flows: Product teams running growth loops often don’t want to wait for fixed sample sizes. A Bayesian probability that a variation improves retention is easier to integrate into continuous decision-making.

- Ecommerce: A homepage hero test with higher expected lifts may not require the rigidity of Frequentist planning. Bayesian results can guide faster iteration, even if the certainty isn’t bulletproof.

The MDE Trade-Off

- Small MDEs (1-2%). Frequentist tests, with proper power calculations, are often more reliable.

- Larger MDEs (5%+). Bayesian becomes practical, offering usable directional insights without waiting for very large samples.

Limits of Bayesian Stats in CRO Practice

The appeal of Bayesian testing is often oversold. Studies of Bayesian A/B testing platforms show that “probability to be best” metrics can behave counterintuitively in A/A tests, and subjective priors can blur the line between evidence and executive opinion.

And while Bayesian analysis is often framed as “faster,” in online testing, the real speed gains usually come from Sequential Frequentist methods.

The Bottom Line

Bayesian testing is most useful when:

- You have limited data but credible priors.

- You need probabilities that stakeholders can act on.

- The expected lift is large enough that directional insights are still valuable.

It’s not a shortcut to certainty. But used in the right contexts, Bayesian methods help teams move faster when perfect precision isn’t required.

Expert Perspectives

Practitioners don’t treat Bayesian vs. Frequentist as an ideology. They choose based on context. Here are the rules of thumb from experimentation leaders:

Lucia van den Brink (Beginners → Learn both, choose based on context):

“For beginners and people starting to work at my consultancy, Increase Conversion Rate.com, I always suggest analyzing each A/B test with both Frequentist and Bayesian statistics. It will help you understand both frameworks: how results overlap, but also sometimes differ, and why that is.”

Comparing both methods side-by-side builds intuition for when to trust each and how to interpret results confidently.

Ryan Thomas (Focus on fundamentals → Frequentist for transparency and planning):

“I always advise beginners to invest time in learning about frequentist p-values, power, and minimal detectable effect. They’re intimidating at first, but there are lots of courses and other resources out there to get you up to speed, and the complexity is right in your face instead of being hidden. Once you understand the basic parameters, there’s not much else lurking beneath the surface. From there, if you still want to use Bayesian, then at least you’re doing it from a place of understanding rather than putting blinders on.”

A/B Testing Stats Calculators & the Statistical Models They Use

The stats engine behind your A/B testing calculator directly affects how you interpret results, when you stop a test, and what confidence you can claim in your conclusions. Different vendors take different approaches, and their transparency varies just as much.

Here’s how the major platforms stack up:

| Tool | Model Used | Transparency |
|---|---|---|
| Convert | Supports three modes: Fixed-horizon Frequentist (with in-app power calculations & MDE-based “time until done”), Sequential Frequentist (always-valid confidence intervals via Asymptotic Confidence Sequences), and Bayesian (chance-to-win with configurable thresholds). | Offers detailed documentation (“Statistical Methods Used”) explaining each approach, how they work, and when to apply them. |
| Optimizely | Sequential Frequentist with built-in False Discovery Rate (FDR) control. | Publishes a detailed Stats Engine whitepaper explaining sequential testing and FDR. |
| Kameleoon | Supports both Frequentist and Bayesian. Frequentist is the default, with sequential options, CUPED, and Bayesian also available. | Provides a public “Statistics at Kameleoon” paper outlining methods in depth. |
| Dynamic Yield | Bayesian. Uses “Probability to be Best” and “Expected Loss.” | Labels clearly as Bayesian; explains methodology in documentation. |
| VWO | Bayesian. Built its own engine to replace p-values with probability-to-be-best metrics. | Transparent about its Bayesian preference; explains approach in blogs and resources. |
| AB Tasty | Bayesian-first but also provides a Frequentist analysis mode. Uses Bayesian probability-to-be-best, gain intervals, and Dynamic Traffic Allocation. | Openly positions itself as preferring Bayesian; explains pros/cons in blog and docs. |

In short…

Vendors take different stances. Some commit to Bayesian, others lean on Frequentist, and a few blend both. Convert supports three modes: Fixed-horizon Frequentist, Sequential Frequentist, and Bayesian.

The point is, A/B testing tools and their calculators don’t reveal a universal truth. They reflect the statistical philosophy of the platform. Without transparency, you risk making decisions on assumptions you never agreed to.

Convert’s Options: Both Frequentist & Bayesian Available

Most A/B testing tools force you into one statistical camp. Convert is one of the few platforms that lets you choose and configure the engine that fits your experiment.

Frequentist in Convert

Since October 2023, Convert defaults to t-tests for statistical significance (a shift from z-tests). From there, users can configure how strict or flexible they want to be:

- Confidence level: 95% by default; 99% recommended for mission-critical tests.

- Test type: Two-tailed (default, conservative) or one-tailed (faster, less strict).

Use one‑tailed tests only when the direction of the effect is pre‑specified and actions for the opposite direction are ruled out; otherwise, prefer two‑tailed.

- Multiple comparison correction: Bonferroni, Sidak (recommended for robustness), or None.

- Sensible Defaults menu: Quick presets for “standard” vs. “mission-critical” experiments.
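For readers unfamiliar with the two correction options named above, here is a minimal sketch of the textbook formulas. It shows how each method tightens the per-comparison significance threshold when several variants are compared against control. It is not a description of how Convert applies them internally, and the function names are hypothetical.

```python
def bonferroni_alpha(alpha, comparisons):
    """Per-comparison threshold under the Bonferroni correction."""
    return alpha / comparisons


def sidak_alpha(alpha, comparisons):
    """Per-comparison threshold under the Sidak correction (assumes independent comparisons)."""
    return 1 - (1 - alpha) ** (1 / comparisons)


# Illustrative case: three variants compared against control at an overall alpha of 0.05.
bonferroni = bonferroni_alpha(0.05, 3)
sidak = sidak_alpha(0.05, 3)
print(bonferroni, sidak)
```

Both thresholds are far stricter than 0.05. Sidak’s is marginally less conservative than Bonferroni’s, so it gives up slightly less power while keeping the overall false positive rate at the chosen level when comparisons are independent.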

Convert also integrates power calculations and sequential testing:

- Dynamic mode: Uses observed lift as the MDE for estimating progress (default).

- Fixed mode: Lets you pre-set an MDE for traditional fixed-horizon planning.

- Sequential testing: ACS gives teams the option to monitor continuously while keeping Type I error under control.

Bayesian in Convert

Convert’s Bayesian engine starts with uninformative priors (in a two-variant test, each variant begins with a 50% chance of being best) and updates as data accumulates.

- Decision thresholds:

- 95% (default) → ~5% risk

- 99% → for high-certainty, mission-critical changes

- 90% → for faster, lower-stakes decisions

The output is phrased in plain terms: “There’s a 95% probability Variant A beats Control.” This framing makes results easier to explain to stakeholders who don’t want a lesson in p-values.

Unlike some vendors, Convert documents every detail of how both engines work in its Statistical Methods Used article. That includes test design, power calculations, sequential testing, and clear guidance on when to use Frequentist vs. Bayesian.

With both A/B testing statistics engines available, teams aren’t locked into one philosophy. They can match the method to their context.

Don’t Switch Mid-Test

Convert gives you the freedom to choose between Bayesian and Frequentist engines. That flexibility invites a natural question: what happens if you switch mid-test?

Here’s what happens if you change engines midstream:

- It can lead to different assumptions. Frequentist fixed-sample tests are built on “one-look” designs, while sequential tests rely on pre-defined monitoring rules. Bayesian analysis treats probability as belief updated with evidence. Each framework comes with its own logic.

- Error control can shift because engines handle confidence and false positives differently. Toggling methods midstream changes how error is accounted for. The math doesn’t “break,” but your interpretation of what the numbers mean may break.

- Sequential Frequentist results are sensitive to stopping points, while Bayesian posteriors will happily update at any stage. Switching engines means accepting that each view comes with its own lens.

Some practitioners see this as cherry-picking. Others see it as a way to explore how different philosophies interpret the same data.

The key is to recognize that you’re changing not the outcome itself, but the story you’re telling about it.

Our Take

Use Convert’s flexibility thoughtfully. If your goal is clean, reproducible inference, pick your engine at the start and stick with it.

If your goal is to learn how different models frame uncertainty, you can absolutely experiment with both. The numbers won’t invalidate each other; rather, they’ll simply reflect different philosophies.

Final Thoughts

There isn’t a single “right” engine for every A/B test. Frequentist offers rigor and error control, Bayesian offers probabilities that are easier to act on and explain. Each shines in different contexts.

What matters is choosing upfront, sticking to the rules of that method, and interpreting results honestly. Convert gives you both engines, so you can match the model to your business context instead of being boxed in by a vendor’s philosophy.

FAQs: Frequentist vs Bayesian Stats in A/B Testing

1. What is the fundamental difference between Frequentist and Bayesian approaches in A/B testing?

Frequentist methods treat probability as the long-run frequency of outcomes. Parameters (like conversion rates) are fixed but unknown, and results are framed in terms of error control across repeated trials.

Meanwhile, Bayesian methods treat probability as a degree of belief. Parameters are modeled as distributions, priors reflect what you know before the test, and posteriors update those beliefs as data comes in.

2. When should I choose Frequentist over Bayesian for A/B testing?

Use Frequentist when you need strict error control, large-sample rigor, or established procedures (like regulated industries, pricing tests, or small MDEs). Choose it if you want transparent thresholds and reproducibility.

3. How does sample size affect the choice between Frequentist and Bayesian methods?

Frequentist tests require pre-calculated sample sizes based on MDE, alpha, and power. If traffic is low, they risk being underpowered.

Bayesian methods (and Sequential Frequentist testing) allow continuous monitoring, but they don’t eliminate the need for enough data. Small effects still require large samples, no matter the framework.

4. Which method is easier for business stakeholders to interpret?

Bayesian framing is usually more intuitive: “There’s a 92% chance variant B is better.”

Frequentist outputs (p-values and confidence intervals) are harder to explain and can be misinterpreted by stakeholders who aren’t familiar with the stats.

That said, Bayesian metrics, such as “probability to be best,” can behave counterintuitively in certain contexts (e.g., A/A tests).

5. Do Bayesian methods really solve the “peeking” problem?

Not entirely. While Bayesian inference updates continuously, the stopping rule (when you decide to look and stop) is still part of the data-generating process. Ignoring it can bias results.

Sequential Frequentist methods like confidence sequences or alpha-spending designs offer rigorous frameworks for controlled peeking.

6. Which approach is safer for small businesses with limited traffic?

Neither approach overcomes the core limitation: too little data means inconclusive tests.

Bayesian methods can feel more usable with small samples if you have credible priors, but for very low-traffic sites, A/B testing may not be worth the effort. In that case, focus on qualitative research or driving more traffic first.

7. Are there any ethical concerns or practical limitations with Bayesian methods?

Yes. Subjective priors can tilt results if chosen poorly, and many tools don’t disclose the priors they’re using. That lack of transparency is risky. Bayesian models can also be computationally intensive and are best understood as decision-making tools, not objective measures of truth.

In Sequential settings, ignoring stopping rules still biases results, despite vendor claims.

8. What is Convert Experiences’ statistical model for A/B testing?

Convert supports all three statistical modes:

- Fixed-horizon Frequentist: Pre-set sample sizes with classic significance testing.

- Sequential Frequentist: Confidence sequences that allow safe monitoring and peeking without inflating false positives.

- Bayesian: Probability-to-be-best framing with configurable decision thresholds (90%, 95%, 99%).

Data is collected once, and you can re-interpret the same experiment in both Bayesian and Frequentist frameworks. Convert is one of the few A/B testing platforms that offers this flexibility, so you’re never locked into a single statistical philosophy.

Written By

Uwemedimo Usa

Edited By

Carmen Apostu

Fact-Checked By

Karim Naufal