How to Do A/B Testing in Marketing (A Comic Strip Guide)

Is your marketing not performing the way you want it to?

Maybe you’re pushing out as much as you can, but you only have so much time and budget and you need more impact? Or maybe you have an ad budget, but competition for ad space keeps rising and paid traffic is getting harder and harder to win?

You can make your marketing far more effective with A/B testing!

Whether you’re a small business or an enterprise, A/B testing can help you get ‘more’ from all of your marketing efforts: more lift, more conversions, more impact.

In this article, we’re going to look at 5 popular marketing channels and how you can test and improve your results from each of them.

What Is A/B Testing?

A/B testing is the process of taking one ‘event’ that your audience experiences, measuring its performance, then testing a variation of that experience to see which performs best.

This event could be visitors interacting with your sales page, but it could just as easily be them clicking an ad or opening and reading an email. Whatever the event and goal, you can test variations to try to improve the result.

Even if the new version gets just a 1% improvement, that adds up and increases your performance. (Some tests see leaps of 10-50%.)

Every test you run helps you to learn more and improve, or to avoid potential failures. This is why it’s worth testing any element of your business to find improvements, especially your marketing…

What Is A/B Testing in Digital Marketing?

Traditionally, you might think of A/B testing as simply testing elements of your website, such as the CTA on a button, to see which version gets the most clicks.

However, this is just the tip of the iceberg. The reality is that you can test any event, channel, or platform that interacts with your audience and try to improve its performance.

- Run ads? You can run multiple A/B tests to see which version gets the highest CTR, testing everything from the image or video and the copy to the placement and even the target audience.

- Have a landing page? You can test how well that traffic converts on the page: how far visitors read, whether they bounce, where they get stuck, whether they convert, and how to improve it. (You can even apply these principles to your content marketing.)

- Send emails? You can test subject lines for open rates, send times, CTAs for clicks, and more.

There is no digital marketing channel that can’t be tested and improved upon. You may need a different tool, or you may even have these features built into what you already use and not be aware of them.

The key to a successful digital marketing campaign is not just funnels, audience, and messaging. It’s split testing and improving those elements again and again.

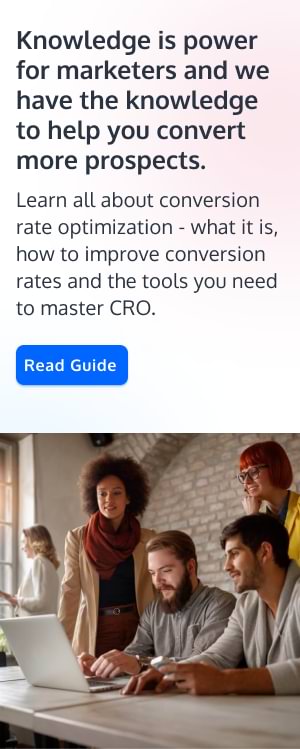

A/B Testing? Isn’t That to Increase Conversions?

Yes and no. A/B testing is just one method of ‘experimentation’ that’s designed to help you improve your interactions with your audience.

In reality, it belongs to a larger methodology called CRO (Conversion Rate Optimization). Because of the name, it’s easy to think that CRO is just about conversions, but conversions are really a byproduct of scientifically testing and improving all the elements that make the customer want to buy.

CRO is about:

- Improving the user experience,

- Removing any flaws in the system that affect their experience or hinder their ability to move forward,

- Understanding your audience so you can provide the best offers and products,

- Using the audience’s language and interactions to connect with what they want,

- Improving processes to help them make their buying decision.

Sure, there are direct tests that can help get more conversions such as running tests on your sales page, but by improving these core elements you’ll see more sales simply because you’re affecting and improving the entire sales and marketing process.

How Can A/B Testing Benefit a Marketing Campaign?

A/B testing can be used in almost any element of your marketing strategy. It doesn’t matter whether it’s a new launch or an ongoing campaign: there are tests you can run to improve almost every point where it interacts with your audience.

Let’s say you have a new product.

You can use A/B testing and CRO before launch to:

- Test the product with the ideal audience in advance to make sure it’s what they want,

- Test the language, pricing, and imagery used for the offer before you push to the public, so you can find which versions get the most actions,

- Run QA tests to find common sticking points in the buying experience that stop customers from buying or using your product, and then create assets to help remove those issues.

Then, once the product is live, you can improve further by:

- Running tests on the product page design, layout, and copy to increase lift,

- Running tests on traffic channels to find the best performing communication,

- Running tests on your content assets to make them perform better (clicks, rankings, leads),

- Running tests on your emails to get higher open rates and more clicks,

- Continuing to run tests on each of these elements for even further improvements,

- Running marketing A/B tests on different platforms,

- Or running marketing A/B tests with new audience groups…

… and so much more!

A common misconception is that A/B testing is just for the landing page, but imagine if you improve the entire process by just 1% at each stage of the buyer’s journey? More lift at the front end, more touchpoints hit, more leads, more sales, higher average sales, and more repeat sales?
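Because each stage of the journey feeds the next, small per-stage gains multiply rather than simply add. Here’s a minimal Python sketch of that arithmetic. It assumes, as a simplification, that end-to-end conversion is just the product of each stage’s conversion rate and that every stage improves by the same relative amount; real funnels are messier, and the function name is purely illustrative.

```python
def compounded_lift(stage_lift: float, stages: int) -> float:
    """Overall relative lift when each of `stages` funnel steps improves by `stage_lift`.

    `stage_lift` is a fraction (0.01 means +1%). Assumes end-to-end conversion
    is the product of the per-stage rates, so the improvements multiply.
    """
    return (1 + stage_lift) ** stages - 1


if __name__ == "__main__":
    # e.g. ad click -> landing page -> lead -> sale -> repeat sale (5 stages)
    for n in (1, 3, 5):
        print(f"{n} stage(s) improved by 1%: {compounded_lift(0.01, n):.2%} overall lift")
```

With five stages each improved by 1%, the end-to-end lift comes out at about 5.1%, slightly more than the 5% you’d get by simply adding the gains.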

All of this can be tested and improved, but it’s not just about wins either. It’s just as important to find out what doesn’t work so that you don’t take action on things that can harm your business.

In 2017, Pepsi became frustrated with how long agencies took to create marketing assets and decided to form an in-house agency to produce them faster.

The result?

They created an ad trivializing the Black Lives Matter movement and had to pull the ad off the air in 48 hours…

Imagine if they had tested the idea with a focus group of their customers, rather than just their own staff, before pushing it live? (Heck, before even creating the ad!)

A/B Testing KPIs That Marketers Should Care About

The KPIs depend on which audience interaction you’re testing and what your goal for that interaction is. It could be lift, time on page, bounce rate, CTR, CVR, or average sale value, and it will change based on what you’re testing. (Is it content? An ad? A web page?)

The key things to remember are:

- Have a singular goal that you want to improve that’s relevant to that particular audience interaction and use that as the KPI for that event,

- Measure its current performance,

- Hypothesize how you can improve it,

- Test and measure that event against the current version,

- And then measure how it affects your end goal or ‘guardrail metrics’.

Why test it against your end goal?

Let’s say that you run an ad and find that it gets far more clicks to your landing page, but far fewer sales from that new audience. If all you focused on was the ad’s CTR, it would look like a success, but in reality you might be losing money.
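To make the point concrete, here’s a small Python sketch of a ‘primary metric plus guardrail’ check. The ad numbers are invented purely for illustration, `AdResult` and `decide` are hypothetical names, and the sketch assumes both versions received the same number of impressions for the same spend.

```python
from dataclasses import dataclass


@dataclass
class AdResult:
    impressions: int
    clicks: int
    revenue: float  # revenue attributed to this ad version

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions

    @property
    def revenue_per_1k_impressions(self) -> float:
        return self.revenue / self.impressions * 1000


def decide(control: AdResult, variant: AdResult) -> str:
    """Ship only if the primary metric (CTR) improves AND the guardrail (revenue) holds."""
    if variant.ctr <= control.ctr:
        return "keep control: CTR did not improve"
    if variant.revenue_per_1k_impressions < control.revenue_per_1k_impressions:
        return "keep control: CTR improved but revenue (guardrail) dropped"
    return "ship variant"


# Hypothetical numbers for illustration; assumes equal spend on both versions
control = AdResult(impressions=10_000, clicks=100, revenue=250.0)
variant = AdResult(impressions=10_000, clicks=200, revenue=100.0)
print(f"CTR: {control.ctr:.1%} -> {variant.ctr:.1%}")
print(decide(control, variant))
```

Here the variant doubles CTR, yet revenue per 1,000 impressions falls from $25 to $10, so the guardrail check keeps the control.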

5 A/B Testing Examples that Will Blow Your Socks Off

Let’s have a look at some examples of 5 unique A/B testing case studies, set across the 4 core marketing channels that we’re going to cover in this article.

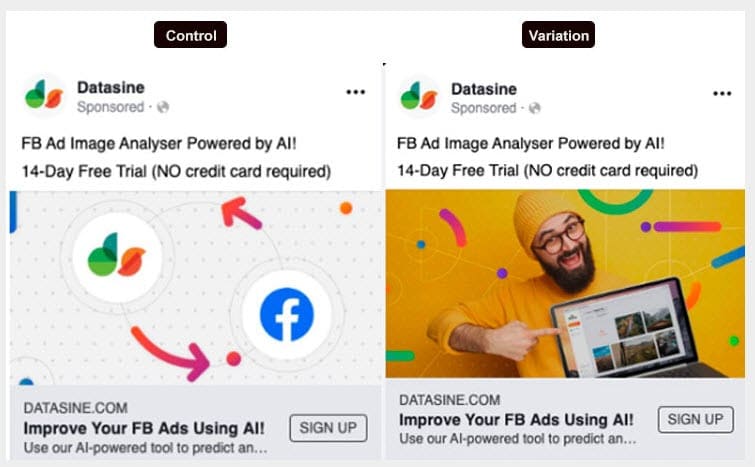

- Datasine saw a 57.48% increase in CTR by A/B testing the image on their paid Facebook ads.

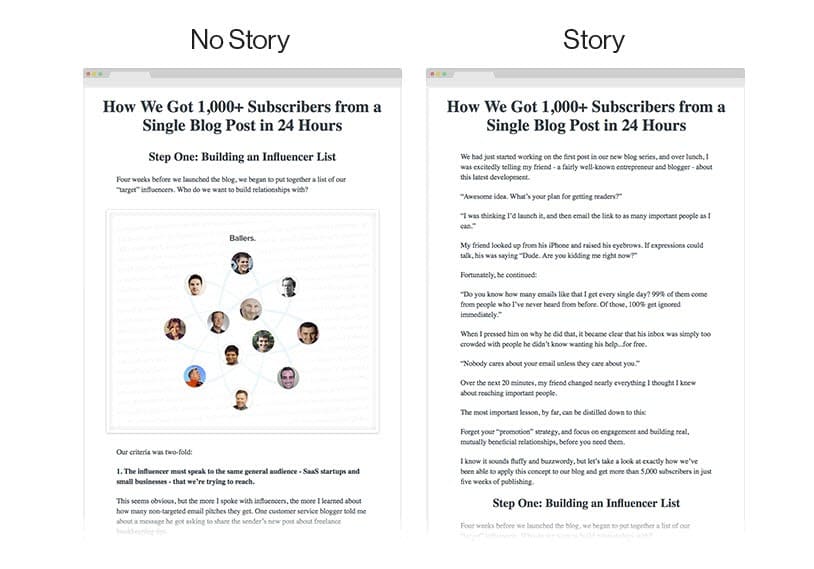

- GrooveHQ saw a 296% increase in full read-through and a 520% increase in time on page by testing the introduction to their article.

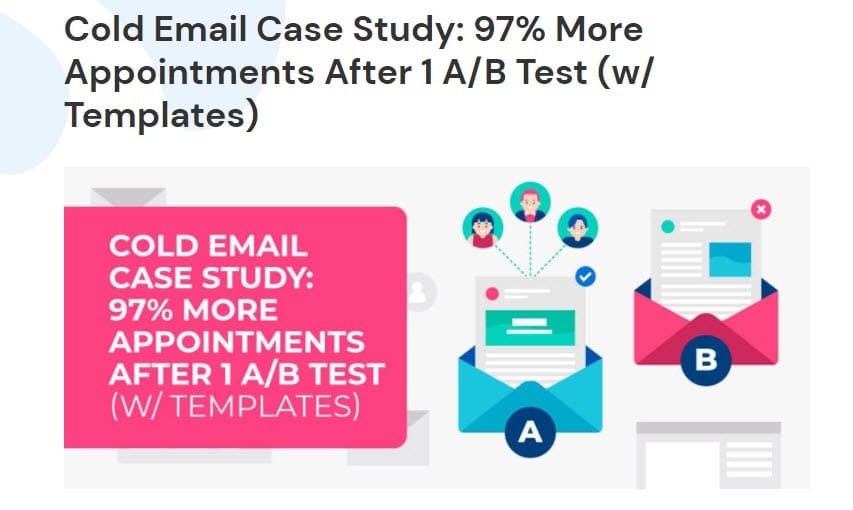

- Mailshake saw a 97% increase in email replies after A/B testing the content in their outreach emails.

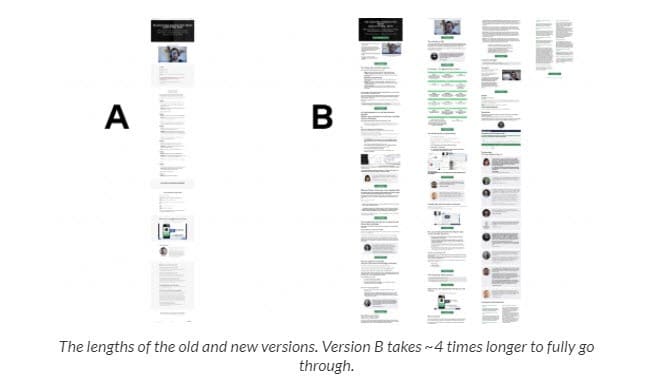

- Data36.com saw a 96% lift in conversions on their landing page by listening to their audience and making the page longer to give more context and remove objections. (This took the reading time from 4 minutes to 16+ minutes.)

- AmpMyContent saw a 127.4% increase in leads when they implemented 2-stage pop-up forms.

Now, let’s get into how to run A/B tests on these platforms and the common mistakes to look out for…

11 A/B Testing Myths and Mistakes that Marketers Should Avoid

We’ve hinted at a few of these already, but let’s break down each myth and why it’s wrong, so you can sidestep the major mistakes that most people make.

A/B Testing = Money

Not at all! In general, CRO and testing are all about understanding your audience and improving their experience. Yes, you can run tests on specific elements that will immediately impact conversions and revenue, but the real goal is to understand your audience better.

Revenue is not the goal of A/B testing. It’s simply a byproduct of improving our audience’s experience.

A Test Is Only as Good as Its Effect on Your Conversion Rate!

Not every test is about conversions. At least, not a traditional ‘conversion’ anyway.

Sometimes, we simply want to learn what’s working and what isn’t. Other times, we want our audience to take an action that we might not classify as a conversion.

Ideas and Opinions Beat Data

We can have great ideas about what works, but we need to test and trust the data instead.

Not every test will succeed. Sometimes the variant you think is weakest performs best. Sometimes it’s the design.

It doesn’t matter whether it’s your personal opinion or the HiPPO (the Highest Paid Person’s Opinion); you need to test and trust the data. That’s how you move forward.

If a Test Doesn’t Win, Then CRO Is Not for You

CRO and testing are not about revenue or conversions, but about understanding your audience.

Most of the tests you run will fail (a figure of around 90% is often quoted). We’re finding what doesn’t work and getting insights into what does. Even when we have a winner, it can always be improved on. We find the reason why, dive deeper, test again, and get more lift.

If you want your site and your marketing to improve, you have to follow the scientific method. Embrace the losses instead of trying to avoid them!

Speed of Tests Is the Most Important Factor

If we know that 90% of tests fail, surely we should run as many tests as possible, right?

Yes, we should aim to run more tests, but not at the expense of learning from each one.

A test where one version fails and the other wins is only helpful if we understand why it won and why it failed. Rushing off to run another test without that insight is wasting time.

A/B Testing Gets Results, Fast

Actually, it doesn’t. Even if you have a lot of traffic and can see results quickly, you still need to consider other factors:

- Can we trust this test will continue to perform this way after we choose the winner?

- Are there any other external factors that may have affected the test?

Most tests should run for at least one full sales cycle (often about a month, but it depends on your business).

This is why it’s important to cut downtime between tests, but it’s also why we should analyze and learn before rushing into the next month-long test.

Even if you have the traffic to hit statistical significance quickly, be sure to run your test for long enough to get an accurate view of your audience’s behavior.

You Need Huge Traffic to Be Able to Test

To be able to trust your test results, you need to have enough data to support your results, OR your test needs to be performing incredibly well against the original.

Without getting into the math of it too much, it works like this:

- If you’re seeing a very large difference in results consistently, then it requires fewer data points to trust them.

- But if you’re only seeing a smaller change in the test results, then you need more traffic to be able to trust the results.

To trust a small change we need more data; to trust a larger change we need less. As a rule of thumb, this means that you need around 10,000 visitors to your particular test or around 500 conversion events, but the real number depends on your baseline conversion rate and the size of the change you’re trying to detect.

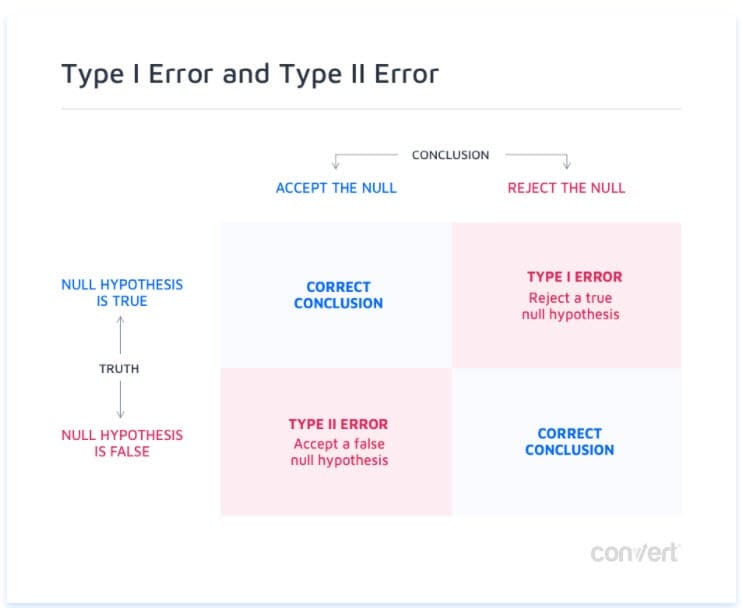

Otherwise, we can get false positives and false negatives.

That said, you can let a test run for as long as it takes to reach that sample size. It just might take you longer to get there.
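If you’d like to see where figures like these come from, the following Python sketch uses the standard normal-approximation sample-size formula for comparing two conversion rates. It’s an approximation rather than what any particular testing tool necessarily uses, the function name is hypothetical, and the defaults (95% confidence, 80% power) are common conventions, not requirements.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect a relative lift.

    baseline:     current conversion rate (0.05 = 5%)
    relative_mde: smallest relative lift worth detecting (0.20 = +20%)
    alpha:        false-positive rate (0.05 = 95% confidence, two-sided)
    power:        chance of detecting the lift if it really exists
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    for lift in (0.10, 0.20, 0.50):
        n = sample_size_per_variant(baseline=0.05, relative_mde=lift)
        print(f"+{lift:.0%} lift on a 5% baseline: ~{n:,} visitors per version")
```

On a 5% baseline, detecting a +10% lift needs over 31,000 visitors per version, a +20% lift needs a little over 8,000, and a +50% lift needs fewer than 1,500, which is the ‘bigger change, less data’ rule in numbers.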

What Worked for Others Will Work for You Too

Nope! Remember that we need to test everything.

We can use other companies’ tests and best practices to find test ideas, but what worked for one site may not exactly work for you.

Instead of copying them outright, use their test ideas to come up with your own.

Test, analyze, improve.

You Should Test Everything

You don’t need to test absolutely everything on your site. Instead, look at testing the things that you think will have the biggest impact and work through them first.

Testing CTA button colors is fine, but only once the bigger changes have stopped producing lift for the version you have.

You Can Only Change One Thing at a Time

Most people think that when it comes to A/B testing, you can only ever test one thing at a time, but that’s not strictly true. Instead, you can run what’s called a radical test. Rather than testing just a new headline, you could test a radical new design that changes everything on the page all at once.

Sure, you can’t tell right away which change made the impact, but you can test tweaking those elements afterward to see which ones change the audience’s response.

Why would we do this?

Well, when a page or element has a low conversion rate, tweaking one thing at a time for a small lift will take a long time. Running a radical test can help us to find that big change that might see a huge return.

The Winning Result Will Always Look Beautiful

As with opinions, you need to test design and see what works. I’ve run ads where the worst-looking design outperformed the slickest one. Same for landing pages.

You need to test and find what works for you!

Top A/B Testing Mistakes Digital Marketers Should Look Out For

Here’s a quick list of what to do when running tests to avoid those common mistakes:

- Always have a single goal for a test. A lack of focus means a lack of results.

- Measure that event’s current performance. You need to know how it’s working now before you can improve it.

- Form a hypothesis about why the event performs the way it does, then create a variation that addresses it. The key is to understand why it’s happening so you can resolve it.

- Don’t be scared of running QA and customer research. Watch screen recordings and, if possible, interview customers to find out what’s happening. Our guesses can be quite different from what’s going on in the customer’s mind.

- Set up the test and check that everything works and displays correctly, on multiple devices, before you launch anything. So many tests fail because people hit go too soon.

- Let the test run long enough to collect enough data and conversions, and for at least a whole sales cycle, so that you can trust your results.

- Don’t change anything mid-test.

- Always analyze the data. Opinions don’t matter, results do.

- Most tests will fail. Some will win. Either way, learn why and improve! Even if you have a winner, see if you can improve it further.

- Always keep track of your tests to see what you’ve tested so far, what worked, what failed, and how far you’ve improved!

A Short Primer on A/B Testing Statistics for Marketers

So let’s talk about statistics. We cover this topic in greater detail in a separate guide, but it’s important to understand the basic concepts if you want to run A/B tests in your marketing. Otherwise, you might get winning tests that you think are failures or, worse, failing tests that you push live.

The goal of any test is to both learn and improve. The thing is we need to be able to trust the data from our test. Is it giving us accurate results or is it a fluke? Also, how accurate is it? How much can we trust that it will continue to perform this way?

I’m going to be honest with you, some of the concepts and language in statistics are a little dry and overly complicated, so let’s break them down nice and simple so you can understand what they mean and why they are important.

- Sample: This refers to the data source for your test. Usually, this is the segment of your audience visiting a specific page or seeing a specific ad.

- Traffic distribution: This refers to how the traffic from your sample audience is split between versions in your test. Ideally, you want an equal split, so that version A gets 50% of the traffic and version B gets the other 50%. An even split gets you the most reliable result from a given amount of traffic.

- Minimum Detectable Effect (MDE): This refers to the sensitivity of your test. It’s a value we set before the test starts: the smallest improvement that we want to be able to detect.

- Hypothesis: Simply put, this is the idea that we have for our test. Our goal is to test that theory and hopefully see improvements.

- Statistical significance: A result is statistically significant when the difference you measured would be unlikely to appear by random chance alone if the two versions actually performed the same. Our goal is to run tests with enough data, and for long enough, to be confident that a result isn’t a fluke.

- Statistical confidence: The more visitors your test gets, the more confident you can be in the results and the less likely you are to act on a false or random result. Most tests are set to run at a confidence level of 95%. This means that if there were really no difference between the versions, there would be only a 5% chance of the test declaring a winner anyway.

- Statistical power: This refers to the probability that your test will detect an effect when there really is one (at least as large as your MDE). Tests are commonly designed with 80% power.
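To tie these terms together, here is a minimal Python sketch of a two-proportion z-test, one common way to check whether a difference in conversion rates is statistically significant. Testing tools may use other methods (some use Bayesian or sequential statistics), the function name is hypothetical, and the visitor and conversion counts in the example are made up.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test comparing conversion rates of A (control) and B (variant).

    Returns (z, p_value). Uses the pooled conversion rate under the null
    hypothesis that both versions convert equally well.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 10,000 visitors per version
    z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}, significant at 95% confidence: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% confidence level described above; a 97% confidence setting would simply use 0.03 as the cut-off instead.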

The Different Types of Tests Marketers Can Run and Their Impact on Traffic, Engagement & Conversions

Although we’re talking about A/B tests in this guide, there are a few other types of tests you can use, so let’s break them down quickly.

A/B Tests

This is the test you will use 99% of the time. You’re simply running one variation against your current ‘control’ to see if it improves.

Because we’re only testing one variant, it requires less traffic than other tests and gets results quickly without a lot of setup. There are a few different types of A/B testing processes, but almost every other test is some kind of variation of this idea.

Split Tests

A split test is just another name for an A/B test! We’re basically saying that we’re going to ‘split the traffic on this test’ equally, between the original (a) and the new version (b).
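Under the hood, a testing tool has to split traffic consistently, so that a returning visitor keeps seeing the same version. A common way to do this is hash-based bucketing, sketched in Python below. The function and experiment names are hypothetical, and this isn’t necessarily how Convert or any other tool implements its split.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, experiment_id: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically assign a visitor to one version of an experiment.

    Hashing the visitor ID (salted with the experiment ID) spreads traffic
    roughly evenly across versions and guarantees a returning visitor always
    sees the same version.
    """
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    split = Counter(assign_variant(f"visitor-{i}", "hero-image-test") for i in range(10_000))
    print(split)  # roughly 5,000 visitors in each version
```

Salting with the experiment ID means the same visitor can land in different groups across different experiments, which keeps one test’s split from lining up with another’s.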

A/B/n Tests

In an A/B/n test, you’ll have multiple variants tested against the control.

Let’s say you have a headline that you want to test but instead of running an A/B test and running just one variation, you could run an A/B/n test and test as many different variations of that headline as you want.

The limits here are your traffic and how long you’re willing to wait for results. Because each variation needs its own sample of around 10,000 visitors, every variation you add makes the test run longer.
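You can get a rough feel for how extra variations stretch a test with a back-of-the-envelope calculation. This Python sketch assumes traffic is split evenly, takes the ~10,000-visitors-per-version rule of thumb as an input, and applies a 28-day floor as a stand-in for one sales cycle; all three are assumptions to replace with your own numbers, and the function name is hypothetical.

```python
from math import ceil


def estimate_test_days(visitors_per_version: int, num_versions: int,
                       daily_visitors: int, min_days: int = 28) -> int:
    """Rough length of an A/B/n test, assuming traffic is split evenly.

    num_versions counts the control too (A/B = 2, A/B/C = 3, ...).
    min_days is a floor standing in for one full sales cycle.
    """
    days_for_sample = ceil(visitors_per_version * num_versions / daily_visitors)
    return max(days_for_sample, min_days)


if __name__ == "__main__":
    # Hypothetical page getting 1,000 visitors a day
    for versions in range(2, 6):
        days = estimate_test_days(10_000, versions, daily_visitors=1_000)
        print(f"{versions} versions: about {days} days")
```

At 1,000 visitors a day, a simple A/B test still runs for the 28-day floor, but a control plus four variations needs around 50 days.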

Multivariate Tests

Multivariate tests require a very high volume of traffic, but they allow you to test multiple variations and combinations at once.

Let’s say you wanted to improve your home page and you wanted to test your headline and the hero shot image. A multivariate test allows you to not only run every single headline variation at once but also every image variation so that you find the best combination of elements across different versions of your webpage.

(Perhaps headline 1 works best on its own with the current image, but headline 3 works best with image 4 for the most lift. Without running a multivariate test, you may have ignored headline 3 entirely.)

There is a drawback, of course. With all these options and combinations, you can suddenly have 30 or more versions running at once. And because each combination needs enough traffic for you to trust its results, you would need an incredibly high volume of traffic before you could trust the data.

Multivariate tests allow you to find the best winning combination, but I would steer clear unless you’re seeing 100,000 visitors a month to your test page or higher.
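The number of versions in a multivariate test is the product of the options for each element, which is why traffic requirements explode so quickly. The Python sketch below enumerates every headline and image pairing; the five headlines and six images are hypothetical, and the 10,000-visitors-per-version figure is the rough rule of thumb used earlier in this guide.

```python
from itertools import product

headlines = [f"Headline {i}" for i in range(1, 6)]   # 5 hypothetical headlines
hero_images = [f"Image {i}" for i in range(1, 7)]    # 6 hypothetical hero images

# Every headline paired with every image is its own version of the page
combinations = list(product(headlines, hero_images))
visitors_needed = len(combinations) * 10_000         # rule of thumb per version

print(f"{len(combinations)} versions, ~{visitors_needed:,} visitors needed")
```

Five headlines and six images already give 30 versions and roughly 300,000 visitors, which is why multivariate tests only make sense on very high-traffic pages.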

Multipage Tests

Multipage testing is the process of testing multiple connecting pages all at once, to see how they impact each other.

Let’s say that you have a product library, a product page, and a checkout page. You could in theory run A/B tests on each of these pages all at the same time.

The issue here is that the result of changing one page can also affect the website visitors that go through to the next and so on, causing a chain reaction.

What if the first page causes fewer people to click through? What if changes to the product description confuse them?

This would skew the results on the checkout page, which is why multipage tests are rarely used. Instead, most testers focus on running a single A/B test on the first page and finishing it before testing the next page.

As you can see, there are multiple test types but the majority of the time you’ll be running A/B or A/B/n tests simply because of the speed to implement and the traffic and conversion requirements for each.

A/B Testing & Privacy: A Few Things Marketers Should Remember When Gathering Data

There have been a lot of recent changes in privacy and customer data protection, so if you’re running any marketing campaigns or tests that collect user data, you need to be aware of the major changes, especially if you’re tracking user data in your analytics…

GDPR, ePrivacy & Google Analytics

We’ve written separate guides on how to implement this, but here’s a quick overview.

Make sure to meet GDPR and ePrivacy requirements in your analytics by:

- Eliminating URLs and query parameters that pass personal data, such as a location or email address.

- Anonymizing user IP addresses.

- Getting consent for tracking cookies.

- Eliminating non-compliant cookies.

- Securing your GA account from 3rd parties. (Fines are high for data breaches!)
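If you also handle visitor data in your own systems (server logs, a custom tracking endpoint, data exports), the first two ideas can be applied there too. Here’s a generic Python sketch using only the standard library. The set of personal-data parameters is a hypothetical example, the truncation lengths (last octet for IPv4, last 80 bits for IPv6) follow a common convention, and none of this replaces your analytics tool’s own privacy settings.

```python
import ipaddress
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: query parameters that carry personal data on your site
PII_PARAMS = {"email", "name", "phone", "location"}


def anonymize_ip(ip: str) -> str:
    """Zero the last octet of an IPv4 address (last 80 bits of IPv6)."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


def strip_pii_params(url: str) -> str:
    """Drop query parameters that could identify a person, keep the rest."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in PII_PARAMS]
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    print(anonymize_ip("203.0.113.57"))
    print(strip_pii_params("https://example.com/thanks?utm_source=newsletter&email=jane%40example.com"))
```

The first call returns `203.0.113.0`; the second keeps the `utm_source` parameter for attribution but drops the email address.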

Now that your analytics is meeting requirements, let’s get into some A/B testing…

There are 4 core channels that I want to look at improving with you below:

- Paid traffic (specifically Facebook ads)

- Social media organic traffic

- Email marketing, and

- Content marketing.

Some of these platforms have their own built-in A/B testing tools or you can find providers that offer this functionality.

How to A/B Test Landing Pages?

Let’s start with the traditional marketing channel that you probably think of when we talk about A/B testing your marketing assets: landing pages!

It’s important to test and improve your landing pages.

Why?

Because of 2 core things:

- Every visitor to a landing page usually has high intent i.e. they are a very warm audience that you can get conversions from,

- And each page will always have a direct and measurable call to action for your audience to take.

Because of this, any lift we can get from this audience is almost always a direct lift in immediate or downstream ROI and profit, whether from more subscribers, trials, or sales.

This is why your landing page is one of the first things you should look at testing and improving in your marketing campaigns (assuming you’re getting enough traffic to the page).

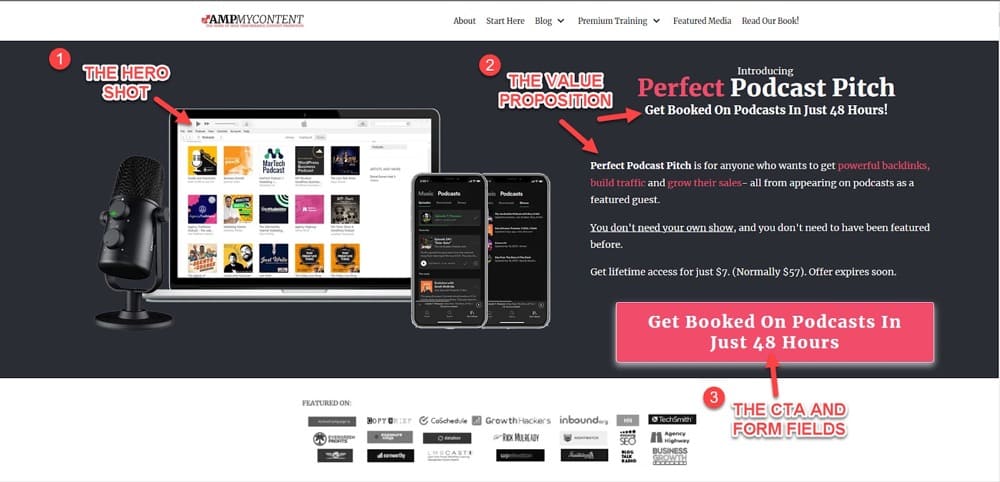

With this in mind, let’s look at 3 simple ways that you can improve your own landing pages:

- Testing the hero shot so you get their attention,

- Testing the value proposition that calls out to your audience and hooks them on your offer,

- And testing the form fields that they fill out. These can be a source of resistance so streamlining this helps immediately impact landing page performance.

The good news?

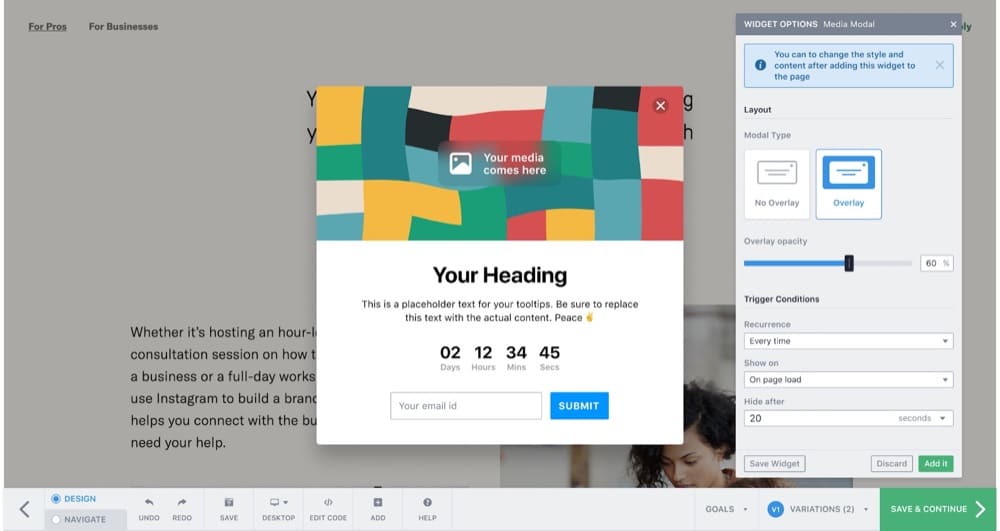

A/B testing these elements with a testing tool is incredibly easy.

Why?

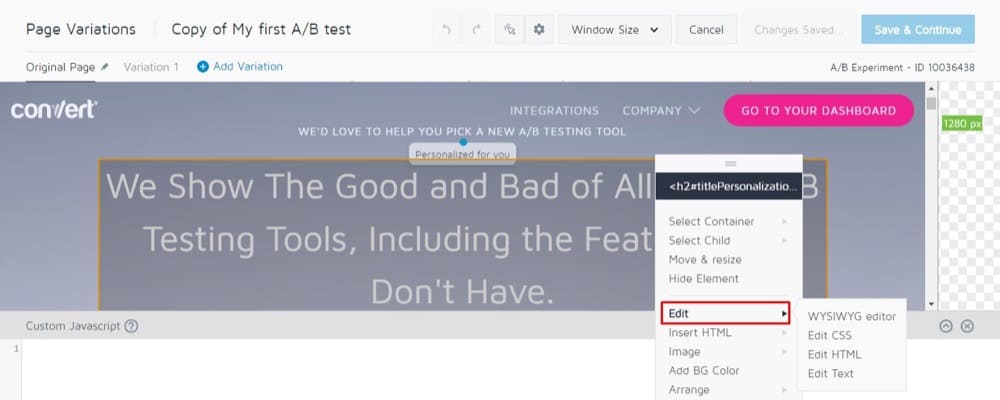

Not only are the tests super simple to set up, but because of how these tools work, you can make edits natively in the app without changing anything on the page itself.

That’s right. You can run tests without even needing a designer to make any changes to your page!

You can simply load the page URL in the app and then drag, drop, and edit whichever element you want to test. Then, once you’ve finished setting up your test, the app displays that change to your audience on the test page, without you ever needing to edit the page itself.

Now, let’s walk you through 3 recommended changes that you can test on your own landing pages.

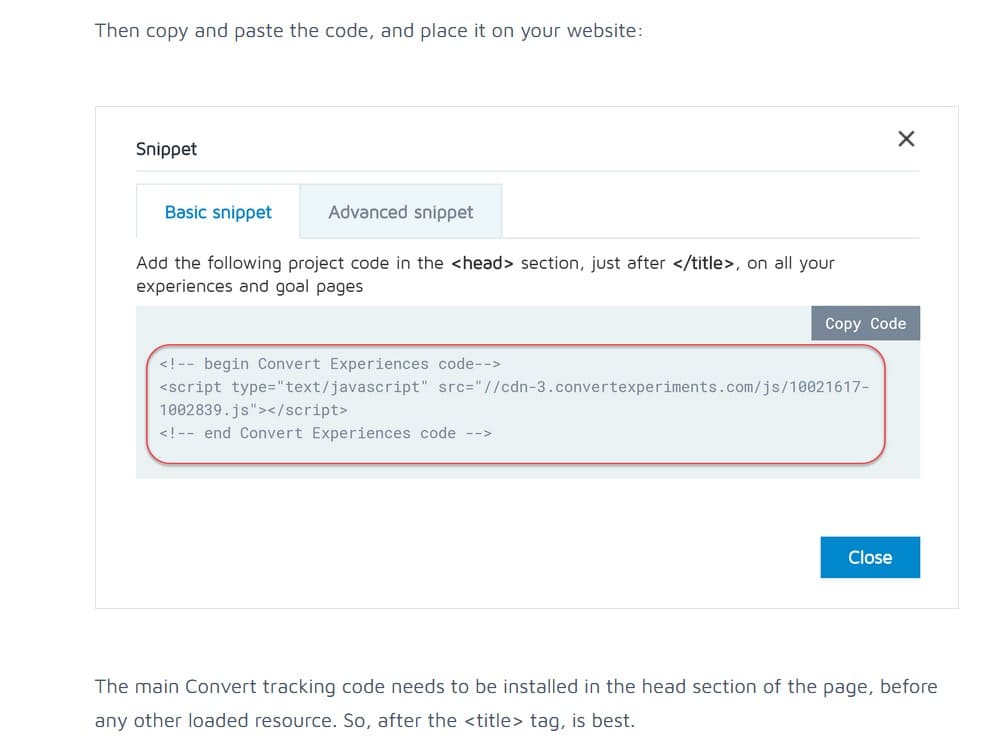

Install An A/B Testing Tool

Unless you’re using a landing page builder with built-in testing features, you’re going to need an A/B testing tool to run tests.

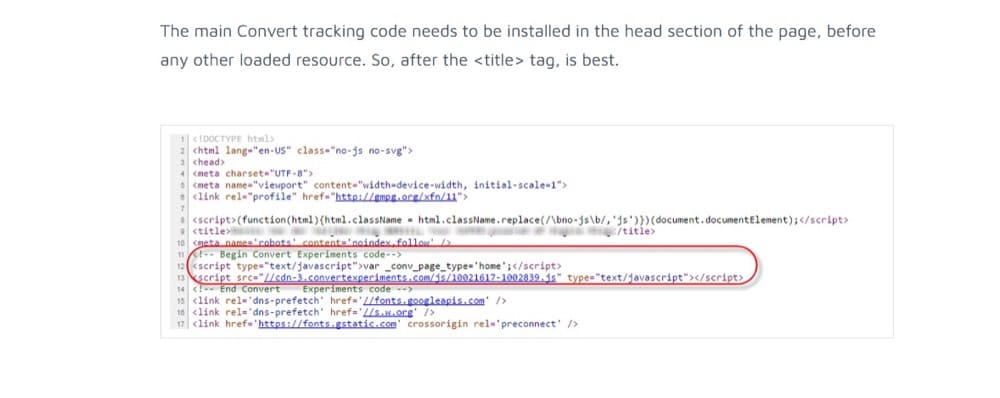

You can sign up for a free trial of the Convert Experiences app. Once you’ve created a trial account, go ahead and install the code on your website. It’s super easy to do; you install it exactly like you would a Facebook Pixel:

You simply click on the install code to copy it.

Then paste it into your site: in a dedicated section of your CMS (some have a place to paste code like this), in your theme header, or via Google Tag Manager.

Now that it’s installed, you can load up the app and start making changes to your landing page and running tests…

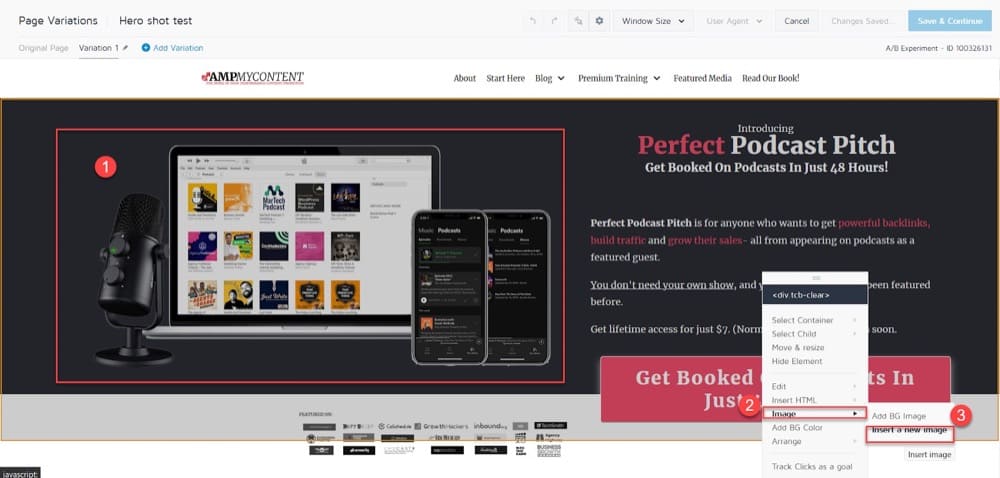

A/B Testing Your Landing Page ‘Hero’ Images

The first thing we recommend testing is the hero image on your landing page.

This is the primary image at the top of the page, designed to grab the audience’s attention as quickly as possible.

We test this because if we can’t get their attention then they might not read our value prop or CTA.

Now, there are a few things you could do:

- You could try moving the hero shot that you have. (Perhaps it’s too low down the page?)

- You could try testing new images and angles. Perhaps instead of the user in the photo you show the product or end goal they achieve instead?

It’s hard to know which will work best, which is why we test this with our testing tool. We can try out each version and see which is the winner.

So let’s show you how easy this is to do.

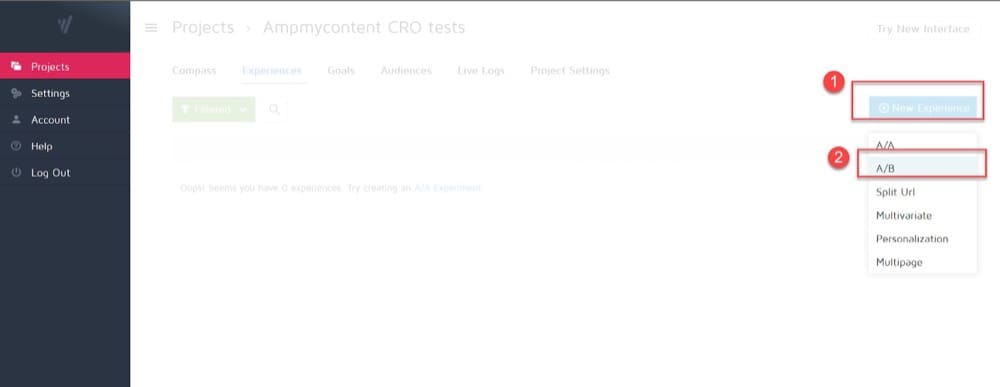

Once you have the Convert Experiences app loaded up, you’re going to create a new project to hold all your tests.

Then, you’re going to create your first test or ‘experience’.

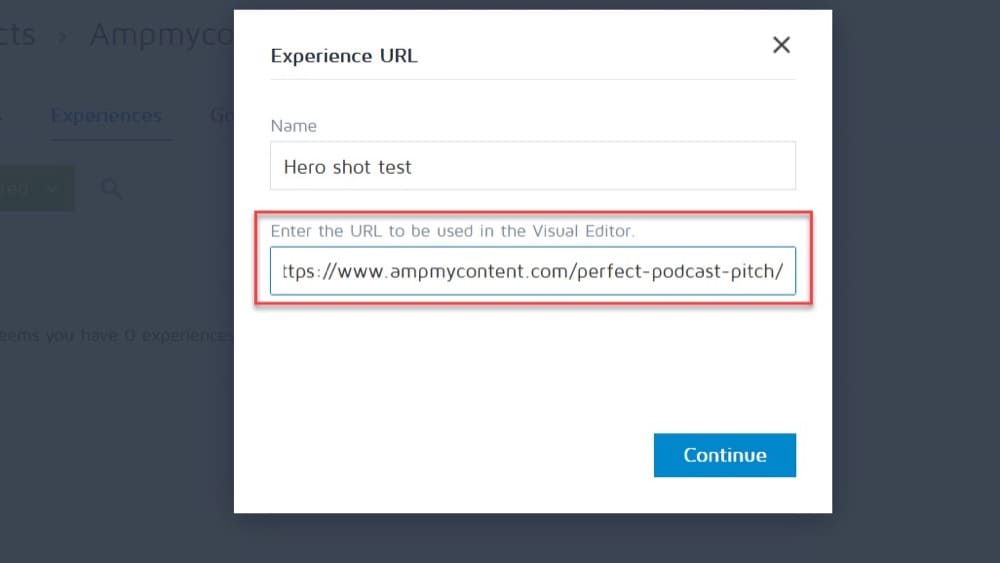

Select ‘a/b’ test, and then fill out the information for your test.

Make sure you add the URL of the page you want to test here. This will then load up the page so you can make changes to it natively inside the Convert app.

Note:

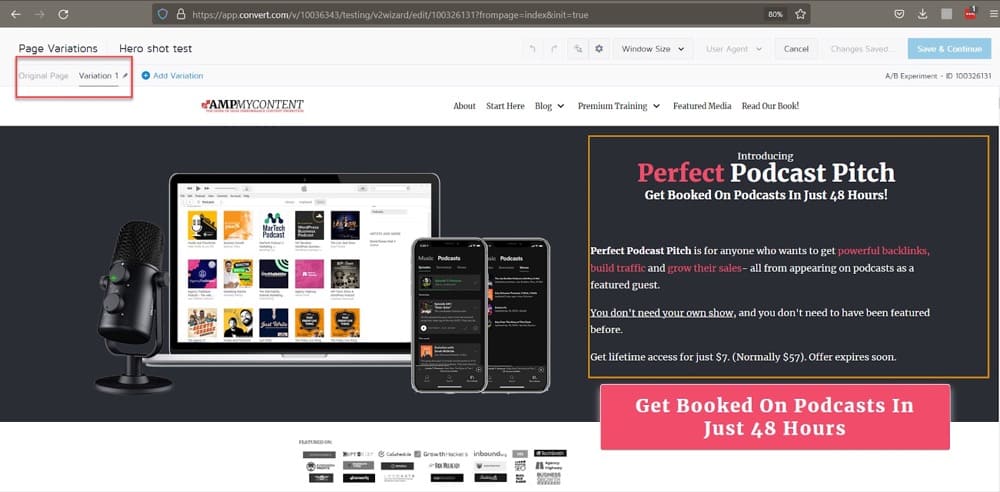

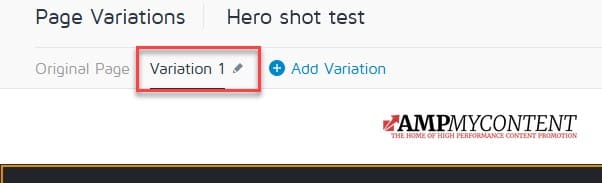

When the page loads up in the app, it will load in the ‘Variation 1’ tab.

This means that you can start making changes to this page design now, and still have the original version saved to test against and find which works best for you and your site.

So let’s look at some tweaks we can make to this hero shot.

I can simply click on the image and drag it to a new position and change the page layout.

Or I can swap out the image for a different one.

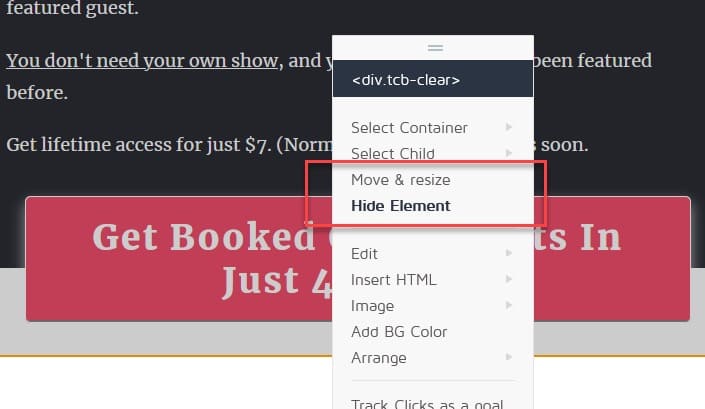

You just click on the image, select edit, and then upload a different image.

Or I could remove it entirely by hiding the element.

Simple!

So now that I have my edited image that I want to test, I just need to push the test live.

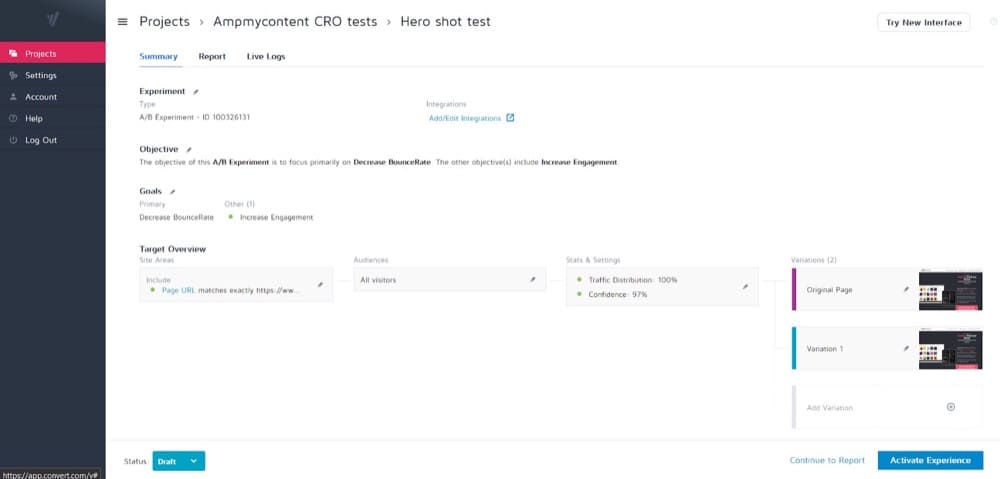

Click the blue ‘save and continue’ button in the page editor, and it will load the test settings page.

From here you can specify a few things.

The Test Goal

You can choose a specific goal for the app to look for during the test.

This could be a lower bounce rate, a higher CTR, or even revenue, so the app knows which version generates the highest dollar value.

The Audience to Target

Here you can decide on exactly who sees your test.

You can split it 50/50 among all page visitors, or even segment it to show to only specific people, demographics, locations or even UTM parameters of where they clicked through from!
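Convert handles this targeting in its own interface, so you won’t need code for it. Purely to show the logic behind a UTM-based audience rule, here’s a small Python sketch; the function name and the example URL are hypothetical.

```python
from urllib.parse import urlsplit, parse_qs


def in_test_audience(landing_url: str, allowed_sources: set[str]) -> bool:
    """Return True if the visitor arrived with a utm_source we want in the test.

    allowed_sources should be lowercase; the incoming value is lowercased
    so 'Facebook' and 'facebook' are treated the same.
    """
    params = parse_qs(urlsplit(landing_url).query)
    source = params.get("utm_source", [""])[0].lower()
    return source in allowed_sources


if __name__ == "__main__":
    url = "https://example.com/offer?utm_source=Facebook&utm_medium=cpc"
    print(in_test_audience(url, {"facebook"}))
```

Visitors without a matching `utm_source` simply see the original page and stay out of the test data.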

Stats and Settings

You can also specify the confidence level you want for the test.

For high-volume sites or tests where you want extra confidence in the results, you might raise this above the common 95% standard, for example to 97%.

Finally, once you’re happy with these settings, you can click on the blue ‘Activate Experience’ button to set the test live.

The app will then show this variation to your target audience at the traffic split you chose, all without you needing to change anything on the website itself!

Pretty cool right?

OK let’s walk you through 2 other quick changes that you can make to your landing page…

A/B Testing Your Value Proposition

So once you’ve tested the hero shot and got their attention, the next logical thing to look at on your landing page is your value proposition.

This is the text that helps hook your reader in, and it’s usually to the side of the hero shot or overlaid on top of it.

The value prop is so important because it gives visitors the context and desire to click your CTA and buy or opt in.

There are a lot of ways to improve this text:

- You can try different angles or hook ideas,

- Or you can research and interview your audience to listen to their needs and address that in your value prop,

- Or you can simply improve it further by adding more clarity to what you’re trying to say. (Sometimes the angle idea is good, but the execution is bad)

Either way, implementing this in a test is super simple.

It’s as easy as highlighting the text you want to change, and then clicking on edit.

Then you simply write the new version and set up the test just as you did before.

Easy, right?

So let’s look at one more way that you might want to improve your landing page performance.

A/B Testing Your User Form Fields

We’ve covered this in detail in another guide, but let me give you a quick recap.

The forms on an opt-in page are incredibly important because they’re the last step to getting the audience’s details and having them commit to an action, be it a sub, a trial, or a sale.

The thing is, the number of form fields they have to fill out, or the layout, can create resistance. There’s a perceived effort involved, and some visitors can’t be bothered to follow through and complete it.

Now you can get around this by:

- Providing a good UX,

- Staggering the form detail requests,

- Improving the written copy and alignment of the form and CTA,

- Or simply asking for less information. (Do you really need their name and phone number just for them to subscribe?)

Simple, right?

And the good news is that testing all of this is very easy inside of the Convert Experiences app.

You can simply click on the form element and choose to edit text, edit the CTA, remove form sections and more, all with just a few clicks.

So now that we’ve covered some simple tests to improve your landing pages, let’s look at some A/B tests you can run on the channels that can drive traffic to those pages also…

How to A/B Test Facebook Ads?

Whichever paid media platform you use, the main elements of ad performance are:

- The target audience,

- The ad copy and creative(s),

- The landing page(s).

Target the wrong audience and the best ads won’t make a difference. Target the right people and have a bad ad, and it won’t perform. Have a great ad but a terrible page and the ad clicks will be useless.

Because we’re not working on the landing page right now, our main concern is testing the audience and the ad so that we can improve visibility, click-through rate (CTR), and ideally the conversion rate.

A/B testing an audience is as simple as taking the winning ad and running it to a new audience group. However, I’m going to share with you a specific method that I use to A/B test Facebook ads that should work even if you go fairly broad with targeting, simply due to Facebook’s AI.

There are different types of Facebook ads and placements. Some ads will even let you try and get a sale or lead right there in the Facebook app. But the most popular ad type on Facebook is Newsfeed, so I’m going to focus on this type here.

You can apply the same principles and ideology to improve other ads on Facebook or other platforms.

You can even use these methods to find a winning text ad, then use it to create a video version once you know the messaging works.

Be Prepared To Lose Money To Find What Works

The first thing to understand is that very few tests start as winners, and this is especially true in the paid ad space. With that in mind, you need to adopt the mindset that you’ll have to spend money to establish your ad’s baseline performance and then improve from there.

In this example, I’m going to recommend 4 different tests to improve the image, the headline, the body text, and the CTA.

However, I recommend that you start with just one ad and get it profitable before adding in more ads. The reason is that you need enough traffic for each test to find the winning variation.

If you have a small budget then only run one ad at first and run a series of A/B tests until you improve it enough that it is profitable or close to it. Don’t make the mistake of trying to test multiple ads at once and running out of budget before you can get any of them profitable!

How Facebook’s AI Works and Issues with Budgeting

Facebook’s ad platform runs on a machine learning (ML) algorithm. ML is a type of AI that finds patterns in data sets, such as connections in your audience that you might never think to target.

Because of this, you can set your ad goal and let it find the right people for you, based on who converts most on that specific goal. The thing is, this approach is flawed, especially if you have a low budget.

Here’s why:

Facebook has what’s called a ‘learning phase’ for its ads. Its purpose is to learn about your audience so the ML can target the right people. To get through it, an ad set needs roughly 50 optimization (conversion) events within a 7-day period. If you’re running an ad for leads and they cost you $2 each, you need at least $100 in ad budget just to hit the minimum conversion requirement, and that’s within the first week alone.

(Not to mention the budget for each day after that so that the ad doesn’t turn off).

How to Run An Ad with a Lower Budget

One way around this is to optimize for an event one step further away from your real goal. Each step further from the final goal usually makes every event much cheaper.

If we look at potential conversion events then sales would cost the most, then leads, then clicks, and then impressions.
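The Python sketch below makes the budget math concrete. It works out the spend needed to reach the roughly 50 optimization events mentioned above, and how many days that takes at a given daily budget.

- The per-event costs are hypothetical placeholders. Only their ordering (sales cost more than leads, which cost more than clicks) comes from the text.
- The $20/day budget is an assumption.

Swap in the numbers from your own account.

```python
import math

# Approximate learning-phase threshold described in the text.
LEARNING_PHASE_EVENTS = 50

def budget_to_exit_learning(cost_per_event, events=LEARNING_PHASE_EVENTS):
    """Total spend needed to generate `events` optimization events."""
    return cost_per_event * events

def days_to_exit_learning(cost_per_event, daily_budget, events=LEARNING_PHASE_EVENTS):
    """Whole days of spend at `daily_budget` needed to reach `events` events."""
    return math.ceil(budget_to_exit_learning(cost_per_event, events) / daily_budget)

# Hypothetical cost per event for each optimization goal (placeholders only).
hypothetical_costs = {"sale": 25.00, "lead": 2.00, "link click": 0.50}

for goal, cost in hypothetical_costs.items():
    total = budget_to_exit_learning(cost)
    days = days_to_exit_learning(cost, daily_budget=20)
    print(f"{goal:>10}: ${total:,.2f} total, {days:>3} days at $20/day")
```

With these made-up costs, the lead row reproduces the $2 × 50 = $100 example above. If the days figure is longer than the learning window Meta currently documents, the ad set is unlikely to exit learning at that budget. That is the argument for optimizing one event further from your goal.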

In theory, the ML could find you the best audience and the best ad but ONLY if you can feed it enough data (which means larger budgets). If you don’t have that though, you need to test smarter. Here’s how.

Test with a Focus Group of an Ideal Audience and then Expand out

Rather than using the AI to find the right audience while also testing out ad versions, we’re going to use some old-school direct response techniques and work with a focus group first.

It’s as simple as running an ad to your warmest fans and testing what works best with them.

It costs more to show to them because we’re using a narrow or smaller audience, but we won’t stay here. Our goal is to create an ad that gets a response from our ideal audience and then expand out. This way when targeting a broader and cheaper audience, we know the ad copy and imagery works already.

Only then do we let the AI do its job of finding people.

Why?

Because at this point we have an ad that resonates with our ideal audience. Facebook’s AI works by tracking the people who convert and then showing the ad to people who are similar to them. And because we’ve ‘focus group’ tested the ad, we know the ideal audience will respond to it.

FB will track who is converting and then start to show it to the right people but without charging us more for it.

Smart eh?

The Facebook A/B Testing Process

We’re going to test the 4 main elements of a written ad, based on the order people pay attention to them:

- The image,

- The headline,

- The body copy,

- The CTA.

And because we’re trying to find the ad that appeals to our audience organically, rather than set up for conversions, we’re going to run the ad tests as impressions only. This way we can lower the cost to show the ad while getting enough data points for an accurate test.

This method also allows us to learn which elements organically improve CTR instead of the AI picking people for us.

You want to run all 4 tests to this focus group one after the next, starting with the image.

Before we get into that though, let’s quickly cover our testing options…

Should You Run ‘Manual Tests’, Dynamic Creatives, a 3rd Party Tool Or Use the Facebook Internal Testing Tool?

We can use a 3rd party tool to help test but we don’t really need to.

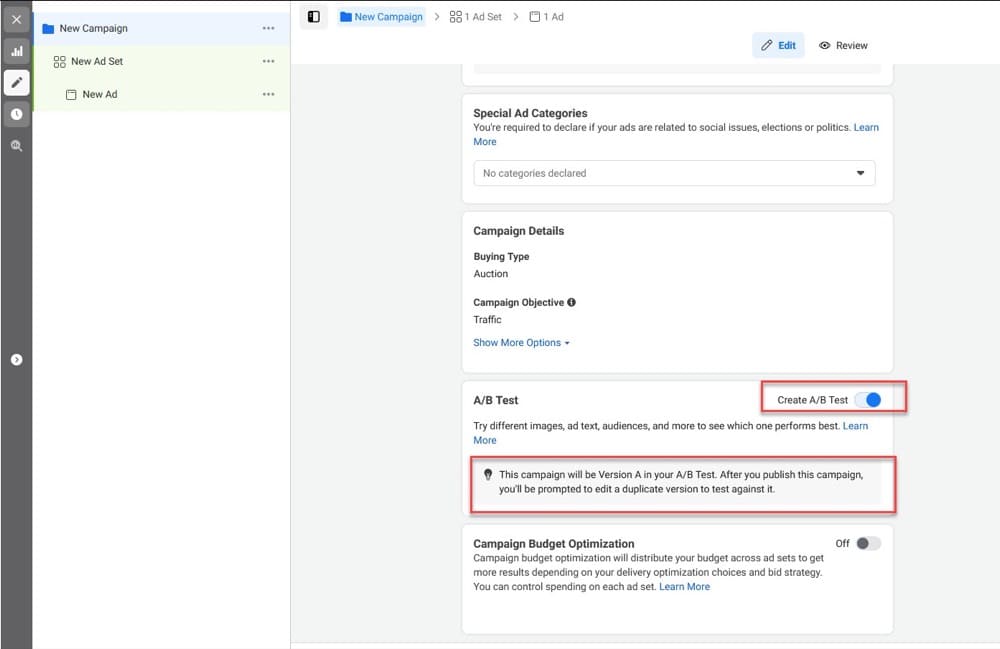

Likewise, Facebook has its own internal A/B testing option that allows you to split test campaigns, creatives and ad sets.

I’m not a huge fan of it for a few reasons:

- It is not the easiest to use. You can jump in from 3 different locations and the UX is different almost every time. Not to mention, it’s not immediately clear how the test is being set up. (Sometimes it wants you to have created the ads already before you test, other times it wants you to create them as you set up the test).

- The ‘end test early’ option for when a winner is found is set at 80% confidence, instead of the industry-standard 95% or 99%. At 80% confidence, a test where the versions actually perform the same will still declare a ‘winner’ about 1 time in 5. I’m pretty sure they chose this level because it takes less traffic to reach.

- It only allows A/B tests and not A/B/n.

- On the plus side, it does split traffic equally between each version and doesn’t divert traffic away from lower-performing ads too early.
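To see why a lower confidence threshold needs less traffic, here is a sketch of the standard sample-size approximation for comparing two proportions, such as two CTRs.

- It assumes a two-sided test with 80% statistical power.
- The 1.0% and 1.2% CTR figures are hypothetical.
- It is a textbook approximation, not the calculation Facebook’s tool uses internally.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(p_control, p_variant, confidence=0.95, power=0.80):
    """Approximate impressions needed per variant for a two-sided
    two-proportion z-test, using the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # critical value for the confidence level
    z_beta = NormalDist().inv_cdf(power)                      # value for the chosen power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical goal: detect a CTR lift from 1.0% to 1.2%.
for level in (0.80, 0.95, 0.99):
    n = sample_size_per_variant(0.010, 0.012, confidence=level)
    print(f"{level:.0%} confidence: {n:,} impressions per variant")
```

Running it shows the required sample growing as the confidence level rises. That is why stopping at 80% ends tests sooner, at the cost of more false winners.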

There are 2 other options though.

A/B test with Dynamic Creative

You can set up a single ad as normal and then select ‘Dynamic Creative’. This allows you to run a multivariate test, in that you can enter multiple image, headline, and body text options, and Facebook will deliver these to your audience and find the best performing combination of these variables.

There are a few flaws with this method though:

- It can put together combinations that don’t always make sense, like images and headlines that refer to different things.

- This also requires more traffic for each variation to get an accurate test. Facebook gets around this traffic requirement by predicting early winners and giving them more attention while taking budget and traffic away from other versions.
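The traffic problem with Dynamic Creative comes from how fast combinations multiply. This sketch counts them with `itertools.product`.

- The asset names are placeholders.
- The 20,000 impressions per combination is a hypothetical figure.
- Treating every combination as needing the same sample as one A/B variant is a simplification. As noted above, Facebook actually shifts budget toward early winners instead of splitting it evenly.

```python
from itertools import product

images = ["img_a", "img_b", "img_c"]
headlines = ["headline_1", "headline_2", "headline_3"]
bodies = ["body_1", "body_2"]

# Every image x headline x body pairing is a separate combination to evaluate.
combos = list(product(images, headlines, bodies))
print(len(combos))  # 3 * 3 * 2 = 18

impressions_per_combo = 20_000  # hypothetical requirement per combination
print(f"{len(combos) * impressions_per_combo:,} impressions vs "
      f"{len(images) * impressions_per_combo:,} for a 3-way image test")
```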

A/B test with Manual Testing

The final option is to run a ‘manual test’. Here, you create the ad, duplicate it inside the same ad set, and change a single element in each copy, such as the image or headline. This gives you a simple A/B/n test.

The beauty of this is that it requires relatively little traffic, because there are fewer versions. On top of that, machine learning isn’t diverting traffic from one ad to another, as long as you don’t use the campaign budget optimization option.

Personally, I feel this is the best way to find a winning ad when you only have a low budget, while also finding the best performing elements of the test, without it being tweaked by the ML or requiring a huge budget.

Now let’s walk you through setting up the tests…

Step #1: A/B Test the Image First

The image is the first thing that gets your audience’s attention. Depending on your budget, I recommend creating 2-4 different images to test against each other.

The setup process is quite simple. Start by choosing ‘Traffic’ as the campaign objective and give the campaign a name.

Make sure that you don’t have the ‘campaign budget optimization’ turned on, as this will cause the different ads to not be shown equally.

Then, click through to the ad set section and select the destination you want to send the audience to (in this case, your website).

Set a daily budget of around $10-20 so that it delivers impressions quickly and you can build a large sample for your test.
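You can estimate how long a test will take at a given budget. The sketch below makes these assumptions:

- The ad set splits impressions evenly across variants, which is why campaign budget optimization stays off.
- CPM (cost per 1,000 impressions) is a hypothetical $10.
- The target is a hypothetical 10,000 impressions per variant.

Plug in your own CPM and a sample size from a calculator.

```python
import math

def days_to_sample(impressions_per_variant, variants, daily_budget, cpm):
    """Days needed for an evenly split ad set to give every variant
    `impressions_per_variant` impressions. `cpm` is cost per 1,000 impressions."""
    impressions_per_day = daily_budget / cpm * 1000
    total_needed = impressions_per_variant * variants
    return math.ceil(total_needed / impressions_per_day)

# Hypothetical: 4 image variants, $15/day, $10 CPM, 10,000 impressions each.
print(days_to_sample(10_000, variants=4, daily_budget=15, cpm=10))
```

Doubling the budget or halving the number of variants roughly halves the wait. That is one reason to keep image variations to 2-4.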

Then, set the audience as the most ideal and warmest option that you have for what you’re pushing them to. (If you’re testing traffic to a sales page then test the ad on leads, but if you’re testing traffic to content, target site visitors. Use a custom audience for this.)

In this example, I’m running ads to an article, so I want to test against all my audience to see what resonates with them to click through.

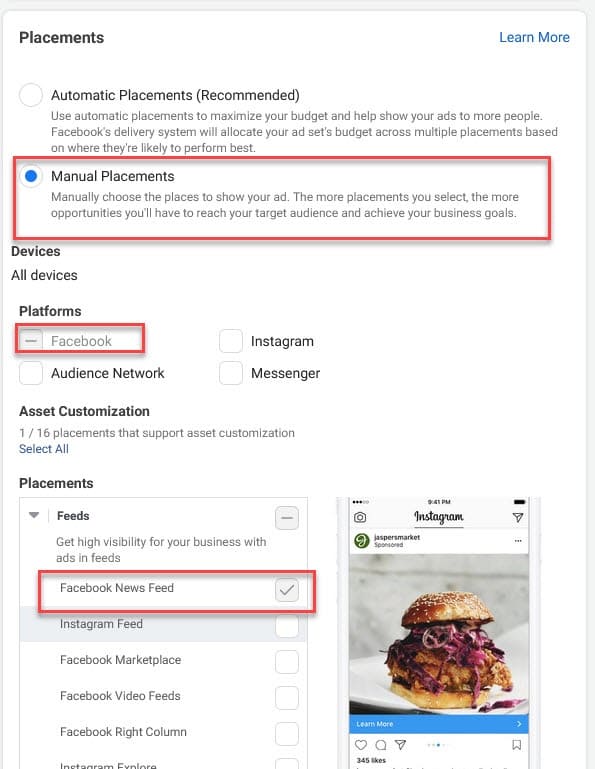

Next, you need to choose the placement.

Personally, I like to test News Feed ads before anything else, as they give me the largest space to get my audience’s attention. In theory you can test any placement. But if you try to test them all at once, you might find that your ad image works well in one placement and badly in another. Be aware that narrowing down like this helps with initial testing but is more expensive; you will see costs drop significantly later when you expand out. The goal is to find the ad that gets the most organic clicks from the ideal audience.

So, set the placement to manual and select the “Facebook News Feed” option.

Scroll down to the optimization and delivery section.

Although we’re setting up an ad to drive traffic off Facebook, we want to select the ‘Impressions’ option. This way our audience will see the ad for cheap and any clicks are based on the creative performance vs the AI.

Now that the adset is set up, it’s time to create the first ad for the test.

Because we’re testing a few image variations, we need to create one ad, then duplicate and alter the image in the other versions.

So go ahead and upload the first of your image options to the ad, and then fill out all the content settings such as the headline, the body, and the CTA.

You’re going to keep these identical in each variation and only change the image.

To duplicate an ad, before you hit publish, go up to the 3 dots on the left-hand menu and click on ‘quickly duplicate’. Just make sure you do this on the ad, and not the ad set or campaign.

This will create a copy of the ad. Open it up, rename it so you know it’s a variant, and then swap out the image in this new ad with another that you want to test.

You want to do this for as many image variations as you want to use.

When you’ve done all that, hit publish!

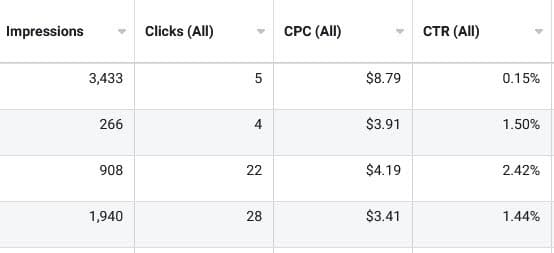

Step #2: Run the Ad, then Test your Results

Because it takes a little while for Facebook’s delivery to balance out and make sure each version is being seen, you want to let the ad run for at least 24 hours before checking back in to see how it’s performing. The goal is to find the image that gets the most organic clicks from your focus group.

Even though the platform tells us the CTR of each version, we need to look deeper and make sure we have enough data to trust that these results will hold going forward. One ad might perform well with the initial audience but drop off when scaled, for example.

A really easy way to see if we can trust these results is to use a statistical significance tool.

You can simply copy and paste the impressions and clicks from each version and test them to see if you have enough data.
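Under the hood, many significance calculators run something like a two-proportion z-test on the clicks and impressions you paste in. Here is a minimal Python sketch of that test.

- It assumes two variants with numbers copied from Ads Manager. The values shown are hypothetical.
- It uses the 95% standard mentioned earlier, which corresponds to p < 0.05.

```python
import math
from statistics import NormalDist

def ctr_significance(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-sided two-proportion z-test on CTR. Returns (ctr_a, ctr_b, p_value)."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR assumes no real difference (the null hypothesis).
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (ctr_b - ctr_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return ctr_a, ctr_b, p_value

# Hypothetical numbers for two image variants.
ctr_a, ctr_b, p = ctr_significance(120, 10_000, 165, 10_000)
print(f"A: {ctr_a:.2%}  B: {ctr_b:.2%}  p = {p:.4f}")
print("Significant at 95%" if p < 0.05 else "Keep the test running")
```

With 3-4 image variants, comparing each one against the original this way is a reasonable rough check. Each extra comparison adds another chance of a false winner, though, so a larger sample helps.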

Once you’ve let it run long enough to find the winning image, simply keep the winner and pause or remove the ads running the weaker images.

Step #3: Repeat for the Next Tests

The next thing that the audience checks after the image gets their attention is your headline. This means it’s the 2nd highest impact change that we can test.

Fortunately, all the hard work is already done. Go back into your ad editor and duplicate the winning image ad as you did before and give each of them a new name.

Keep the winning image the same for each duplicate, but now start writing different headlines for each, and then hit publish.

Let the ad run for 24 hours, then check back and use the stat sig tool to test your results just like before. If it needs more time or a larger sample size per variation, then let it run for another day or so.

Now, you simply repeat the process for the body copy test and write different versions and find a winner. Then you can test again for the CTA if you want to improve it even further.
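The whole process can be sketched as a loop: change one element at a time, crown a winner, and make it the control for the next round. This is a minimal Python illustration.

- The `Ad` fields mirror the four elements tested here, in the same order.
- `pick_winner` and its CTR numbers are hypothetical stand-ins for reading your results and running a significance check.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Ad:
    image: str
    headline: str
    body: str
    cta: str

def make_variants(control, element, options):
    """Keep the control and add copies that differ in exactly one element."""
    return [control] + [replace(control, **{element: option}) for option in options]

def run_sequential_tests(control, test_plan, pick_winner):
    """Test each element in order; each winner becomes the next control."""
    for element, options in test_plan:
        variants = make_variants(control, element, options)
        control = pick_winner(element, variants)
    return control

# Hypothetical observed CTRs for each tested value (stand-in for real results).
observed_ctr = {"img_a": 0.015, "img_b": 0.021, "Headline 1": 0.019, "Headline 2": 0.024}

def pick_winner(element, variants):
    return max(variants, key=lambda ad: observed_ctr.get(getattr(ad, element), 0.0))

control = Ad(image="img_a", headline="Headline 1", body="Body 1", cta="Learn More")
plan = [("image", ["img_b"]), ("headline", ["Headline 2"])]
winner = run_sequential_tests(control, plan, pick_winner)
print(winner)
```

Changing only one field per copy is what lets you credit any CTR difference to that element.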

You may find that your ad is technically costing you too much to be profitable and that’s ok. Our goal was to A/B test the ad to find the version with the highest organic CTR from your ideal audience. At this point, you can now expand out to a colder audience and run this as a new ad with a different conversion goal, and let the ML do its magic!

And that’s all there is to manual A/B testing on Facebook with a low budget. You can use the same principles for any of their ad types.

How to A/B Test on Social Media?

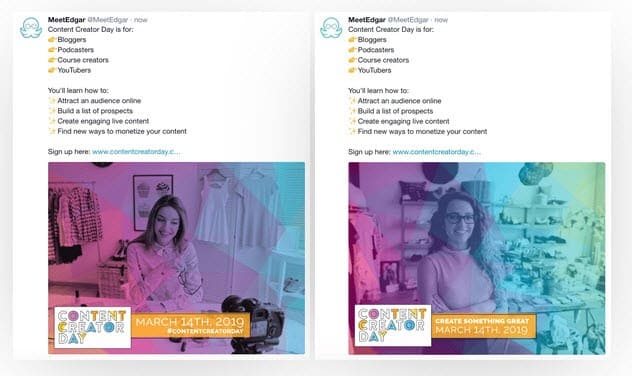

Testing on social media is almost identical to testing for paid ads, in that you’re tweaking the image, body copy, and CTA to try and improve your response.

Your goal could be to improve CTR if you want to drive traffic elsewhere, or to improve engagement. Whatever your goal, the testing process is the same: we test the highest-impact elements first.

We do that because:

- If the image doesn’t get their attention then they don’t read the body copy.

- If they don’t read the body copy then they never see the CTA.

- And if the CTA is weak then they won’t do what you ask them to.

The good news is that this is much easier to set up as we don’t need to worry about any machine learning or ad imbalances like we do with paid ads.

There are however a few key differences with the actual setup for A/B testing on social media vs paid social:

- Because you’re not paying to amplify its reach in front of an audience, you may not get a huge data set. The total audience that you can test with is limited by the size of your social fans. This means that your results may not be totally accurate or may take a while to get a large enough sample size.

- It might also take a while to see traction in each test, because you have to stagger the release of each version rather than show them all at once.

The Testing Process

The beauty of paid social testing is that we can isolate who sees each version. That way we segment out our audience so that they only see one version and not all of them.

With organic social, we can’t really do this. Even though only around 10% of your audience will see your social post without boosting, we still want to try and avoid the potential awkwardness of posting multiple variations and your audience seeing all of them at once, so we stagger their release.

We post the first version to your audience today, then another version later on or in a few days. The goal is to try and see which version does better but without them seeing multiple versions of the same post back to back. The issue of course is that this delays your test a little.

The good news is that the process to do this is super easy.

You can do it in 2 ways. You can either do this manually by:

- Coming up with an idea for a post, then mocking up different images, body copy, and CTAs.

- Creating UTM links for each version so you can track the clicks each version drives to your site.

- Posting the first version.

- Automating or self-posting an image variation in a week or so.

- Waiting another week and then posting again for the 3rd image, then waiting a week and posting the 4th.

- Finally, comparing the performance and finding the winning image variation.

- Repeating the same process for the body copy test, the CTA, etc.
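For the UTM step, here is a small Python helper that uses only the standard library’s `urllib.parse`.

- The parameter names (`utm_source`, `utm_medium`, `utm_campaign`, `utm_content`) are the standard UTM fields.
- Using `utm_content` to tell post variants apart is a common convention.
- The URL and campaign names are hypothetical.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit

def utm_link(base_url, source, medium, campaign, content):
    """Append standard UTM parameters to a URL, keeping any existing query string."""
    parts = urlsplit(base_url)
    params = urlencode({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": content,  # distinguishes each post variant
    })
    query = f"{parts.query}&{params}" if parts.query else params
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))

# Hypothetical post URL and campaign name: one tracked link per image variant.
for variant in ["image-a", "image-b", "image-c", "image-d"]:
    print(utm_link("https://example.com/blog/post", "facebook", "social", "post-image-test", variant))
```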

Or you can set it all up and automate everything by using a social media scheduling tool like MeetEdgar.

This allows you to schedule the posts with the variations you want to run. It also records the results and tracks link clicks, engagement, and CTRs for you.

Using this process, you could:

- Set up 4 image variation tests,

- Let it run for 14-30 days so that it tests each image variation,

- Find the winning image with the best CTR,

- And then repeat and find the best body copy, the best CTA, etc. All without you having to mess around.

Top tip for social media posts and even ads?

Try creating UGC or ‘User Generated Content’.

You simply take customer testimonials and format them as a post, almost as if it’s been shared directly from their own social media account. The image looks good, but the copy reads like a friend giving a recommendation on their news feed.

Trust me on this, these types of ads convert!

How to A/B Test Emails?

When it comes to A/B testing email marketing, we care about 3 areas:

- Delivery: Is the email landing in their inbox or going to the spam folder?

- Open Rate: Are they opening the email? If not, the rest is pointless.

- CTR: Are they reading the email and then clicking on the CTA?

Delivery isn’t really something we A/B test, but you can try out different providers. One theory is that if an email automation tool is used by a lot of spammy companies and you’re hosted with them too, it can hurt your open rate. (This is why some companies only use tools that deny access to certain industries.)

Other than that, the best way to stay out of the spam folder is to keep your list clean. Remove addresses that bounce or consistently don’t open, and be careful what you write in the email so it doesn’t get picked up by spam filters.

It’s also worth checking that any email you write is actually responsive to different devices. You can sometimes get a bad CTR simply because they can’t read the email or the button or link is off-screen.

A final thought on delivery: when you start to improve your emails using the testing process we’re about to share, you’ll see a better response from your audience. This can help keep you out of spam folders, as email providers can see that your audience enjoys your emails, so you don’t get penalized. It’s a win-win!

With that covered, let’s look at this A/B testing method.

The Email Testing Process

As you can guess from the list above, there is a priority order when it comes to A/B testing your emails:

- We test the email subject lines first to make sure that the email gets opened,

- Then, the body copy to make sure they read and care about what you’re saying,

- Finally, the CTA to make sure they click.

(We can also test times of day for best delivery and other features, but it’s these 3 elements that will make the most difference to your email results.)

Not every email tool has A/B testing built into it, so ideally you want to use one that has these features. Personally, I use Active Campaign as it allows you to A/B and A/B/n test different elements of your emails.

You can test elements like:

- The subject line,

- Who the email is from,

- The email body copy,

- Images embedded in the email,

- The CTA.

Even better, it has a unique feature called ‘predictive sending’, which tracks the individual open times for each of your current subscribers and sends the next emails based on the best times.

So let’s have a look at how we would do this…

There are 2 common types of email:

- Evergreen emails that could be part of an automation.

- Broadcast emails that you send once to your list, perhaps to advertise an offer.

Evergreen emails are easy to test and make sense to improve because you’ll always be sending them on automation. Any lift here has a very noticeable effect over time.

That being said, you can also A/B test broadcast emails, but the process is a little different. You test with a small section of your audience first to find the most effective email version, then send it out to the rest of the list.

This way, the majority get the version that provides the most lift. It may seem like overkill but if you can get 2-10% more CTR from a broadcast, and your list is 100k subs, that’s a HUGE difference in traffic from that one email, and it’s worth testing. (Even with smaller lists.)

Let me walk you through them both quickly…

The Setup

This process is very similar to what we’ve talked about so far. We test the subject line, the body copy, then the CTA. We also run one test at a time, find the winner, and then use that as the new ‘control’ for when we test the next element.

It’s going to look a little different for testing broadcast emails but I’ll explain how when we get to it. So let’s show you how inside of Active Campaign (you can use any other tool btw).

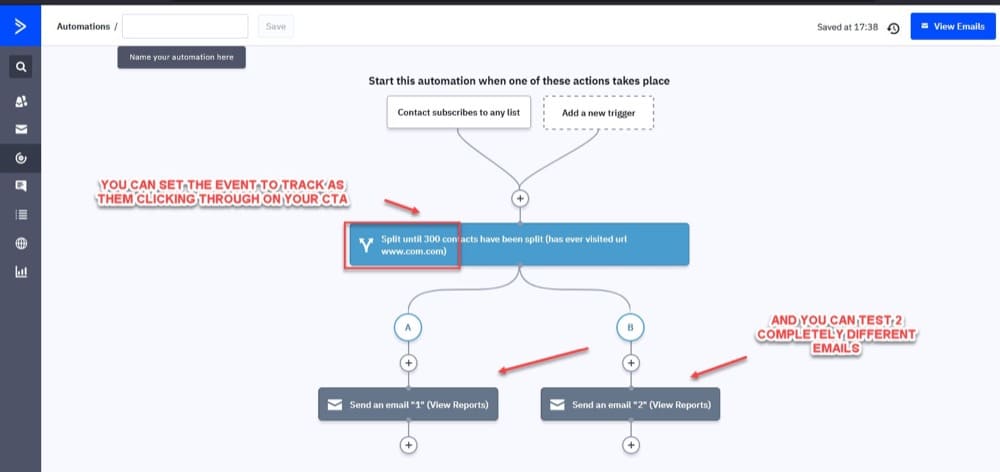

Annoyingly, you can only A/B test a single variation inside the automation builder and not run an A/B/n test.

This is fine if you only want to test one version, but if you want to test as many as 4 variations, then you’re going to have to use the campaign builder to test the email, then copy and paste it back into your automation later.

This isn’t a bad thing as you would test broadcast emails this way also. So go ahead and click on a new campaign, and then choose the split test option.

Next, choose the audience you want to send this to. Here’s where the test differs depending on if you’re testing the evergreen or broadcast emails.

If you want to test an evergreen email (perhaps an automation when someone opts in to your home page for example), choose that list.

But if you want to A/B test a broadcast email, you need to create some ‘test’ lists. You simply create a few lists that are segments of your current subscribers that you can run your initial ‘feeler’ tests to (almost like a focus group that we did with paid ads earlier.)

You run the broadcast tests to these lists and improve the email before then sending it out to the remainder of your audience.

Now, you’re going to need a separate test list for each element that you want to test. This way, nobody receives the same test email twice, or a second variation of it later on.

Because I recommend running 3 tests, you need to make at least 3 test segments and import subscribers into them that best match the type of content that you want to send and test. (Ideally, you want a few hundred people at least in each).

This way you can run the subject line test to segment 1 and see how they respond. Then you can run the body copy test to segment 2, then the CTA to segment 3, and, finally, send the winner to everyone else.
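If you’d rather carve the test segments out of an exported subscriber list before importing them, here is a sketch. It randomly splits a list into three segments of a few hundred people each, plus a remainder.

- Random assignment is a swapped-in technique. The text suggests picking subscribers who best match the content, whereas a random split keeps the segments comparable, so differences come from the email rather than the audience.
- The addresses and the 300-person segment size are hypothetical.
- Importing the segments into Active Campaign isn’t shown.

```python
import random

def split_for_broadcast_tests(subscribers, n_segments=3, segment_size=300, seed=None):
    """Randomly carve `n_segments` test segments from a list.
    Everyone not in a segment is returned as the remainder, who get the winner."""
    needed = n_segments * segment_size
    if needed > len(subscribers):
        raise ValueError("List is too small for the requested segments")
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)  # seeded for a repeatable split
    segments = [shuffled[i * segment_size:(i + 1) * segment_size] for i in range(n_segments)]
    remainder = shuffled[needed:]
    return segments, remainder

# Hypothetical list of 5,000 subscriber emails.
subscribers = [f"user{i}@example.com" for i in range(5000)]
segments, remainder = split_for_broadcast_tests(subscribers, seed=42)
print([len(s) for s in segments], len(remainder))  # [300, 300, 300] 4100
```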

Make sense? The rest of the testing from here is identical for both types of email until the end.

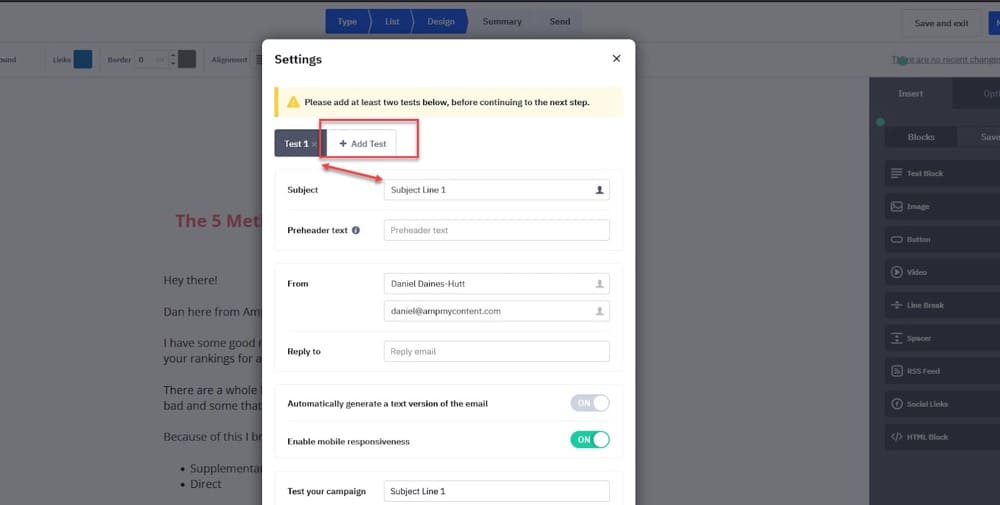

Once you’ve chosen the list, scroll down to the bottom of the page to find the split test options you can run. Choose the bottom option, “Test different email subjects, from information, and/or email contents”, since it lets you run any of these tests. Then hit ‘next’ to load the email editor and write your email.

Do this as normal, then click the blue ‘next’ button. This will cause a pop-up to appear with the option to split test the subject lines.

Click on the ‘test’ button to create as many subject lines as you want to test. Then simply click on each one and edit the subject line.

Write out your different subject lines. I recommend trying to think of different angles of why your audience would care about the content in the email. I would write maybe 4 variations max to try and find the best version.

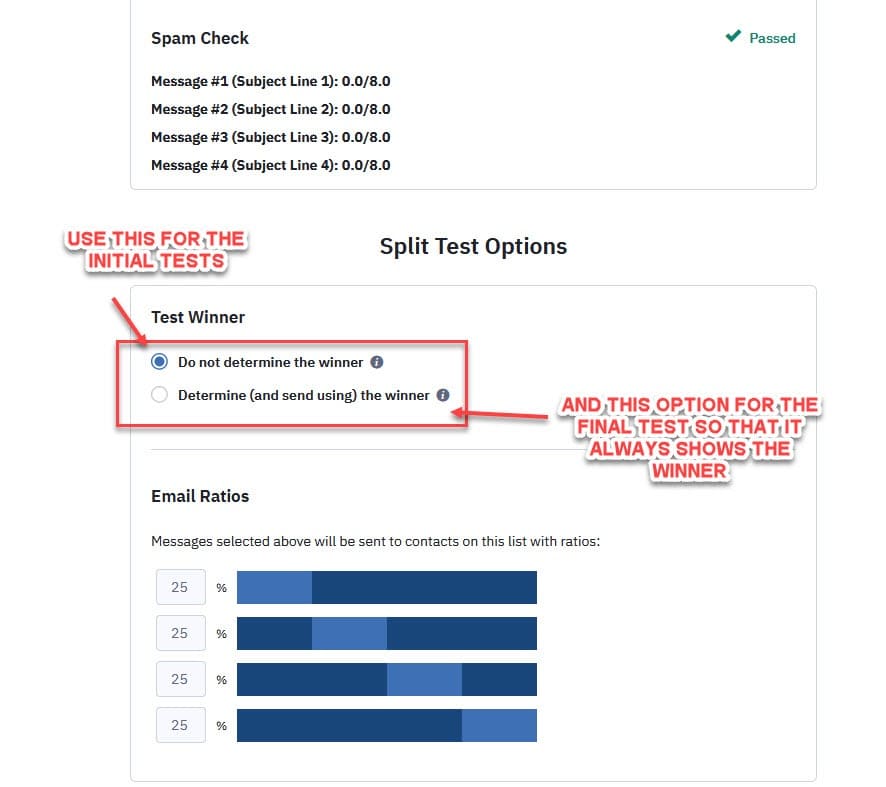

Then, click “next” to go to the final page to confirm your details. Here, you’ll see the percentage of traffic going to each version, which should be equal. There is also an option to either ‘not determine the winner’ or ‘determine the winner’.

Now, this wording is a bit misleading, because either option will give you a report with the results after the test runs. The top option simply ends the test. That’s what we want, because we’re going to use the winning subject line to test the body copy next.

The bottom option, however, finds the winner and then uses it for all future signups to that list. We never really need this. If it’s an evergreen email, we’re copying and pasting the winner back in. If it’s a broadcast, we’re going to send the winning version to the remaining subscribers anyway.

For now, just hit send and let the subject line test run.

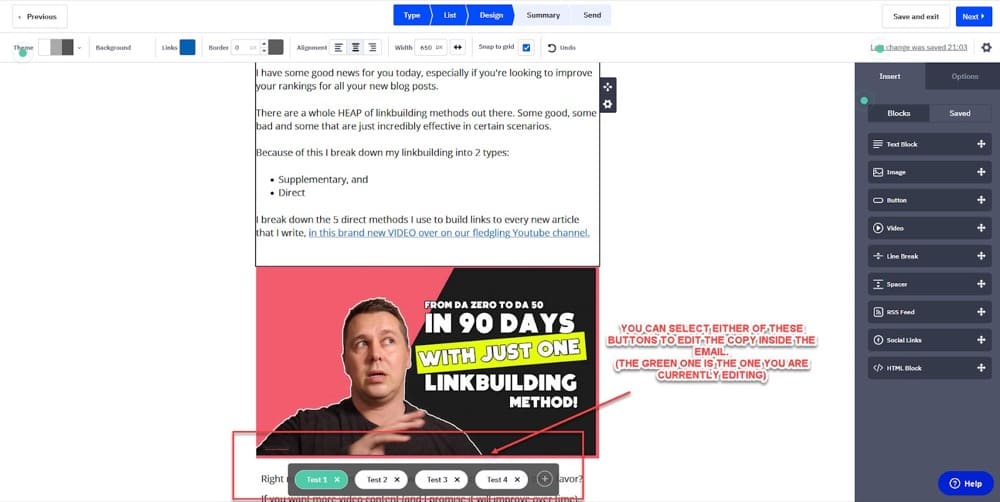

Once you have a winning variation, go back in and set up a new test and make sure to choose the email content option (“Test different email subjects, from information, and/or email contents”) when choosing the type of split test you want to run.

Keep the winning subject line and original email. Then, scroll down the page in the email editor and you will see some green and white test buttons.

You can click on any of the tests to edit the body content for that particular email. (The green one is the one you are currently editing).

Test your writing in different body sections. Try new angles or hooks to keep readers interested. Try a short version and a long one.

Once you’ve made the changes and have the variations created, simply send just like before. Later, check back in to see which version won.

Once you have the winner, simply repeat the process to test the CTA until you have the final version with the best performing subject line, body copy, and CTA.

At this point, you can either copy and paste this email back into your evergreen campaigns, or if you’ve been testing a broadcast email to segments of your audience, simply send this winning version to the rest of your list (and choose not to send it to the test segments).

And there you have it. A super simple way to A/B test your emails for far more lift.

Email is a VERY effective channel. You would be crazy not to try to improve each email you send, especially because it helps improve the delivery of all future emails!

How to A/B Test Content with Engagement or Conversion as the KPI?

It amazes me that so few people do this because your content is just like a sales page. Its goal is to attract a specific audience and get some kind of conversion event, be it a lead, a click, a share, or even an ‘aha’ moment where they now move closer to purchase.

(Everyone seems focused on testing and updating them for SEO traffic but doesn’t really care if it does its job when the traffic gets there…)

The testing processes and methods are incredibly similar and can have a huge effect on the results of your content.

There are 3 main performance elements that we care about with content marketing:

- Visibility. Are people finding it? Can they read it?

- Engagement. Are they reading it? Do they bounce? How much are they reading?

- CTA. Are they taking action? Do they see your CTA? Are we asking them to take action…?

So let’s look at each of these elements and areas that we could run potential A/B tests.

Improving Content Visibility

Your audience needs to be able to read your content. This means:

- Loading fast enough so they don’t bounce away,

- Being responsive so they can see the content and have a good experience on any device,

- Being findable in search engines,

- And being compelling enough that they click on that result.

Here’s a cool (yet underrated) way to improve your CTR from search traffic: testing and improving the title tag and meta description. By doing so, you can get far more traffic and improve your rankings. (Especially when you realize that most sites don’t even write a compelling meta description and just let WordPress pull the intro from their article instead.)

Allied Pickfords, a furniture moving company, decided to run a test on their meta description for one page that gets around 6,600 searches per month. They managed to get a 36% increase in clicks (going from 2.8% to 3.9%) with just their initial test for this single page (and they only tested improving the meta description.)

This was for a high intent page that would directly affect their sales, but you could apply the same method to any article that attracts traffic.

What’s really cool is that many SEOs believe Google uses CTR in the SERPs as a minor ranking factor. The reasoning is this: if people click through to a result that isn’t even the first choice on the page, it must be good, so Google rewards it by ranking it higher.

Unfortunately, there are no automated tools for this type of test and it only works if you’re on pages 1-3 for your keyword, as the audience has to find you in the SERPs before they would ever see your description.

That being said, the process to apply this is simple:

- Go into the Google Search Console, check the traffic and CTR for your test page in the past month.

- Run a meta description test by editing the current version, let it run for 14-30 days, and track the results.

- Head back into Google Search Console and compare the CTR and rankings for that page before and after.

- Continue to improve on the description for further iterative and incremental improvement.

- Start testing titles to see if that gets more lift.
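When you compare the two periods, watch for CTR changes that coincide with a ranking change. Results higher on the page tend to get more clicks, so a ranking shift can explain a CTR change on its own.

Here is a minimal Python sketch of the comparison.

- It takes clicks, impressions, and average position for each period. The Search Console performance report shows all three.
- It flags a large position shift so you don’t credit the new description for a ranking change.
- The 0.5-position tolerance and the sample numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PeriodStats:
    clicks: int
    impressions: int
    avg_position: float  # lower number = higher on the results page

    @property
    def ctr(self):
        return self.clicks / self.impressions

def compare_periods(before, after, position_tolerance=0.5):
    """Return (relative CTR lift, position shift, ranking_changed).
    ranking_changed is True when average position moved more than the tolerance."""
    lift = (after.ctr - before.ctr) / before.ctr
    position_shift = after.avg_position - before.avg_position
    ranking_changed = abs(position_shift) > position_tolerance
    return lift, position_shift, ranking_changed

# Hypothetical 30-day periods before and after editing the meta description.
before = PeriodStats(clicks=150, impressions=5_000, avg_position=6.3)
after = PeriodStats(clicks=180, impressions=5_000, avg_position=6.1)
lift, shift, ranking_changed = compare_periods(before, after)
print(f"CTR lift: {lift:.1%}, position shift: {shift:+.1f}, ranking changed: {ranking_changed}")
```

If `ranking_changed` is True, treat the CTR lift with caution. Part of it may come from the new ranking rather than the new description.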

Testing and Improving Content Engagement & CTAs

When it comes to improving engagement and CTA, the main elements we’re focused on are:

Bounce Rates

Ideally, you’ve already got a fast loading and responsive page to counter any obvious bounce issues, but have you checked that it’s all working?

Corrupted images and off-center text provide a bad user experience that can make them leave.

If the page works, is it actually what the visitors want?

From a testing perspective, you can look at competing content on the topic that ranks high and see how it differs. Is the angle different? Do they cover things you are missing?

You can easily edit your article to better fit search intent, then use Google Analytics to compare the bounce rate before and after the changes.

Engagement

It doesn’t matter if your content ranks if you lose visitors.

A simple test is to find drop-off points in your articles where you lose the audience. It’s easy enough to track scroll depth by setting up goals in either your analytics or your A/B testing tool and using that as an engagement metric.
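Once you have scroll-depth data, finding the worst drop-off point is a simple calculation. This sketch assumes your analytics or testing tool can report how many visitors reached each depth milestone. The milestone labels and counts are hypothetical.

```python
def biggest_drop_off(depth_counts):
    """Given visitors reaching each scroll-depth milestone (in page order),
    return (from_milestone, to_milestone, share_of_readers_lost) for the worst step."""
    milestones = list(depth_counts.items())
    worst = None
    for (prev_depth, prev_n), (depth, n) in zip(milestones, milestones[1:]):
        lost_share = (prev_n - n) / prev_n if prev_n else 0.0
        if worst is None or lost_share > worst[2]:
            worst = (prev_depth, depth, lost_share)
    return worst

# Hypothetical counts exported from a scroll-depth goal or event report.
counts = {"0%": 1000, "25%": 720, "50%": 610, "75%": 300, "100%": 240}
start, end, share = biggest_drop_off(counts)
print(f"Largest drop-off between {start} and {end}: {share:.0%} of readers lost")
```

Measuring the loss relative to readers still on the page, rather than to all visitors, highlights the section that pushes people away. That section is the one to rewrite and retest.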

Or, you can take it a step further and use a tool like Hotjar to track heatmaps of your articles along with user recordings, so you can track your user behavior and see exactly what they are doing on the page and where you lose them.

You can even survey or interview your audience to find out why they are leaving. I know it seems like overkill for a blog post, but every article should not just attract visitors but also convert them in some way.

Speaking of which…

Conversions

Do you have a call to action for visitors to take?

Maybe a trial, an opt-in, or a social share? A lot of blog posts forget to ask the audience to do anything. You put the work in and got the traffic, so you should at least try to get some measurable response from it!

If you do have a CTA, does it work? Have you tested the buttons or links lately? Can the audience see the CTA? Do they scroll far enough to even see it? Is it even clear that it’s a CTA? Sometimes hyperlinks are the same color as site branding. Other times buttons are not clear that they can be clicked. Or perhaps the language on the CTA isn’t compelling enough.

All you need is the right A/B testing tools to do this. With that in mind, here is a list of specific A/B testing software and tools you can use to test out your content, site, and much more!

Top 7 Marketer Friendly A/B Testing Tools

I’ve based this shortlist on a few criteria that I, as a marketer, find crucial:

- The price!

- Does the tool have a WYSIWYG editor to visualize the changes and not need a coder or developer to implement them?

- Does it provide adequate support when setting up and running tests?

- Does it provide real-time results on tests (and not delayed by x days)?

- Does the test also connect to guardrail metrics (i.e. can you see revenue generated from each variation or test live)?

- Can it connect with an analytics tool for easy reporting?

So let’s show you some options…

#1: Convert Experiences

So let me try to be impartial, seeing as it’s Convert writing this guide.

Here at Convert, our focus is on providing the best tool we can, for you to run CRO experiments, while providing unparalleled support and customer service.

We’re the tool of choice for a lot of CRO agencies, even when they have a suite of other tools available. Our clients usually pick us when they’re looking to move away from single tests and start scaling out their testing programs.

We’re also a forward-focused company. We care about customer and audience privacy, built our tool to meet GDPR and other regulatory requirements, and are constantly looking for ways to do better, with a focus on data privacy and security.

We also care about our impact on the world and work with multiple charities and tree planting campaigns. Good tool, good service, good people, doing good :D.

WYSIWYG editor: Yes.

Pricing: Starting as low as $559/mo (annual), with $199 for every 100k visitors after that.

Does it provide support and help when setting up and running tests? If so, what kind? Yes, right from the start of the trial. That’s right, we also offer a 15-day free trial, no card needed! Support includes live chat, a blog, and a knowledge base, with more to come.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes.

Can it connect with your analytics software? Yes. GA and others.

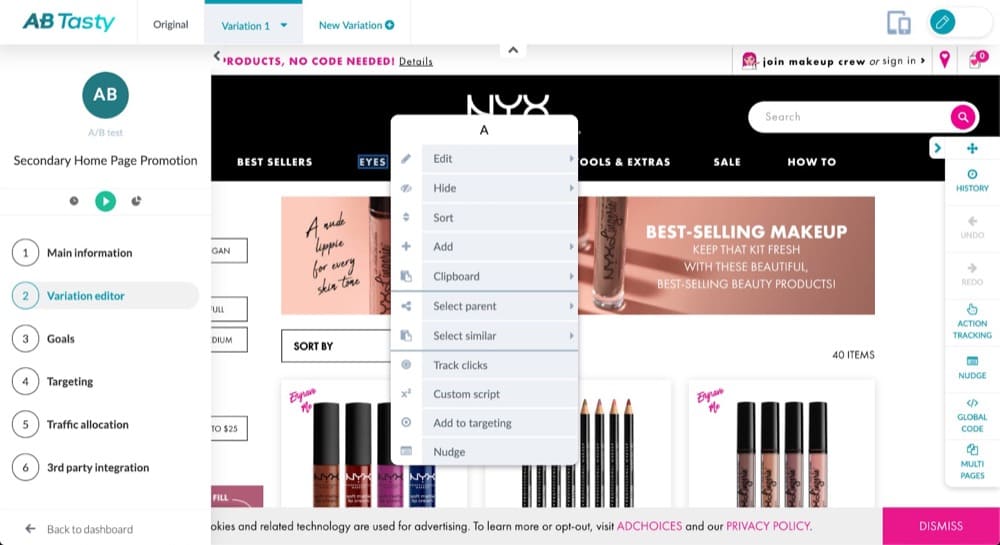

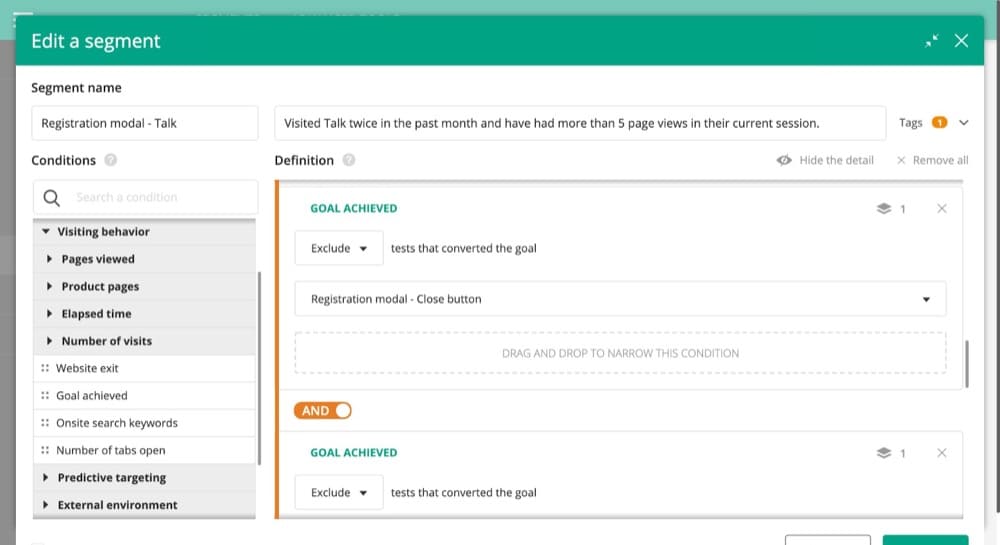

#2: AB Tasty

AB Tasty is another entry- to mid-level tool for companies looking to move past basic experimentation and start scaling their testing programs.

It features a clean, easy-to-use interface and simple test setup, and it even has machine learning features to help sites with large traffic run more sophisticated personalization and data tracking. (Apparently, 33% of their team is dedicated to R&D for new features.)

The reporting function could do with an update, as it is quite simple and uses bar graphs instead of plotting data points, but overall, it’s a great tool.

WYSIWYG editor: Yes.

Pricing: AB Tasty’s pricing is gated. You need to fill out a form to request a demo and learn more about their pricing model.

Does it provide support and help when setting up and running tests? If so, what kind? Yes. They have a knowledge base and live chat.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes.

Can it connect with your analytics software? Yes.

#3: Optimizely

Optimizely is very enterprise-focused. Their goal seems to be to deliver the best testing product they can for sites with very high traffic volume that are trying to become more data-driven.

They have all the features you would expect, along with a machine learning element. (Again, ML works best with large traffic sites so this makes sense.)

Beyond testing, Optimizely also offers a CMS for building a blog and an e-commerce platform (à la Shopify and similar) as part of their ‘Digital Experience Platform’.

WYSIWYG editor: Yes.

Pricing: Optimizely uses a custom pricing model, but Splitbase estimates it costs at least $36,000 per year ($3k per month). Pricing varies if you also use their other tools.

Does it provide support and help when setting up and running tests? If so, what kind? Yes. They have a bank of resources to help users get unstuck and phone numbers to call for help 24/7.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes.

Can it connect with analytics software? Yes.


#4: Kameleoon

Kameleoon is a French-based A/B testing platform used by teams around the world.

Praised for its UI, personalization settings, and integrations with Shopify and other third-party tools, it’s also a tool of choice in healthcare and fintech, thanks to its focus on privacy and data protection.

WYSIWYG editor: Yes.

Pricing: Customized to your requirements, but starts at roughly $30k per year. You get your exact price by contacting the sales team.

Does it provide support and help when setting up and running tests? If so, what kind? Yes. You can even get a dedicated account manager to assist you with complicated projects.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes.

Can it connect with your analytics software? Yes.

#5: VWO Testing

VWO is another entry-level tool and is quite popular with marketers who are just getting started with A/B testing.

It offers a suite of tools that support many other marketing efforts, and its UI for setting up a test is very intuitive.

They offer the major A/B testing features that you might expect, along with the ability to run heatmaps, click recordings, on-page surveys, full-funnel tracking, full-stack tracking (for apps and tools), and even offer cart abandonment marketing features such as integrations with Facebook Messenger, push notifications and automated emails!

Another smart angle is their ‘Deploy’ feature, which lets you use the WYSIWYG editor to make changes to your website and push them live, separate from a test (although I highly recommend testing site changes first). What’s smart is that most A/B testing tools can do this, but VWO is the only one to advertise it, which, as a fellow marketer, I can respect!

The only downside is that the WYSIWYG editor can sometimes break, deleting the code and test setup before it goes live, so be sure to save a copy of the code before you hit publish.

WYSIWYG editor: Yes.

Pricing: It’s not on the website, but if you log in to the app via a free trial, you can see the starter prices. Plans for 10k unique visitors per month start at $199 per month, rising to $284 a month for 30k visitors and $354 a month for up to 50k visitors. (Beyond that, the pricing changes.)

Does it provide support and help when setting up and running tests? If so, what kind? Yes. You can call them when you need help or consult their resource page.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes.

Can it connect with your analytics software? Yes.

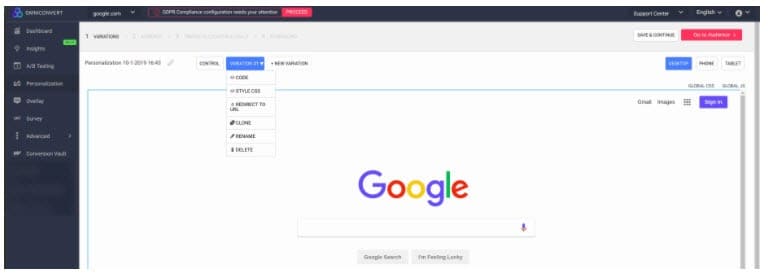

#6: OmniConvert

OmniConvert is primarily a testing tool for e-commerce, but that’s not to say you can’t use it for other types of businesses and sites.

Beyond its A/B testing platform, Explore, OmniConvert offers three complementary tools: Reveal, a customer retention and churn tracker; Adapt, an automated CRO platform; and Survey, a feedback tool for qualitative analysis. (Each is priced separately.)

WYSIWYG editor: Yes.

Pricing: Starts at $320 a month for 50k visitors (or $167 if paid annually), with additional costs if you use their other tools.

Does it provide support and help when setting up and running tests? Yes. What type? They have a bank of resources to help users get unstuck, chat support, email support, and phone numbers to call for help.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes. As an e-commerce-focused tool, it also tracks CLV (customer lifetime value) and other attributes, depending on which tool you use.

Can it connect with your analytics software? Yes. GA and others.

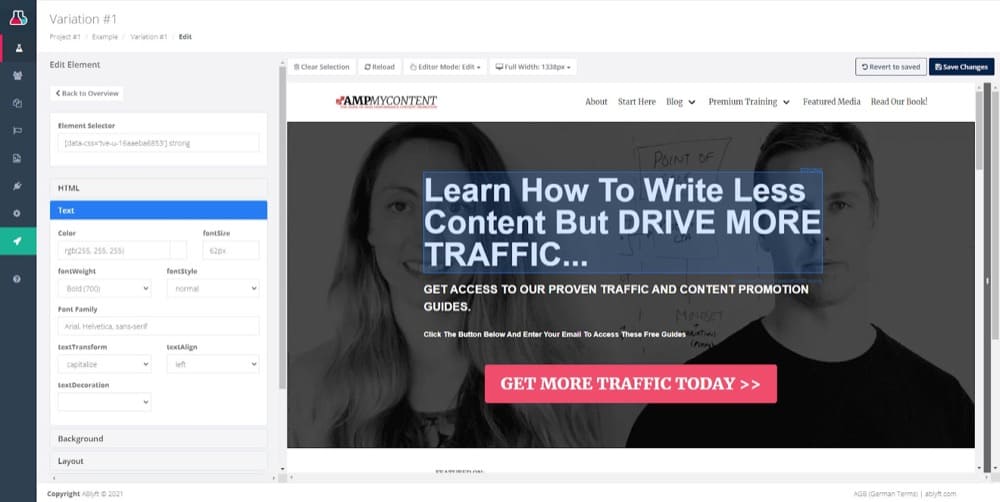

#7: ABLyft

You may not have heard of ABLyft before, but don’t let that sway you. They’re a great platform that has been busy building their tool and working with clients, but they haven’t done much marketing yet to grow their business.

Their primary focus seems to have been working directly with web developers who set up custom tests, and building their tool around those features and needs. This meant that for a while they had no WYSIWYG editor, but they’ve since started catering to less technical users.

At its price point, this could be a great option for those who want something better than a free tool.

WYSIWYG editor: Yes.

Pricing: Available upon request. I did find an older document where they offered limited tests for free for 5k users, then $329 a month after that ($279 a month if paying annually).

Does it provide support and help when setting up and running tests? If so, what kind? Yes. They have a basic knowledge base and live chat available at certain times of the day.

Does it provide real-time results? (and not delayed by x days) Yes.

Does the test also connect to your guardrail metrics? Yes.

Does the test show Revenue Per Variations in reports? Yes. As long as you set the goal to revenue tracking in the test setup.

Can it connect with your analytics software? Yes. GA and others.

Conclusion

There you have it, our in-depth guide to A/B testing marketing campaigns across 4 major traffic channels.

We’ve covered the types of tests, case studies of huge lifts, four major channels you can run A/B tests on, and the top 7 marketer-friendly A/B testing tools to help you become a proficient website optimizer. And remember, a tool is only as good as the person using it, so here’s how you can make your testing tool work as hard for you as possible.

So what are you waiting for?

Start improving your marketing today and see improvements across the board!

Written By

Daniel Daines Hutt

Edited By

Carmen Apostu