Does A/B Testing Impact SEO? Not If You Do it Right

Among the 200+ factors that determine a website’s search ranking, content, links, and speed are the three key ones.

Conversion rate optimization experiments (like personalizations, split URL and A/B testing) can affect each of these.

If you have a high-traffic landing page, you’d be worried about A/B testing with it because you wouldn’t want to:

- lose your content’s search ranking, or worse, be perceived as serving duplicate content

- look sneaky with redirects

- slow down your page or website. (Fact: some testing tools can add up to 4 seconds to your website’s loading time!)

Besides these, how your website appears to Google’s bot (Googlebot) or other search engine bots also matters for SEO. A/B testing can impact this as well.

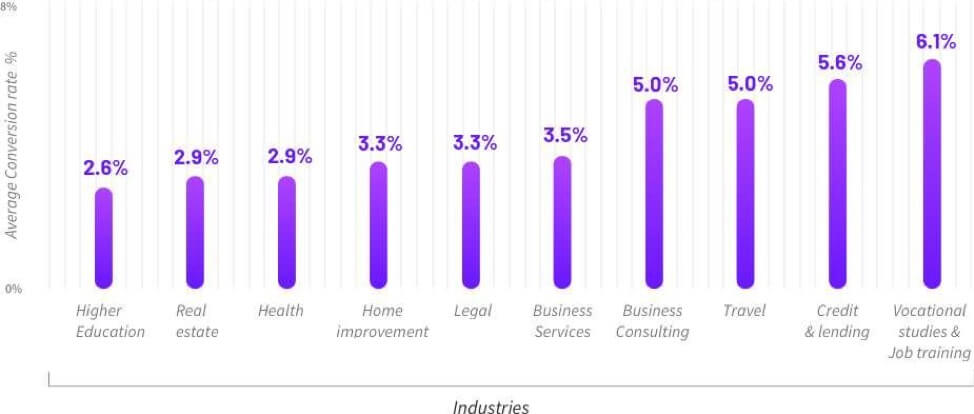

But if your landing page isn’t converting at 4.05% (which is the average conversion rate across industries), you can do better. This baseline lies at about 2%+ for B2C businesses.

Even if your pages convert in these ranges, you can still do better, as the top-converting pages in an industry report double-digit conversion rates.

There’s always room for optimization, which needs rapid testing. But the good thing is that even Google says…

Can A/B Testing and SEO Play Nice?

Google doesn’t just encourage website testing; it also offers its own A/B testing and experimentation solution called Google Optimize.

In its guidelines on A/B testing, Google points out that when done right, A/B testing doesn’t impact SEO at all:

Small changes, such as the size, color, or placement of a button or image, or the text of your “call to action” (“Add to cart” vs. “Buy now!”), can have a surprising impact on users’ interactions with your webpage, but will often have little or no impact on that page’s search result snippet or ranking.

With that clear, let’s now look at a few best practices to follow to ensure A/B testing impact on your page’s search ranking is negligible.

Avoiding Cloaking, On-page Content SEO, and Duplication Issues When Experimenting

A/B testing can look similar to cloaking because you essentially serve different content to different users and, potentially, to search engines.

Such content delivery, however, doesn’t constitute cloaking. If you read Google’s definition of cloaking, this becomes clear:

Cloaking is the practice of presenting a version of a web page to search engines that is different from the version presented to users, with the intention of deceiving the search engines and affecting the page’s ranking in the search index.

Optimizers don’t do this, so this concern doesn’t apply. You can, however, be perceived to be doing this if you program your experiment to serve Googlebot a specific version (more on this below).

Let’s look at the content aspect of SEO that can get impacted by A/B testing and other experimentation methods.

During A/B testing, you change some part of your page’s content. This could be your page’s headline or its CTA button copy or color. If this change affects your on-page SEO, then it could become a problem.

For instance, if you’re A/B testing your page’s headline, you’d have to think in terms of SEO too and not just conversions.

In such cases, you need to come up with something that should work both SEO- and conversion-wise so that there’s no conflict. And if the variant beats the original, rolling out the A/B tested headline shouldn’t affect SEO at all.

Also, because Google has already indexed your static (or original) page, the content that you serve through your experimentation tool via JavaScript won’t impact your on-page SEO. Besides, Googlebot can read your JavaScript-based content as well.

Balancing this aspect of A/B testing and SEO can be somewhat more challenging when you do personalizations because content duplication and content changes in the personalized variants can be higher. But even here, don’t forget that Google already has its indexed version of your static page (and you can set the original page as the canonical version and maintain its SEO value; more on this below).

Another thing to keep in mind here, as Rand Fishkin of Moz explains, is that a page you are A/B testing is most likely a conversion page and not a key SEO pillar page. Such pages rarely have to serve both SEO and conversion goals. He elaborates citing Moz’s pricing page. He says that its job isn’t to rank for “SEO tools.” Instead, it’s “built to convert. It’s a pricing page — it’s going to let you choose which price. It doesn’t need to target any search keywords.” So naturally, A/B testing on the page content won’t affect your SEO.

Note, however, that this doesn’t hold for B2C or eCommerce businesses, because in these businesses the landing pages are also conversion pages.

This may not always be so straightforward, but you surely get the drift.

Setting up Redirect Experiments Correctly

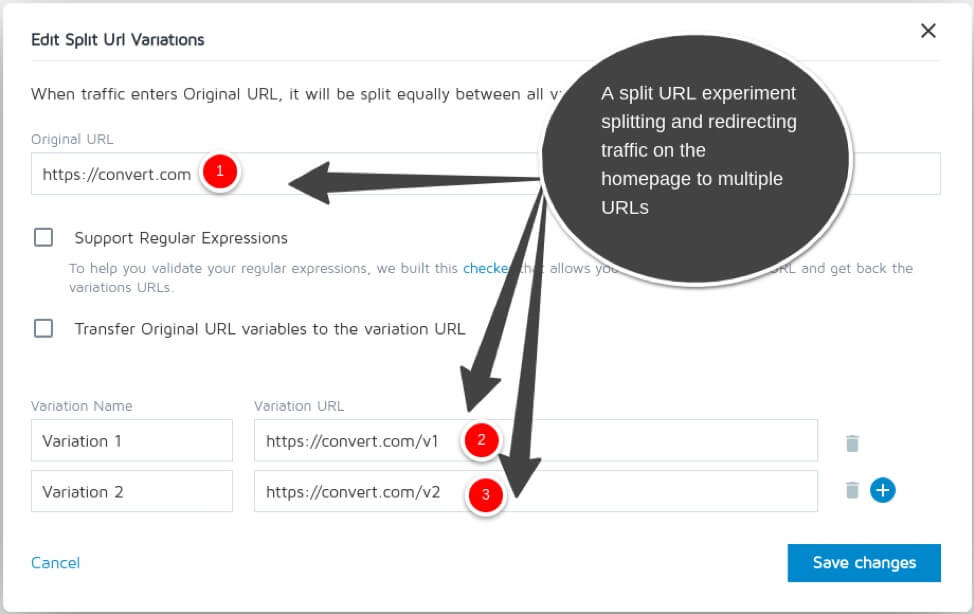

Redirect experiments (also known as split URL experiments) are ideal for testing radically different versions against the originals. For example, for testing a fully redesigned homepage against what a website currently uses.

When you create a redirect experiment, you send (or rather redirect) users and search engines to different URLs than the ones they intended to land on.

Below you can see a redirect experiment that’s set up on the homepage and redirects the homepage traffic to two different URLs. The homepage traffic gets split across all three URLs (the original + two versions):

When search engines come across redirects, they use the HTTP status code you return to understand what’s happening. So, for instance, if you set up a 301 redirect, search engines will know that you’ve permanently moved the old URL to the new one. From the SEO perspective, this is the best redirect for a permanent move as it retains all the SEO juice.

However, since an experimental redirect isn’t a permanent one, instead use a 302 redirect which search engines understand to be of a temporary nature.
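As a rough illustration of the difference, here is a minimal Python sketch of the response a server might send for an experiment redirect. The function name and the example URL are hypothetical, and a real testing tool would handle this for you; the only point is that the status code is 302 (temporary) rather than 301 (permanent).

```python
from http import HTTPStatus
from typing import Dict, Tuple


def temporary_redirect(variant_url: str) -> Tuple[int, Dict[str, str]]:
    """Build the status code and headers for an experiment redirect.

    Hypothetical helper: it is not tied to any particular web framework.
    """
    # 302 Found tells clients and crawlers that the move is temporary,
    # unlike 301 Moved Permanently.
    return HTTPStatus.FOUND.value, {"Location": variant_url}


# Hypothetical variant URL used only for illustration.
status, headers = temporary_redirect("https://example.com/home-b")
print(status, headers["Location"])
```

Most web frameworks expose an equivalent option on their redirect helpers, so in practice this usually comes down to choosing the right status code in the tool or framework you already use.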

Also, instead of trying to stop the search engines from indexing the variants of the original page (by using the ‘noindex’ tag), use the canonical attribute in them and link to the original URL.

When search engines see the canonical attribute, they understand that SEO-wise, your preferred URL is the original page URL, and not the URLs you’re redirecting the traffic to. This reduces the risk of the variants getting indexed while the original URL drops out of the index (if it gets accidentally perceived as a duplicate or for whatever reason).

Each of your experiment variants must include a <link> element with the attribute rel="canonical" in its <head> section, with its href pointing to the original URL.
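To make the markup concrete, the sketch below generates the canonical element for a variant page in Python. The helper name and the example URL are assumptions made for illustration; if your variants are static HTML, you would simply paste the resulting tag into each variant’s head section.

```python
from html import escape


def canonical_link_tag(original_url: str) -> str:
    """Return the <link> element a variant page should carry in its <head>.

    Hypothetical helper: original_url is the page the experiment runs on.
    """
    # Escape the URL so characters such as & or quotes cannot break the attribute.
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


# Hypothetical original URL used only for illustration.
print(canonical_link_tag("https://example.com/"))
# prints: <link rel="canonical" href="https://example.com/">
```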

You can also use JavaScript-based redirects.

Once your experiment ends, publish the winning version on the original URL. After that, search engine bots will reindex the revised content on the original URL in due course.

A/B Testing Without Killing Speed and Performance

Speed links directly to organic rankings and also to conversions. And so both SEO and conversion optimizers optimize for it.

Unfortunately, though, running experiments can cause some lag because a CRO tech stack (even a lean one) causes additional requests when a website is requested, and the resulting back and forth takes time.

For example, if you want to run experiments, at the very least, you’ll need:

- A web analytics tool: A tool like Google Analytics that measures and gives you quantitative insights (such as a high drop-off on a key page) and highlights the leaks in your conversion funnel. (These tools can cost additional requests; even Google Analytics adds 3 HTTP requests.)

- A heatmap tool or a user testing tool (or both): A user behavior analytics tool like Hotjar that shows you how users behave on your website, often shining light on the “why’s” behind the data analytics tools. Or, a user testing tool like UsabilityHub that lets you get rich qualitative optimization insights right from your users.

- An A/B testing tool or a multivariate tool: A tool like Convert that delivers your experiments. Some experimentation tools that aren’t optimized for performance bleed speed and can introduce frustrating delays of multiple seconds.

Although most A/B testing tools use asynchronous loading (that’s optimized for speed), they can’t be said to be zero impact.

The speed impact is also worse on the first load because, after that, caching makes the subsequent requests faster.

Note, however, that a lot of times, these speed lags won’t be perceptible to the end users as they won’t impact the loading of the “hero” elements of your website. However, lags do happen.

One way to ensure that the speed impact of A/B testing is minimal is to build an optimization stack that’s optimized for speed. It’s also important to configure the tools right, as wrong setups can kill speed.

Ending Experiments on Time

The statistical significance you aim for determines how long your A/B tests will run. If you’re looking to hit the 95% statistical significance mark (that only one in five experiments achieve) and you have limited traffic, your A/B test will take longer to end.
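To get a feel for how traffic and the significance target drive test duration, here is a back-of-the-envelope Python sketch. It uses the standard normal-approximation sample size formula for comparing two conversion rates; the 80% power, the two-sided test, and the example traffic numbers are assumptions for illustration, not figures from any particular testing tool.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant to detect the given lift.

    Assumes a two-sided test on two proportions (normal approximation).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(n_per_variant, variants, daily_visitors):
    """Days needed if daily traffic is split evenly across all variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 4% baseline conversion, hoping to detect a 20% relative lift.
n = visitors_per_variant(baseline_rate=0.04, relative_lift=0.20)
print(n, "visitors per variant")
print(days_to_finish(n, variants=2, daily_visitors=1000), "days at 1,000 visitors/day")
```

With these inputs the estimate lands at roughly ten thousand visitors per variant, which is why low-traffic pages can take weeks to reach the 95% mark. Halving the lift you want to detect roughly quadruples the required sample.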

Whether or not experiments reach statistical significance, most optimizers end them after a set period (a week or two usually). And only the winning version or the original one is implemented.

Ending an A/B test on time and rolling out the better-performing version is important because it reduces the chance that Googlebot will keep finding long-term redirects or multiple versions of a certain page on your website.

Treating Search Engine Bots Like Regular Users

Blocking Googlebot from crawling or indexing your experiment’s versions or redirects (so you can avoid duplicate content issues) is a bad idea.

Matt Cutts (former head of Google’s webspam team) recommends that optimizers not do anything special for Googlebot:

“Treat Googlebot just like any other user and don’t hard-code our user-agent or IP address.”

Reiterating the same advice, John Mueller (Google) warns that special-casing Googlebot can seem fishy:

“Ideally you would treat Googlebot the same as any other user-group that you deal with in your testing. You shouldn’t special-case Googlebot on its own, that would be considered cloaking.”

Focus on setting up your variants and redirects correctly and keeping them fast, and trust Googlebot and other search engine bots to correctly process your experiments. They won’t just index your experiments correctly but will also note the eventual updates you make as you roll out the winning version.
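One way to picture “no special-casing” is a variant assignment that never looks at who is asking. The Python sketch below is an assumed, simplified bucketing scheme (the variant names, experiment ID, and visitor ID format are all hypothetical); the point is that the function takes no user-agent or IP address, so a crawler is bucketed exactly like any other visitor.

```python
import hashlib

# Hypothetical variant names for a three-way split (original + two versions).
VARIANTS = ["original", "variant-a", "variant-b"]


def assign_variant(visitor_id: str, experiment_id: str) -> str:
    """Pick a variant from the visitor and experiment IDs only.

    Deliberately has no user-agent or IP parameter: bots and humans
    go through the same assignment logic.
    """
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The same visitor always hashes to the same bucket for a given experiment.
    return VARIANTS[int(digest, 16) % len(VARIANTS)]


print(assign_variant("visitor-123", "homepage-redesign"))
```

How the visitor ID is obtained (a cookie, for example) is outside this sketch; what matters is that nothing in the assignment path branches on the crawler’s identity.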

CRO Doesn’t Work Against SEO; It’s the Most Natural Thing to Do After SEO

Occasionally, optimizers might hypothesize experiments that seem to threaten SEO. Think: experimenting with a short-form homepage copy instead of the well-performing long-form SEO copy a website might currently use.

Running such experiments can feel stressful, given their potential SEO impact.

However, good optimizers only hypothesize experiments based on insights that data points to. And with a little creativity and collaboration, search and conversion optimizers can get the best of both disciplines. With Google’s page experience update rolling out in 2021, conversion and search optimizers will have to work together to ensure a better experience for visitors, as well as better rankings and conversions.

Besides, if you run an experiment that doesn’t lead to more conversions, you can return to your original version. Googlebot, which would have indexed your experiment, will note that you’ve reverted to the original version. Any search traffic dip that you may have experienced will also get back to normal with the reindexing. Teams that run hardcore SEO experiments have also reported SEO ranking and traffic “normalizing” after reverting to the original version following unsuccessful experiments.

So you can think of this — at the most — as a small, temporary side effect of experimentation.

What about you? Has the fear of SEO ranking dips kept you away from running A/B tests? Have you tried a lean, flicker-free tool like Convert yet?

Written By

Disha Sharma

Edited By

Carmen Apostu