Meta Andromeda: The algorithm you can’t ignore

How to navigate the new rules as a CRO specialist

If you’ve been running Meta ads lately and felt like something shifted—like your targeting suddenly matters less and your creatives matter more—you’re not imagining things.

Meta’s Andromeda update rolled out through 2025, completing its global deployment in October. It’s probably the biggest change to how ads get delivered since iOS 14 broke everyone’s tracking.

But here’s what bothers me: most of the coverage focuses on media buyers. Almost nobody is talking about what this means for CRO specialists, and trust me, it changes everything about how we should think about testing.

Let me break it down.

What is Andromeda anyway?

Andromeda is actually our nearest large galaxy: a massive spiral with roughly a trillion stars. Maybe Meta was being clever, hinting at the connectivity and vast potential of the update… or maybe they just wanted to freak us out.

Either way, the name stuck.

Andromeda is a filtering system, not a single brain.

When an ad impression becomes available, Meta isn’t picking from a handful of ads—it’s choosing from millions. Three systems work together:

- Andromeda handles retrieval, narrowing millions of ads to a shortlist based on predicted value using deep neural networks.

- GEM (Generative Ads Model) predicts which creative types resonate with each user based on behavior patterns.

- Lattice determines optimal timing, placement, and journey stage for ad delivery.

You don’t need to remember the names. The key takeaway: most ads never even get a chance to compete.
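To make the retrieval idea concrete, here is a toy sketch in Python. It is not Meta's implementation: the `Ad` class, the `predicted_value` scores, and the shortlist size are all invented for illustration. It only shows the shape of the step described above, where a large pool is cut down to a shortlist before anything competes.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Ad:
    ad_id: str
    predicted_value: float  # hypothetical score; Meta's real scoring is not public


def shortlist(ads: list[Ad], k: int) -> list[Ad]:
    """Return the k ads with the highest predicted value, best first."""
    return heapq.nlargest(k, ads, key=lambda ad: ad.predicted_value)


candidates = [
    Ad("generic_product_shot", 0.02),
    Ad("ugc_testimonial", 0.31),
    Ad("problem_solution_video", 0.27),
    Ad("headline_tweak_of_winner", 0.05),
]

# Only the shortlisted ads move on to compete; the rest get no delivery at all.
finalists = shortlist(candidates, k=2)
print([ad.ad_id for ad in finalists])
```

In this toy pool, the two low-scoring ads never reach the next stage, which is the "zero spend" experience from the advertiser's side.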

What has Andromeda changed?

At its core, Andromeda changed how Meta decides which ads show up for which users. In simple terms, since around October 2025:

- Targeting matters less than it used to. Your creatives now steer delivery: Meta reads them to work out which audience each ad suits best.

- Ads that Meta deems “signal poor” just don’t get shown (thanks a lot, Meta).

Signal-poor ads (think: generic product shots with minimal context) give the algorithm nothing to optimize against, whilst signal-rich ads give Meta clear behavioral data to work with—video watch time, engagement patterns, clear audience cues in the creative itself.

Most importantly, Meta now decides which ads even enter the auction based on predicted value, not just targeting or bids.

So what does predicted value actually mean?

Even though you still choose a campaign goal — like purchases — Meta is really optimizing for expected economic value:

Meta revenue × advertiser success

That single shift explains a lot of the weird behavior advertisers have been noticing over the past few months:

- Profitable accounts suddenly dropping in performance by 45%-50%

- New ads getting no spend, even with proven concepts

- The algorithm favouring 1-2 ads with purchase history and ignoring everything else

It isn’t you, it’s them for sure.

Creatives now act as targeting

Audiences haven’t disappeared, but their importance has clearly declined. The old targeting methods were already fading, and Andromeda just made it official.

If I’m honest, despite the dramatic name, this is mostly an acceleration of trends we’ve been seeing for a while:

- Lookalike and interest audiences weakening over time

- Fewer interest options available

- A strong push towards broad targeting + automation

Meta’s recommendation is simple: go broad and use Advantage+ (more on that later).

It sounds easy, but in practice, it’s uncomfortable.

“But what if my audience is niche?”

This is where most e-commerce brands struggle.

Imagine you’re selling a menopause supplement. Meta is basically saying:

“Just include men and women who are probably too young to be experiencing menopause. I’ve got this, babe.”

That’s hard; we are optimizers, we like data (and control).

Then imagine trying to explain to stakeholders why a few men are seeing your ads… yeah, not always fun.

But in my experience, yes, it will reach a few men, and no, that doesn’t automatically mean anything is broken. That’s just how the system works now. The algorithm is generally good at self-correcting—those few men who see the ad likely won’t engage, which teaches the system to stop showing it to similar profiles. The ‘waste’ is usually smaller than it feels.

Meta claims these changes can drive around 8% improvements when set up correctly. Take that number with a pinch of salt, but directionally, the point still stands.

What “getting into the auction” means

Not all ads compete. You can set an ad live only for it to get zero spend. Ouch.

Ads that look economically weak (for Meta) are filtered out before they ever drive traffic. On the one hand, great. You don’t waste money on truly bad ads. On the other hand, it royally sucks, especially for startups:

- You might spend time producing a creative idea and never even get a chance to test it.

- You also have less data (typically), meaning less model confidence, meaning it’s harder to get into the auction in the first place.

It’s like setting up a full A/B test, only for your testing tool to say:

“Nope. We’re not convinced this variant will win, so you’re not allowed to test it.”

I don’t know about you, but I prefer learning lessons firsthand. That said, try not to see it as a bad thing. Abigail Laurel Morton, a Creative Manager at Aperture, explains it as follows:

“What looks like creative failure is often just Meta selecting the signals it understands best. Creative volume allows the algorithm to identify patterns and predict value.

Out of twelve UGCs, Meta might only scale three, but those three unlock multiple new avenues for iteration across creators, hooks, formats, and messaging.”

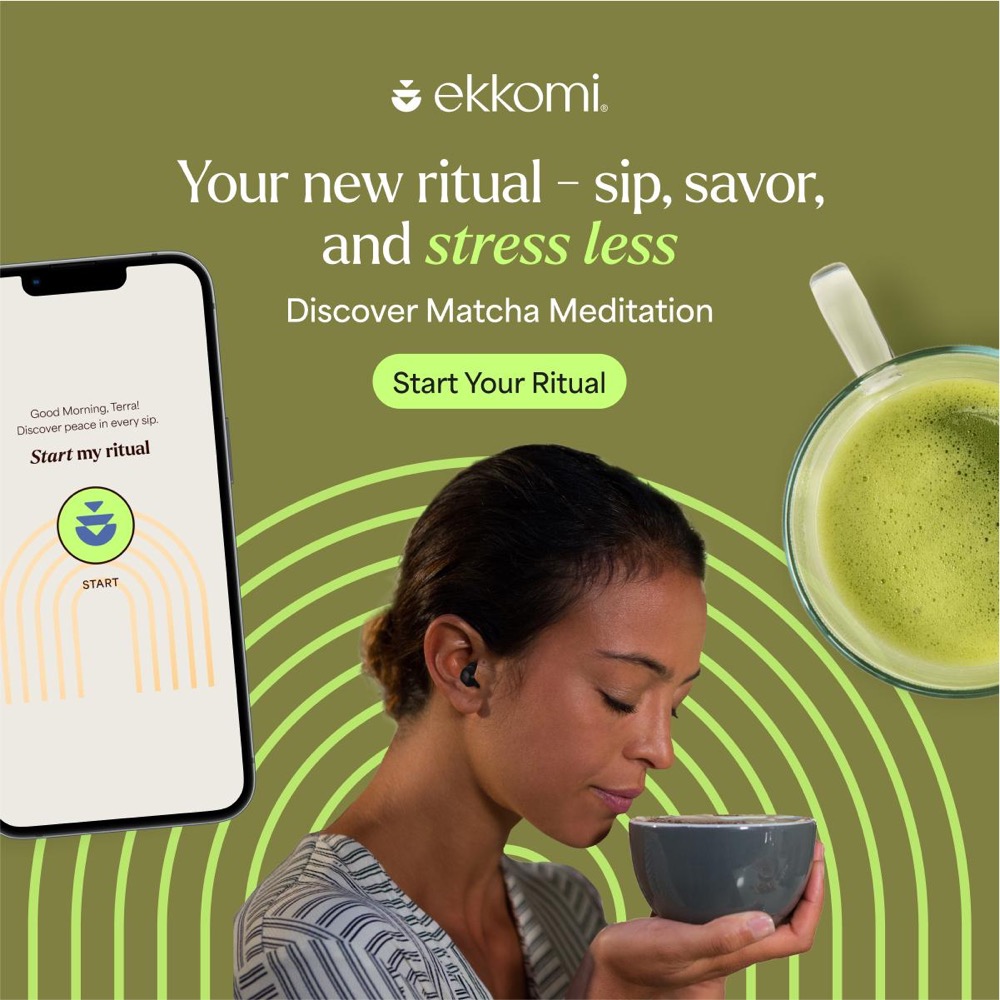

Here are some creatives Abigail has seen scale. They feel organic and stand out:

So how do you fight this?

The advice I keep hearing is “Better quality creatives.” So there you have it.

Just kidding, in my opinion, it’s pretty useless advice.

Let’s be more practical.

Focus on:

- The first 3 seconds of video — Make them genuinely scroll-stopping

- Clarity in the ad — Who is the ad for? Why is it different?

- Creative diversity — Give the algorithm more signals to work with

That last point is the most important one. You also really need to understand what makes for a strong ad. Back to Abigail, who explains it as follows:

“Better quality creatives aren’t about looking more polished; they’re about giving Meta clearer signals grounded in real customer insight. Design is what stops the scroll, but copy is what carries users through the funnel. When messaging is rooted in actual customer psychology, real jobs-to-be-done, real pain points, it helps people immediately recognise “this is for me.” That self-selection is what improves performance.

Meta isn’t optimising for aesthetics, it’s optimising for clarity of intent. The better your creative encodes who the customer is and why they care, the faster the system learns and the more efficiently it can scale.”

For example, the following ads Abigail has tested are clearly bottom of the funnel, pushing the algorithm to optimise for action:

What creative diversity looks like

Under Andromeda, small tweaks don’t count as new information.

If you take a winning ad and just:

- Change the headline

- Swap a background

- Slightly adjust the copy

Meta largely sees that as the same ad — good job diminishing our attempts at optimizing, Meta.

Side note: yes, slight bitterness here. I hold a small grudge against Meta for all this. But we need them, so I promise to stay constructive and keep giving solutions.

True creative diversity means variation across:

- Hooks: the opening line, visual, or first frame

- Formats: short video, long video, UGC, statics, carousel

- Audiences: different customer segments and motivations

- Concepts: pain-led, product-led, social proof, outcomes

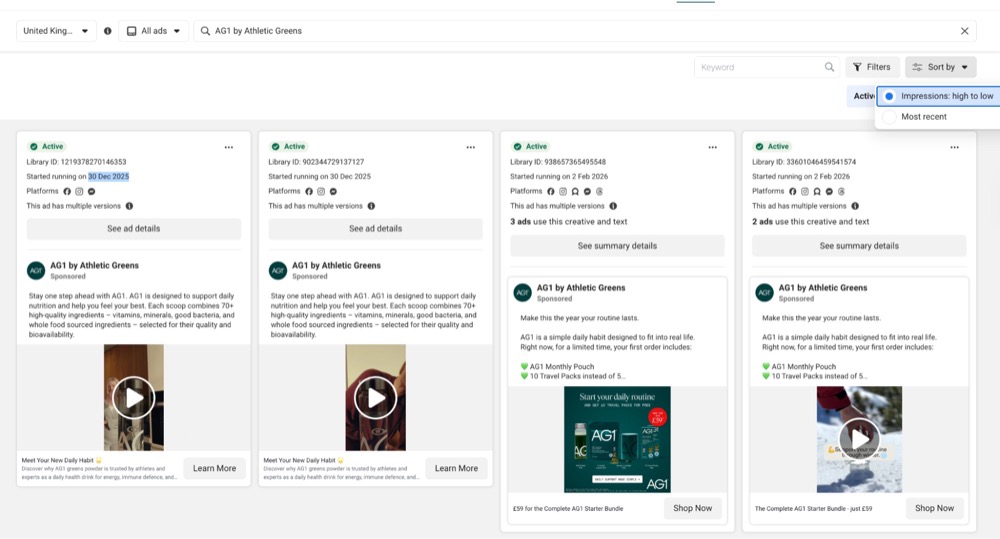

If you’ve ever looked at Meta’s Ad Library and noticed certain creatives running for months, that’s usually a good sign they’ve passed the auction filter.

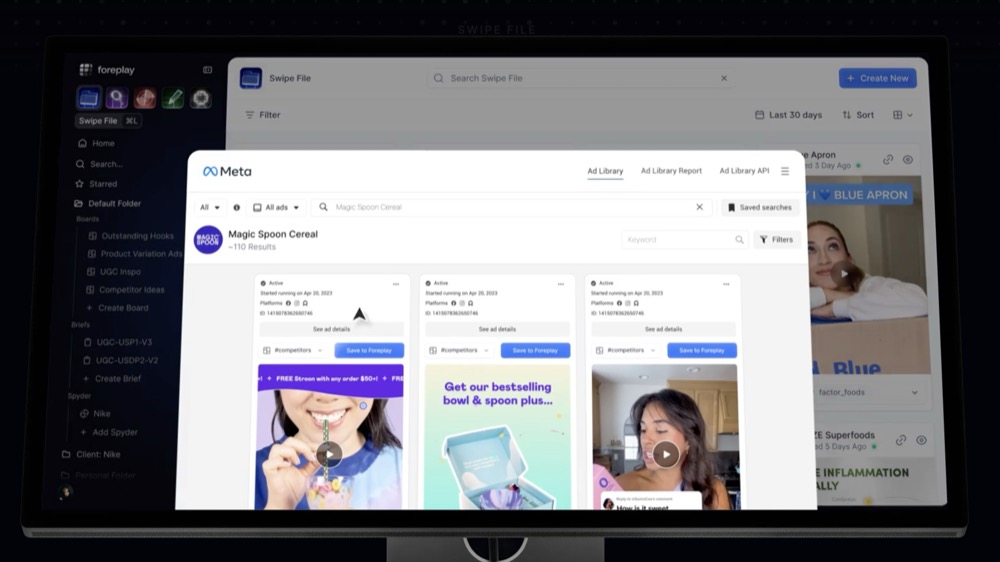

Another tip: use platforms like Foreplay to see which creatives are trending and what the algorithm is prioritizing.

In general, Meta favors ads that look native and relatable, blending seamlessly into users’ feeds.

How Advantage+ fits into all of this

Introduced in 2022, Advantage+ doesn’t refer to a single feature. It’s used across multiple layers of Meta to indicate where automation takes over.

In simple terms, Advantage+ removes manual optimization for:

- Audiences

- Placements

- Sometimes, even budget distribution

More importantly, it creates faster learning by imposing fewer constraints. Instead of you narrowing the system, Meta gets more freedom to figure out what works.

Advantage+ trains Andromeda by linking:

- Creative patterns

- Downstream conversion

- Eventual revenue

It also enables simpler account structures. Meta consistently prefers:

- One campaign (especially CBO campaigns)

- One ad set

- Many strong creatives

Over complex multi-ad-set setups.

And yes, you can now run far more than the old “3–6 ads per ad set” rule of thumb a lot of media buyers used.

Data-backed signals from $14.5M in spend

The Ecommerce Playbook Podcast (from Common Thread Collective) analyzed $14.5M in Meta BFCM (Black Friday/Cyber Monday) spend across 147 brands and 53,000 ads, covering different bidding strategies and creative formats. The analysis pulls out patterns in what seems to be working (and what isn’t) across accounts.

A quick caveat: we know from testing that “best practices” aren’t universal, so consider these more test ideas than hard rules.

1. Bidding strategy can impact Average Order Value

Three bidding strategies accounted for 99% of spend:

- Lowest cost (highest volume)

- Min-ROAS

- Cost cap

Most media buyers stick to Lowest Cost, but an interesting finding:

- Lowest cost campaigns had an average AOV of ~$15

- Min-ROAS and Cost Cap campaigns averaged around $150

So if your AOV feels too low, testing alternative bidding strategies — especially for higher-priced products — could help.

Note: those alternative strategies didn’t reach the same scale as Lowest Cost, so there’s a tradeoff between value and volume.

2. Video seemed to scale better than static

As spend increased year over year, static ads hit diminishing returns. Video, on the other hand, could absorb significantly more spend without breaking efficiency.

This likely comes down to signal density: video provides stronger behavioral signals, like watch time and engagement, which help Andromeda decide who to show ads to.

3. Volume matters

A huge number of ads never received meaningful spend, while a small group drove the majority of revenue. The takeaway isn’t that “most ads fail”; we already knew that.

The real lesson: Andromeda requires many attempts to find scalable winners. Low-volume, high-spend setups showed no evidence of working. Accounts that scaled successfully had a high volume of ads running.

4. UGC had a higher chance of producing scalable winners

UGC-style video ads were nearly twice as likely to become high-spend winners compared to more standard creative.

They also drove a meaningfully higher AOV. Not because UGC is magic, but because it provides clearer, more credible signals for the model, helping Andromeda understand who you’re targeting and what you’re offering.

The impact on CRO

This is where things get spicy. Meta is now pre-qualifying users before they even reach your landing page by being so selective about who they show ads to.

That means traffic is no longer neutral or random. For CRO specialists, this changes everything.

You often need to validate demand through your creatives first before investing heavily in landing pages. Otherwise, you risk optimizing a page that never sees the right users.

Why CRO tests can break under Andromeda

Different creatives now send different quality users, even within the same campaign. That means you might see:

- The same number of users per variant

- But wildly different intent levels depending on the type of creative

Which makes test results feel inconsistent or “wrong.”

In practice:

- Traffic is no longer stable over time

- Traffic composition changes as Meta learns

- Variant performance can shift without any page changes

The takeaway: you need to be extra cautious when analyzing results.
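A toy example makes the risk concrete. The numbers below are invented: both page variants get the same number of sessions and convert identically within each creative type, yet the pooled conversion rates differ because the creative mix behind each variant differs. This is only a sketch of the mix effect, not a model of how Meta allocates traffic.

```python
# Invented sessions and conversions per (page variant, creative type).
# Within each creative type, both variants convert at the same rate.
data = {
    ("A", "UGC"): {"sessions": 800, "conversions": 40},    # 5%
    ("A", "Video"): {"sessions": 200, "conversions": 4},   # 2%
    ("B", "UGC"): {"sessions": 200, "conversions": 10},    # 5%
    ("B", "Video"): {"sessions": 800, "conversions": 16},  # 2%
}


def pooled_rate(variant: str) -> float:
    """Conversion rate for a variant with all creative types lumped together."""
    rows = [row for (v, _), row in data.items() if v == variant]
    sessions = sum(row["sessions"] for row in rows)
    conversions = sum(row["conversions"] for row in rows)
    return conversions / sessions


def segment_rate(variant: str, creative_type: str) -> float:
    """Conversion rate for one variant within a single creative type."""
    row = data[(variant, creative_type)]
    return row["conversions"] / row["sessions"]


for variant in ("A", "B"):
    print(f"{variant} pooled: {pooled_rate(variant):.1%}")
    for creative_type in ("UGC", "Video"):
        print(f"  {creative_type}: {segment_rate(variant, creative_type):.1%}")
```

Pooled, variant A looks far stronger (4.4% vs 2.6%), but within each creative type the two pages perform identically. The "win" comes entirely from who was sent, not from the page.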

How to analyze your results (without gaslighting yourself)

When interpreting outcomes, keep in mind:

- Winning variants may just be artefacts of the creative mix

- Losing variants may never have seen high-intent users

- Page-level conclusions always need creative context

A simple sanity check: analyze which ads actually drove traffic to each variant. If UTM parameters are being stripped (either through a redirect A/B test or Meta), check out the guide I wrote earlier for testing with Meta.
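That sanity check could look something like the sketch below. It assumes you can export one row per session with the page variant and the ad name, and that the ad name arrives via the `utm_content` parameter. The ad names and rows are made up.

```python
from collections import Counter, defaultdict

# Hypothetical session export: (page variant, ad name taken from utm_content).
sessions = [
    ("control", "ugc_testimonial_v1"),
    ("control", "ugc_testimonial_v1"),
    ("control", "ugc_testimonial_v1"),
    ("control", "static_offer_v2"),
    ("variant", "ugc_testimonial_v1"),
    ("variant", "static_offer_v2"),
    ("variant", "static_offer_v2"),
    ("variant", "static_offer_v2"),
]


def creative_mix(rows):
    """Return, per page variant, the share of sessions driven by each ad."""
    counts = defaultdict(Counter)
    for variant, ad_name in rows:
        counts[variant][ad_name] += 1
    return {
        variant: {ad: n / sum(ads.values()) for ad, n in ads.items()}
        for variant, ads in counts.items()
    }


for variant, shares in creative_mix(sessions).items():
    for ad, share in sorted(shares.items()):
        print(f"{variant:8} {ad:20} {share:.0%}")
```

If the shares look very different between variants, as they do in this invented data, treat any page-level winner with suspicion until you have compared like with like.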

What should optimizers do now?

The biggest shift (if you aren’t already doing it): treat creative and landing page as one optimization loop, not two separate systems.

Don’t ask: Which landing page converts better?

Ask: Which creative-landing page combination creates the highest value?
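Here is a minimal sketch of the difference between those two questions, using revenue per session as a stand-in for "value". The creatives, pages, and numbers are all invented, and your own value metric may well be different (profit, LTV, and so on).

```python
from collections import defaultdict

# Hypothetical rows: (creative, landing page, sessions, revenue).
rows = [
    ("static_offer", "product_page", 500, 1500.0),
    ("static_offer", "landing_page", 500, 1000.0),
    ("video_problem", "product_page", 500, 500.0),
    ("video_problem", "landing_page", 500, 1250.0),
]

# Question 1: which landing page earns more per session overall?
page_totals = defaultdict(lambda: [0, 0.0])
for _, page, sessions, revenue in rows:
    page_totals[page][0] += sessions
    page_totals[page][1] += revenue
page_rps = {page: rev / sess for page, (sess, rev) in page_totals.items()}

# Question 2: which page works best for each creative?
combo_rps = {(creative, page): rev / sess for creative, page, sess, rev in rows}
best_page = {}
for (creative, page), value in combo_rps.items():
    current = best_page.get(creative)
    if current is None or value > combo_rps[(creative, current)]:
        best_page[creative] = page

print(page_rps)
print(best_page)
```

In this made-up data the landing page wins overall, yet the static ad earns more when it goes straight to the product page. The page-only question hides that.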

How does this impact your test design?

1. Match destination to intent, not traffic volume

Be intentional about aligning creative intent with the destination experience.

For example, with one client, we’re testing within the same ad set:

- Bottom-of-funnel statics going straight to product pages

- Video ads going to a landing page

Same audience, very different creatives, very different intent levels.

Past data already showed that some ads drove high CTR but low conversions. The journey needed to change, not just the page.

2. Segment CRO analysis by creative type

We already talked about segmenting by ad; another useful approach is segmenting by creative type:

- UGC often attracts warmer, more trust-driven users

- Statics tend to skew bottom-of-funnel

- Video often brings in higher curiosity but lower immediate intent

This is where naming conventions become really useful. Name your ads in a way that lets you easily split and analyse them, for example:

- UGC | BOF | Testimonial | Video | V1

- Static | BOF | Offer | Image | V2

- Video | TOF | Problem-Solution | Short | V1

- UGC | MOF | Creator Demo | Video | V3

This approach lets you learn more and spot patterns across ads, especially since the data per individual ad is smaller under Andromeda.
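As a rough sketch of how that split could work in practice, the snippet below parses pipe-separated ad names and rolls results up by creative type. The field labels are my reading of the example names above, not an official schema, and the session and conversion counts are invented.

```python
from collections import defaultdict
from typing import NamedTuple


class AdName(NamedTuple):
    # Field labels are an interpretation of the example names, not a standard.
    creative_type: str
    funnel_stage: str
    concept: str
    fmt: str
    version: str


def parse_ad_name(name: str) -> AdName:
    """Split 'UGC | BOF | Testimonial | Video | V1' into labelled fields."""
    parts = [part.strip() for part in name.split("|")]
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {name!r}")
    return AdName(*parts)


# Invented (sessions, conversions) per ad, keyed by ad name.
results = {
    "UGC | BOF | Testimonial | Video | V1": (400, 20),
    "Static | BOF | Offer | Image | V2": (300, 12),
    "Video | TOF | Problem-Solution | Short | V1": (500, 5),
    "UGC | MOF | Creator Demo | Video | V3": (100, 5),
}

# Roll individual ads up into [sessions, conversions] per creative type.
by_type = defaultdict(lambda: [0, 0])
for name, (sessions, conversions) in results.items():
    totals = by_type[parse_ad_name(name).creative_type]
    totals[0] += sessions
    totals[1] += conversions

for creative_type, (sessions, conversions) in by_type.items():
    print(f"{creative_type}: {conversions / sessions:.1%} over {sessions} sessions")
```

The same parsed fields let you group by funnel stage or concept instead, which helps when any single ad has too little data to judge on its own.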

3. Rethink classic A/B testing assumptions

Traditional A/B testing assumes:

- Random traffic

- Stable traffic

- Comparable users

Under Andromeda, none of these are guaranteed. Yeah, that won’t make you popular in a room of CRO specialists.

What to do instead:

- Run tests long enough to account for Meta’s learning phase

- Be skeptical of early wins or losses

- Re-evaluate results once delivery stabilizes

How long to run your test depends partly on how long your users need to decide. As a rule of thumb, creatives now need 4-7 days and meaningful spend before you make kill decisions. The old 24-48 hour rule doesn’t apply when the algorithm needs time to find micro-audiences for each creative. Keep that in mind when determining your test length.
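If you want to make that rule hard to skip, a small guard like the one below can sit in front of any kill decision. It is a sketch only: the 4-day minimum is the lower end of the window above, and the `min_spend` default is a placeholder you would need to set per account.

```python
def ready_for_kill_decision(
    days_live: int,
    spend: float,
    *,
    min_days: int = 4,         # lower end of the 4-7 day window
    min_spend: float = 100.0,  # hypothetical threshold; set per account
) -> bool:
    """True once an ad has run long enough and spent enough to be judged."""
    return days_live >= min_days and spend >= min_spend


print(ready_for_kill_decision(days_live=2, spend=400.0))  # too early
print(ready_for_kill_decision(days_live=5, spend=20.0))   # too little spend
print(ready_for_kill_decision(days_live=5, spend=400.0))  # fair to evaluate
```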

Not to give you an excuse, but sometimes it really isn’t your page’s fault; it’s who Meta sent. And other times, yeah, your page is the problem.

The TL;DR for dealing with Andromeda

I get it, this all feels like a whole new galaxy. But think of it more like Meta taking some of the work off your hands (see, trying to stay positive here!).

Here are a few simple rules for embracing Andromeda:

- Targeting: Go broad, use Advantage+, and treat lookalikes as signals, not constraints

- Creative diversity: Explore real variation, not cosmetic tweaks

- Audience learning: Let performance insights shape future creatives

- Campaign structure: Keep it simple, don’t fear more ads in one ad set

- Automation: Advantage+ is now the default, whether we like it or not

And finally, don’t panic if you’re not seeing that magical (and honestly modest) 8% lift. It assumes a “perfect” setup in a system that’s increasingly a black box.

With less control, focus on what you do control:

- A strong, consistent stream of diverse creatives

- Solid landing pages (what you’re great at)

- And keep doing what we do best as optimizers… experiment.

Written by Daphne Tideman

Edited by Carmen Apostu