Why Tests Fail: Possible Mistakes When Conducting Tests

Not too long ago, the Convert Academy hosted a webinar presented by Nazli Yuzak, a Senior Digital Consultant at Dell who worked in the optimization industry before joining the folks at Dell. She first worked at a global education company before venturing into e-commerce, two stints that took up five years of her life. But this post is not a biography, so we’ll spare you most of the details, though you can still catch more of that in the presentation here.

Given her enviable experience in the industry, Nazli is well placed to speak about tests and why they fail. The first session covered what a failed test actually means, and why gaining some insight from a test is a good thing. See here: Why Tests Fail and why that’s a Good Thing.

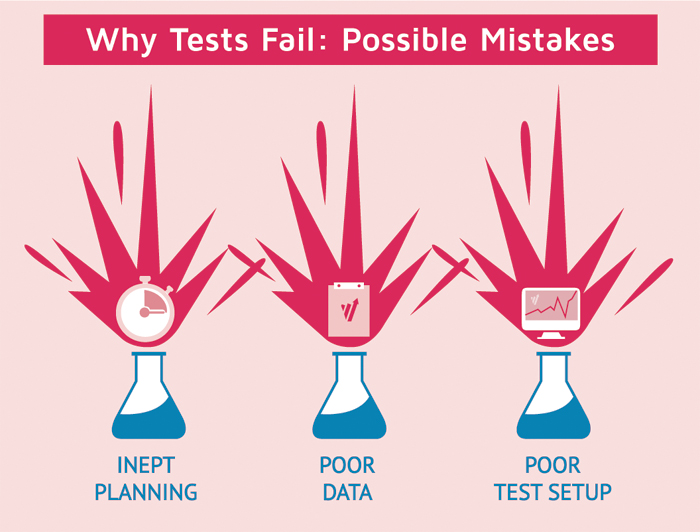

Continuing from where we left off, Nazli highlighted some of the elements of a page that contribute to what the owners are selling and, from there, the mistakes you could make when going about testing.

It’s easy to make mistakes when testing software or planning a testing effort. Some mistakes are made so often, so repeatedly, by so many different people, that they deserve the label Classic Mistake.

Brian Marick

You can read Brian Marick’s article, Classic Testing Mistakes, for a broader understanding of this subject. For the sake of this discussion, however, we’ll take Nazli’s point of view. Listed below are some of the possible mistakes when conducting a test.

- Goal Misinterpretation

- Inept Planning

- Poor Data

- Poor Test Setup

Goal Misinterpretation

It may sound simple, but this really calls for a deep conversation about what your stakeholders are trying to achieve with a particular test. Is it a concept they are trying to prove? Are they planning to take some step(s) after the results? If so, what kind of steps will they take? What is their timeline? Asking these sorts of questions is how you dig a little deeper and understand the details.

There are many reasons for doing this. For starters, if you don’t understand your stakeholders’ goals and beliefs in detail, you won’t be able to create a hypothesis that gives them the insight they need. They may want to see one thing, and by the time you’re done with the test you could have a completely different result, one they cannot act on.

This is not the kind of situation you want to end up in. At the end of the day, you really want to contribute to stakeholders making good business decisions. If you’re not able to provide that for them, then what you just did ends up as a fail.

Inept Planning

Stakeholders need to see the timeline of a particular project so they can determine when they will be able to act on the insight from the test. You need to understand, from beginning to end, how long you’ll be testing and what it costs to develop the test.

Say, for instance, that today your stakeholders want to make a particular change on the product page, so you run some tests and give them some insight. However, after three months, that product page may be retired or merged into a completely different path. What you really don’t want is to invest your time and resources in content that will no longer be relevant in three months’ time. You really need to understand the timeline and plan accordingly.

Poor Data

When it comes to forming a hypothesis, you need to ensure that you’re not relying on just a gut feeling or an executive mandate. Rather, it should be a true combination of things, such as a clickstream analysis from the same or similar resources. Look at previous tests, what came out of them, and what you learned from them. Look at competing applications to see if you can learn anything from them.

Additionally, look at your best practices. A combination of all of these will help you build your hypothesis and your goals. What stakeholders often do is hand you their own hypothesis. If you build one based only on what they’ve given you, it’s highly unlikely you’ll achieve what you’re looking for, and this will also be a failed test at the end of it all.

Poor Test Setup

The thing is, what’s on your default should be reflected in your test setup and your recipe as well. If for some reason there are changes to your default that weren’t reflected in your test recipe, you’ll end up testing against something that’s not the actual default. Consequently, you won’t see any healthy numbers at the end of that test, and this too will be a fail.

It could happen to you, true, but the best thing is to learn from the experience and use it to your benefit in future, at the very least by taking extra care not to repeat that kind of situation.
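One way to catch this kind of drift before launch is a simple comparison between the live default and the control recipe. The sketch below is only an illustration: it assumes both can be exported as flat key/value settings, and the function name `find_drift` and the example keys are hypothetical, not part of any particular testing tool.

```python
MISSING = "<missing>"  # placeholder for a setting that exists on one side only


def find_drift(live_default, control_recipe):
    """Return {setting: (live_value, control_value)} for every mismatch.

    Both arguments are plain dicts describing the page, for example
    headline text or button labels. An empty result means the control
    recipe still mirrors the live default.
    """
    drift = {}
    for key in sorted(set(live_default) | set(control_recipe)):
        live_value = live_default.get(key, MISSING)
        control_value = control_recipe.get(key, MISSING)
        if live_value != control_value:
            drift[key] = (live_value, control_value)
    return drift


if __name__ == "__main__":
    # Hypothetical settings: the live page changed after the test was built.
    live_default = {
        "headline": "Free shipping on all orders",
        "cta_text": "Buy now",
        "hero_image": "spring.png",
    }
    control_recipe = {
        "headline": "Free shipping over $50",
        "cta_text": "Buy now",
    }
    for setting, (live, control) in find_drift(live_default, control_recipe).items():
        print(f"{setting}: live={live!r} control={control!r}")
```

Running a check like this right before the test goes live, and again whenever the default page is updated, gives you a chance to refresh the control recipe before the numbers are compromised.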

Other Mistakes

When you don’t have dedicated testing resources at your disposal, time and attention get pulled away from what could have been a really good test. You end up relying on people who are not testing experts, or aren’t in that field at all, to do the testing for you. That is like setting yourself up to fail, because it is highly likely that you won’t get positive test results out of it.

Another thing, and this is something common with several companies, is trying to run too many recipes in a single test. You need a tremendous amount of traffic to accommodate a test of such magnitude (say, 20 recipes in a single test). And even if you have that traffic, you’ll need to do the test in stages: you learn how it works, and once you have a winner, you start testing against that, and so on.

If you want to learn more about this, here’s the link to the webinar in full.
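To put rough numbers on the traffic point, here is a minimal sketch of a sample-size estimate. It is not from the webinar: it assumes a conversion-rate metric, the standard normal-approximation formula for comparing two proportions, and a Bonferroni correction for comparing every challenger against the same control. The function names, the 5% baseline rate, the 10% relative lift and the 10,000 daily visitors are all illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_recipe(baseline_rate, relative_lift, num_challengers,
                        alpha=0.05, power=0.80):
    """Approximate visitors needed in EACH recipe, control included.

    The significance level is split across the challengers (Bonferroni),
    so more recipes means a stricter threshold and more traffic per recipe.
    """
    p_control = baseline_rate
    p_challenger = baseline_rate * (1 + relative_lift)
    adjusted_alpha = alpha / num_challengers
    z_alpha = NormalDist().inv_cdf(1 - adjusted_alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_challenger * (1 - p_challenger)
    return ceil((z_alpha + z_power) ** 2 * variance
                / (p_challenger - p_control) ** 2)


def days_to_finish(baseline_rate, relative_lift, num_challengers, daily_visitors):
    """Days needed if traffic is split evenly across control and challengers."""
    per_recipe = visitors_per_recipe(baseline_rate, relative_lift, num_challengers)
    total_visitors = per_recipe * (num_challengers + 1)
    return ceil(total_visitors / daily_visitors)


if __name__ == "__main__":
    # Compare a simple A/B test with a 20-recipe test (1 control + 19 challengers).
    for challengers in (1, 19):
        days = days_to_finish(0.05, 0.10, challengers, daily_visitors=10_000)
        print(f"{challengers + 1} recipes: about {days} days")
```

Under these assumptions the 20-recipe test needs well over ten times the total traffic of a plain A/B test, which is why running in stages against the current winner is usually the more realistic plan.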

Written By

Lemuel Galpo