AI in A/B Testing: The Marketer’s Guide to Experiments that Design Themselves (And Still Respect Privacy)

Remember when A/B testing meant waiting weeks to find out your brilliant idea tanked? Now we’ve got agents generating variants in minutes and algorithms that predict, personalize, and optimize every step of the user journey.

AI-driven experimentation is giving optimizers unprecedented speed and insight.

Yet with great data power comes great privacy responsibility. Every one of those predictions runs on behavioral and intent signals we’ve been vacuuming up at industrial scale. And while we’re celebrating the conversion lifts, we’re walking straight into a complex web of privacy laws, consent requirements, and ethical boundaries.

So this article explores how we can use AI across our marketing and CRO workflows without sacrificing integrity or compliance.

What AI-Powered A/B Testing Looks Like Now

AI touches basically every part of the testing workflow, from creative generation to traffic allocation and advanced segmentation. A/B testing platforms use automated insights, predictive models, and generative tools to help teams design, launch, and optimize tests at a pace that simply wasn’t possible a few years ago.

This is continuous experimentation, a process where ML continuously learns from user behavior and adapts experiences on the fly.

Automated Variant Creation

Leading tools like Optimizely’s Opal, VWO’s Editor Copilot and Convert’s Editor AI Wizard let marketers highlight any page element and generate multiple alternatives instantly, from subtle text tweaks to full design reworks, all from simple prompts.

This means more tests, faster learning, and higher experimentation velocity. But as AI accelerates creativity, it also introduces new responsibilities: training data compliance (ensuring the AI wasn’t trained on biased or copyrighted content), bias injection (where the AI reinforces existing patterns rather than discovering new ones), and content quality control.

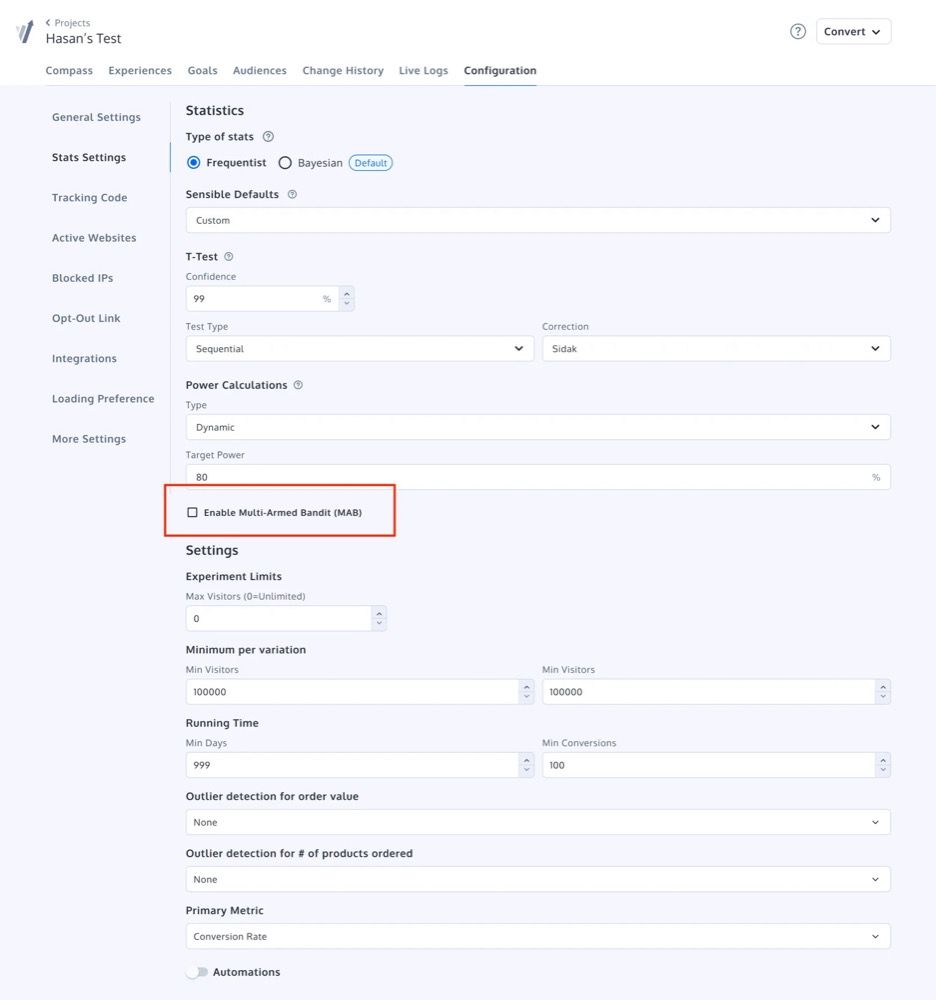

Smart Traffic Allocation

Instead of fixed 50/50 splits, advanced algorithms like Multi-Armed Bandits (MAB) dynamically shift traffic in real time toward higher-performing variations. For instance, if Variant B starts outperforming Variant A, the system automatically redirects more users to the better option.

Great for ROI, but when these models use behavioral inputs (like browsing history or engagement signals), they may fall under GDPR profiling rules, making transparency and consent critical.
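To make the traffic-shifting idea concrete, here is a minimal sketch of one common bandit strategy, Thompson sampling. It is an illustration, not how any particular platform implements its allocation: the function names, the simulated conversion rates, and the seed are all assumptions. Note that this version uses only aggregate conversion counts per variant, with no behavioral inputs about individual users.

```python
import random


def thompson_pick(stats, rng):
    """Sample each variant's Beta posterior and serve the highest draw.

    stats maps variant name -> [conversions, non_conversions].
    """
    draws = {
        name: rng.betavariate(conversions + 1, misses + 1)  # Beta(1, 1) prior
        for name, (conversions, misses) in stats.items()
    }
    return max(draws, key=draws.get)


def simulate(true_rates, visitors=5000, seed=7):
    """Return how many visitors each variant was served in a simulated test."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    served = {name: 0 for name in true_rates}
    for _ in range(visitors):
        variant = thompson_pick(stats, rng)
        served[variant] += 1
        converted = rng.random() < true_rates[variant]
        stats[variant][0 if converted else 1] += 1
    return served


# Hypothetical true conversion rates, unknown to the algorithm.
served = simulate({"A": 0.04, "B": 0.12})
print(served)
```

Early on, both variants receive traffic. As evidence accumulates, the stronger variant wins most of the draws and therefore most of the visitors.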

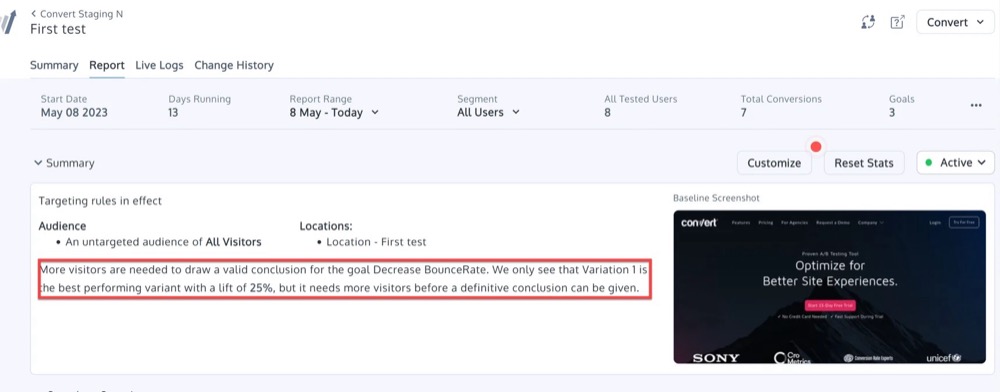

Predictive Analytics and Forecasting

Using machine learning models, platforms can now forecast test outcomes before they reach full statistical completion. Days into a test, you can already see that Variant X is trending toward a +10% conversion lift.

But these predictions rely on historical behavioral data, which often includes personal identifiers and profiling signals, raising compliance questions about how long that data is stored and who can access it.

Soon, these systems will evolve into full behavioral simulations, predicting how different user groups respond before a test even goes live.
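One simple way to get an early read on a running test is to estimate the probability that one variant beats the other from the counts collected so far. The sketch below does this with a Bayesian Monte Carlo comparison. It is a stand-in for illustration, not the forecasting model of any platform named here, and the counts, function name, and seed are hypothetical. It needs only aggregate totals, not user-level history.

```python
import random


def probability_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20000, seed=11):
    """Estimate P(rate_B > rate_A) by sampling each variant's Beta posterior."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(conv_a + 1, n_a - conv_a + 1)
        rate_b = rng.betavariate(conv_b + 1, n_b - conv_b + 1)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical interim counts a few days into a test.
p = probability_b_beats_a(conv_a=100, n_a=2000, conv_b=150, n_b=2000)
print(f"P(B beats A) is roughly {p:.3f}")
```

An interim probability like this is a trend signal, not a final verdict; stopping a test the moment it looks good still inflates false positives.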

Personalization at Scale

AI enables real-time personalization where content adapts dynamically for each visitor—or each micro-segment—based on behavior, device, or intent. Platforms can now combine Multivariate Testing (MVT) and AI personalization to analyze countless combinations at once, catching subtle interaction effects that human analysts might miss.

Yet, this power increases exposure to bias and data misuse. When personalization decisions rely on profiling, they can trigger GDPR Article 22 (“significant effect” from automated decision-making).

Ensuring fairness and transparency in how algorithms personalize is now a technical validation step that is as important as statistical significance. That means marketers need to balance the desire for relevance with the obligation for fairness, transparency, consent, and data minimization—collecting only what’s truly needed for the experience to work.

Advanced Segmentation and Targeting

With AI, segmentation has evolved from static audience filters to intelligent, behavior-based clustering:

Tools like Convert, VWO, Optimizely, and Dynamic Yield use unsupervised learning to detect hidden micro-segments (like “hesitant buyers” or “high-value repeat visitors”) and dynamically tailor variations in real time.

These systems use behavioral and contextual signals instead of broad demographics, making testing more relevant and precise. But detailed behavioral tracking demands privacy-by-design mechanisms like first-party data isolation, pseudonymization, and consent-aware activation to ensure compliance with GDPR and CCPA.
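As a rough illustration of pseudonymization, the sketch below replaces a raw visitor identifier with a keyed hash before it reaches the segmentation or reporting layer. The function name and key handling are assumptions for the example; in practice the key would live in a secrets manager, and pseudonymized data still counts as personal data under GDPR.

```python
import hashlib
import hmac


def pseudonymize(visitor_id: str, secret_key: bytes) -> str:
    """Return a stable token for visitor_id that cannot be reversed without the key."""
    return hmac.new(secret_key, visitor_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key and identifier, for illustration only.
key = b"rotate-me-regularly"
token = pseudonymize("visitor-12345", key)
print(token)
```

The same visitor always maps to the same token, so clustering and bucketing still work, while rotating or deleting the key breaks the link back to the original identifier.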

Comparing AI Features Across A/B Testing Tools

Not all experimentation platforms are evolving at the same pace. Some are re-engineering their systems to embed machine learning, generative AI, and privacy-by-design controls directly into the testing workflow. Others are just beginning to integrate intelligent automation.

To help you see the landscape more clearly, here’s a comparison matrix outlining how the major players stack up across AI functionality and data-protection maturity:

| Tool | AI-Driven Features | Smart Traffic Allocation | Automated Variant Creation (Generative AI) | Advanced Segmentation & Targeting | Privacy Controls & Compliance |
|---|---|---|---|---|---|
| Convert Experiences | AI-enhanced modelling, real-time decision data | Advanced | Advanced | Advanced | First-party data, privacy-by-design |
| Optimizely | ML analytics, anomaly detection, Stats Engine | Advanced | Advanced | Advanced | GDPR-ready, data pseudonymization |
| VWO | AI analytics engine, behavioural insights, heatmaps | Advanced | Advanced | Advanced | Consent management, first-party data |
| Adobe Target | Adobe Sensei: predictive targeting, automated decisioning | Advanced | Basic | Advanced | Enterprise-grade data governance |
| AB Tasty | AI-driven audience grouping, test suggestions | Advanced | Basic | Advanced | Privacy-compliant tracking layer |
| Omniconvert | AI segmentation, real-time behaviour targeting | Basic | None | Basic | First-party tracking by default |
| Fibr.AI | AI agent creates hypotheses, learns from outcomes | Advanced | Advanced | Integrated | Privacy-centric data architecture |
| Unbounce | Smart Traffic AI routes visitors to best page | Advanced | Advanced | Basic | Uses anonymized behavioral data |
| Kameleoon | AI-powered personalization, multi-armed bandit | Advanced | None | Advanced | Compliant with GDPR/CCPA |
| Dynamic Yield | Predictive targeting, self-optimizing campaigns | Advanced | Basic | Advanced | Enterprise privacy controls |

This new generation of experimentation platforms is shifting from data-heavy optimization to ethically intelligent experimentation, proving that AI power and privacy integrity can coexist. Through consent-aware tracking, anonymized data modeling, and AI-driven decisioning, these tools enable teams to scale testing and stay compliant across global markets.

Understanding the Regulatory Reality of AI-Driven Experimentation

As AI becomes embedded in experimentation, privacy laws and AI regulations are catching up fast. Here’s how the major global regulations are shaping how we test, personalize, and optimize experiences in 2025 and beyond:

| Regulation / Act | Applicable Region | Core Requirement Affecting A/B Testing AI | Impact on Experimentation |
|---|---|---|---|
| GDPR | EU / EEA | Right not to be subject to solely automated decisions (e.g., dynamic pricing, extreme personalization). | Mandates human review or explicit consent for high-impact profiling/personalization. |
| EU AI Act | EU | Classifies AI profiling as potentially high-risk (Annex III) and requires informed consent for “real-world testing”. | Increases documentation burden and demands specific consent mechanisms for live tests. |
| CCPA / CPRA | California (U.S.) | Right to opt out of data sale, sharing, and profiling. | Requires mechanisms to disable AI personalization features for users who opt out. |

These regulations reinforce one message:

“AI innovation must go hand-in-hand with transparency and consent.”

The more deeply AI integrates into experimentation workflows, the more important it becomes to understand the data foundations behind it, and the privacy risks that accompany its power.

The Privacy Challenge CRO Teams Face

Every AI-powered breakthrough runs on data. Clicks, scrolls, dwell time, and purchase paths all feed the algorithms deciding what users see next.

Here are the biggest risks that can quietly compromise both performance and compliance.

Profiling and Automated Decision-Making

AI thrives on behavioral data. It builds dynamic profiles from user actions and adjusts experiences automatically.

Under GDPR Article 22, any system that “significantly affects” a user’s experience based purely on automation can be considered automated decision-making. That means personalization and optimization features might legally require explicit consent or even human review.

For marketers: Transparency becomes your best policy. Make it clear how automation shapes what users see and always offer a way to opt out.

Algorithmic Opacity

AI models often optimize silently, deciding which variant “wins” or where traffic goes without explaining why. This sits uneasily with GDPR’s transparency obligations (often summarized as a “right to explanation”) and creates accountability challenges for teams that must justify optimization decisions to clients or stakeholders.

For CRO specialists: Don’t take AI results at face value. Demand clarity from your platforms. Ask vendors to provide Explainable AI (XAI) dashboards or model transparency reports that show how their algorithms weigh data and make calls.

Consent Fatigue and Data Minimization Failures

Countless cookie banners and privacy pop-ups, which users click through just to get to the content, lead to consent fatigue, lower data quality, and higher compliance risk.

For marketing teams: Simplify the experience. Collect only the data you truly need, and make consent prompts clear and purposeful.

Data Leakage Through Integrations

Testing tools connect to analytics, CRMs, and CDPs. Without strict data governance, these links can leak identifiers or location data outside approved zones, violating GDPR or CCPA transfer rules.

For agencies and enterprise teams: Conduct regular privacy audits and dataflow mapping. Know exactly where your testing data travels, from browser to backend to reporting, and ensure it never leaves compliant infrastructure.

Bias in Training Data

If your testing platform’s AI was trained on biased or unrepresentative data, it may favor certain audiences, devices, or demographics unintentionally.

For example, a personalization model might over-prioritize mobile interactions or favor segments that historically convert more, reinforcing bias instead of revealing new growth opportunities.

For CRO leaders: Test for bias. Audit datasets regularly. If results look too good to be true for one audience and too weak for another, dig deeper.
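A basic version of that audit is to compare lift segment by segment and flag any segment that diverges sharply from the rest. The sketch below is one possible check under simplifying assumptions: the segment names, counts, and the 20-point gap threshold are hypothetical, and a real audit would also account for sample size and statistical noise.

```python
from statistics import median


def lift_by_segment(results):
    """results maps segment -> {"control": (conversions, visitors), "variation": (...)}.

    Returns the relative lift of variation over control for each segment.
    Segments with no control conversions are skipped.
    """
    lifts = {}
    for segment, arms in results.items():
        control = arms["control"][0] / arms["control"][1]
        variation = arms["variation"][0] / arms["variation"][1]
        if control > 0:
            lifts[segment] = (variation - control) / control
    return lifts


def flag_uneven_segments(lifts, max_gap=0.20):
    """Return segments whose lift is more than max_gap away from the median lift."""
    typical = median(lifts.values())
    return [seg for seg, lift in lifts.items() if abs(lift - typical) > max_gap]


# Hypothetical test results per device segment.
results = {
    "mobile": {"control": (200, 4000), "variation": (280, 4000)},
    "desktop": {"control": (150, 3000), "variation": (144, 3000)},
    "tablet": {"control": (25, 500), "variation": (34, 500)},
}
flagged = flag_uneven_segments(lift_by_segment(results))
print(flagged)
```

A flagged segment is a prompt to dig deeper, not proof of bias; the gap may come from the model, the creative, or simply a small sample.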

Over-Personalization

Even perfectly compliant personalization can cross an emotional line. When users feel like your site “knows too much,” trust erodes fast. Over-targeting might deliver short-term lift, but it damages long-term loyalty, especially in categories where trust drives conversions (finance, healthcare, education, etc.).

For marketers: Personalize with empathy, not intrusion. Focus on contextual signals (like device, location, or intent) rather than deep behavioral or inferred data. The best personalization feels helpful, not invasive.

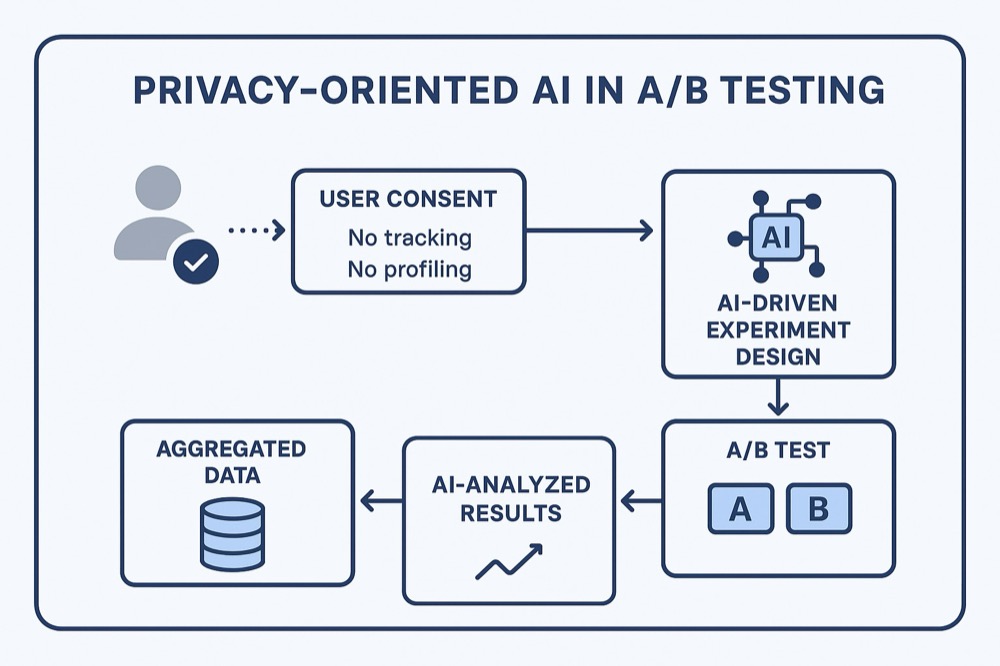

How AI Can Actually Enhance Privacy in A/B Testing

The old assumption that “AI and privacy can’t coexist” is outdated. When implemented right, privacy-first AI helps you test faster, build user trust, and scale responsibly.

Here’s how leading marketers and experimentation teams are doing it.

Differential Privacy (DP)

What it is:

A statistical method that adds tiny amounts of “noise” to your data so individuals can’t be identified, even if the dataset is leaked or analyzed.

Why marketers should care:

You still get accurate results and actionable insights, but without storing or exposing personal identifiers. That means you can safely analyze trends like “average conversion rate increase” without tracking individuals.

Practical win:

You can share experiment outcomes with clients confidently, knowing no user data is traceable back to a single session.
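For readers who want to see the mechanics, here is a minimal sketch of the Laplace mechanism, one standard way to add differentially private noise to a reported metric. It assumes the visitor total is already public and that each user contributes at most one conversion; the function names, counts, and epsilon value are illustrative assumptions rather than recommended settings.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_conversion_rate(conversions, visitors, epsilon, rng):
    """Release a conversion rate with epsilon-DP noise added to the conversion count.

    Assumes the conversion count has sensitivity 1: adding or removing one
    user's conversion changes it by at most 1.
    """
    noisy = conversions + laplace_noise(1.0 / epsilon, rng)
    noisy = min(max(noisy, 0.0), float(visitors))  # clamp to a valid range
    return noisy / visitors


rng = random.Random(42)
reported = private_conversion_rate(conversions=520, visitors=10000, epsilon=1.0, rng=rng)
print(f"true rate 0.0520, reported rate {reported:.4f}")
```

A smaller epsilon means more noise and stronger privacy. On large samples the reported rate barely moves, which is why the technique suits aggregate reporting better than small-segment analysis.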

Federated Learning (FL)

What it is:

Federated Learning trains AI models locally (on users’ browsers or devices) and only sends back aggregated updates (not the raw data itself).

Why it matters for CROs:

Your algorithms keep learning from user behavior without ever exporting sensitive data off the device. It’s the best of both worlds: personalization without data exposure.

Practical win:

You can run personalization tests across geographies and platforms while keeping raw behavioral data on the device, which makes GDPR and EU AI Act obligations easier to meet.
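The core loop of federated learning can be sketched in a few lines using federated averaging. This is a toy illustration under stated assumptions: the tiny logistic model, the per-device examples, and the function names are all hypothetical, and production systems add secure aggregation and other safeguards on top.

```python
import math


def local_update(weights, examples, lr=0.1, epochs=5):
    """Runs on the device: train on local examples and return only the new weights."""
    w = list(weights)
    for _ in range(epochs):
        for features, label in examples:
            z = sum(wi * xi for wi, xi in zip(w, features))
            pred = 1.0 / (1.0 + math.exp(-z))
            for i, xi in enumerate(features):
                w[i] -= lr * (pred - label) * xi
    return w, len(examples)


def federated_average(updates):
    """Runs on the server: average client weights, weighted by example count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Hypothetical on-device data: features = [bias, saw_short_cta], label = converted.
clients = [
    [([1.0, 1.0], 1), ([1.0, 0.0], 0), ([1.0, 1.0], 1)],
    [([1.0, 0.0], 0), ([1.0, 1.0], 1)],
    [([1.0, 0.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1), ([1.0, 1.0], 0)],
]

global_weights = [0.0, 0.0]
for _ in range(20):  # 20 communication rounds
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates)
print(global_weights)
```

The server only ever sees weight vectors and example counts. The raw sessions in `clients` never leave the simulated devices.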

Synthetic Data Modeling

What it is:

Synthetic data uses AI to generate artificial datasets that mimic real user patterns but don’t contain any real personal data.

Why marketers should care:

You can train AI models, run simulations, and test experimental hypotheses without ever touching live user data. It’s perfect for building or validating personalization logic before deploying it in production.

Practical win:

You can develop, test, and fine-tune client experiments offline, keeping live data protected during real deployments.
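A very simple form of synthetic data is to sample artificial sessions from aggregate statistics instead of copying real records. The sketch below assumes you already hold traffic shares and conversion rates per segment; those numbers, the field names, and the function name are hypothetical, and real synthetic-data tools model far richer patterns than this.

```python
import random


def synthesize_sessions(segment_stats, n, seed=3):
    """Generate n artificial sessions from aggregate statistics.

    segment_stats maps (device, variant) -> (share_of_traffic, conversion_rate).
    """
    rng = random.Random(seed)
    keys = list(segment_stats)
    weights = [segment_stats[key][0] for key in keys]
    sessions = []
    for _ in range(n):
        device, variant = rng.choices(keys, weights=weights)[0]
        converted = rng.random() < segment_stats[(device, variant)][1]
        sessions.append({"device": device, "variant": variant, "converted": converted})
    return sessions


# Hypothetical aggregates; no real visitor records are involved.
aggregates = {
    ("mobile", "control"): (0.30, 0.04),
    ("mobile", "variation"): (0.30, 0.05),
    ("desktop", "control"): (0.20, 0.06),
    ("desktop", "variation"): (0.20, 0.07),
}
sessions = synthesize_sessions(aggregates, n=1000)
print(sessions[:3])
```

The generated rows carry no identifiers, so they can be used to dry-run analysis code and personalization logic before anything touches live traffic.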

Privacy Sandbox & Shared Storage APIs

What it is:

A set of browser APIs that enable measurement and testing without third-party cookies.

Why it matters:

It allows A/B testers to assign experiment groups, measure conversions, and optimize cross-site experiences without storing user identifiers.

Practical win:

You can keep running meaningful experiments in a world without cookies, future-proofing your analytics and staying aligned with browser-level privacy standards.

Contextual AI Decisioning

What it is:

Instead of building user profiles, contextual AI bases decisions on non-personalized factors — like page type, device, or time of day.

Why marketers love it:

It allows for intelligent adaptation without the privacy baggage of behavioral tracking. Think of it as delivering the right message for the moment, not the person.

Practical win:

You can deliver relevance-driven experiences (like showing a shorter CTA on mobile during checkout hours) while avoiding profiling concerns altogether.
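In code, contextual decisioning can be as plain as a function of the current request, with no user profile passed in. The rules, variant names, and time window in this sketch are hypothetical examples, not a recommended rule set.

```python
from datetime import time


def choose_cta(device: str, page_type: str, local_time: time) -> str:
    """Pick a CTA variant from request context only; no visitor ID or history is used."""
    evening = time(18, 0) <= local_time <= time(23, 0)
    if page_type == "checkout" and device == "mobile":
        return "short_cta"
    if page_type == "pricing" and evening:
        return "compare_plans_cta"
    return "default_cta"


print(choose_cta("mobile", "checkout", time(12, 30)))
```

Because the function's inputs describe the moment and not the person, two different visitors in the same context always get the same experience.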

| Privacy Technique | Mechanism | Benefit to Experimentation Strategy | Compliance Alignment |
|---|---|---|---|
| Differential Privacy (DP) | Adds quantifiable noise to aggregated data sets. | Enables privacy-safe reporting of conversion rates and logs. | GDPR Data Minimization, CCPA Profiling Opt-Out. |
| Federated Learning (FL) | Models train locally on devices; only aggregated updates are shared. | Models learn personalized patterns without extracting raw user data. | EU AI Act/GDPR requirement for decentralized processing. |
| Synthetic Data Modeling | AI generates statistically similar, non-PII training data. | Allows for rigorous testing and simulation of high-risk models before live deployment. | Ethical testing, avoidance of GDPR penalties. |
| Privacy Sandbox APIs (Shared Storage) | Provides limited cross-site storage for A/B group assignment. | Facilitates cross-site testing and measurement in a cookie-less world. | Alignment with upcoming industry standards for addressability. |
| Contextual AI Decisioning | Uses environment-based context instead of profiles. | Real-time relevance without tracking users. | Eliminates profiling risk entirely. |

AI doesn’t have to be the villain in the privacy story.

When paired with responsible design, AI becomes a tool for ethical optimization, helping businesses understand users in aggregate, personalize responsibly, and build trust through transparency.

Privacy-First Practices for Modern A/B Testers

Here’s how leading marketing and experimentation teams are operationalizing privacy-first design without slowing down their testing momentum.

Delay Tracking Until Consent

Before any A/B test begins, ensure that users’ data collection preferences are honored. Integrate your testing tools with consent management platforms so that experiments only run for users who have opted in.

Modern platforms like Convert and VWO enable dynamic activation based on consent status, ensuring that test data remains both clean and compliant.

Here’s more on how Convert is handling consent.
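Conceptually, consent-gated activation is a check that runs before any bucketing happens. The sketch below is a generic illustration and not the API of Convert, VWO, or any consent management platform: the `consent` dictionary, its `"analytics"` key, and the function names are assumptions.

```python
import hashlib


def assign_variant(visitor_id, experiment_id, variants=("control", "variation")):
    """Deterministically bucket a visitor so repeat visits see the same variant."""
    digest = hashlib.sha256(f"{experiment_id}:{visitor_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def activate_experiment(visitor_id, experiment_id, consent):
    """Return a variant only for visitors who opted in; otherwise return None.

    None means: serve the default experience and record nothing.
    """
    if not consent.get("analytics", False):
        return None
    return assign_variant(visitor_id, experiment_id)


print(activate_experiment("visitor-1", "homepage-hero", {"analytics": True}))
print(activate_experiment("visitor-2", "homepage-hero", {"analytics": False}))
```

Visitors without consent never enter the experiment, so they also never appear in its results. Plan sample sizes around your opt-in rate.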

Prioritize First-Party Data

Shift focus to first-party data collected directly from your website, apps, and owned channels.

Modern platforms offer server-side tracking and cookieless identifiers that provide accurate performance data without relying on third-party scripts. Convert has disabled third-party cookies since February 2018, giving users cleaner insights and faster loading times ever since.

Map and Audit Data Flows

Know where every piece of data goes during experimentation — what’s collected, where it’s stored, who can access it, and when it’s deleted.

Integrate these audits into your team’s QA or CI/CD workflow, so every test is validated for both statistical soundness and privacy compliance before launch. This future-proofs your brand against the growing scrutiny around AI decision-making.

Limit Data Retention and Scope

Testing platforms often store logs, sessions, and user events longer than needed. To minimize exposure, set clear data retention policies (e.g., 30–90 days) and automatically delete or anonymize test-level data after completion. Less stored data = less risk.
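A retention policy like this can be enforced with a small scheduled job. The sketch below assumes events are dictionaries with a `timestamp` field and that `visitor_id` and `session_id` are the identifying fields; both assumptions, along with the 90-day window, are hypothetical. Stripping identifiers reduces exposure, but whether the remainder counts as anonymous depends on what other fields you keep.

```python
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 90  # hypothetical policy at the upper end of the range above
IDENTIFYING_FIELDS = ("visitor_id", "session_id")


def apply_retention(events, now, retention_days=RETENTION_DAYS):
    """Strip identifying fields from events older than the retention window."""
    cutoff = now - timedelta(days=retention_days)
    result = []
    for event in events:
        if event["timestamp"] < cutoff:
            event = {k: v for k, v in event.items() if k not in IDENTIFYING_FIELDS}
        result.append(event)
    return result


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
events = [
    {"timestamp": now - timedelta(days=120), "visitor_id": "a1", "variant": "B", "converted": True},
    {"timestamp": now - timedelta(days=10), "visitor_id": "b2", "variant": "A", "converted": False},
]
cleaned = apply_retention(events, now)
print(cleaned)
```

The old event keeps its variant and outcome, so historical reports still add up, while the recent event is left untouched until it ages out.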

By default, Convert Experiences uses three main cookies: _conv_v (6 months) for visitor data, _conv_s (20 minutes) for session tracking, and _conv_r (per visit) for referral information, plus a short-lived _conv_sptest cookie for redirect experiments.

Communicate Transparency Clearly

Don’t bury your testing and personalization disclosures deep in your privacy policy.

Be upfront about your use of AI-powered experimentation and how users can control their data preferences.

Apply Ethical Guardrails to Experiment Design

Before running a test, ask:

- Would this variation feel manipulative or invasive if users knew about it?

- Are we optimizing for user benefit, or just short-term metrics?

- Could personalization create exclusionary or biased outcomes?

If any answer raises doubt, reframe the hypothesis or limit segmentation. Ethical review frameworks—borrowed from UX research—are now being adapted for A/B testing to prevent reputational harm.

Implement Privacy Reviews in the Experimentation Cycle

Forward-thinking teams are adopting privacy gates similar to QA stages in software development. Before a test goes live, it must pass a privacy compliance review alongside design, analytics, and technical validation.

This creates a culture of responsibility, preventing last-minute risks and reinforcing trust across teams and clients.

Train Teams on Privacy Literacy

Your A/B testing process is only as strong as the people running it. Conduct privacy literacy sessions (at Convert, we run Sprinto trainings annually), ensuring everyone understands how AI models handle data and what practices to avoid. Weave compliance directly into your experimentation culture to make every optimization more defensible, transparent, and aligned with user expectations.

Empowered teams make smarter, safer, and more innovative choices, turning compliance into a strength.

Real-World Examples of Privacy-Conscious AI Testing

Let’s look at how top experimentation platforms are tackling the intersection of AI innovation and privacy protection in real-world scenarios.

Convert.com

Convert has taken a clear ethical stance in the experimentation industry, emphasizing privacy-first testing and zero personal data storage. Unlike many competitors, Convert runs on first-party data processing only — meaning no user information is shared with third parties or used for ad network enrichment.

Its AI-enhanced Editor Wizard helps marketers create alternative designs and copy variants using natural-language prompts. But here’s the crucial difference:

Convert’s AI is trained on synthetic and de-identified data rather than customer datasets.

Privacy features include:

- Consent-aware activation,

- No IP storage,

- Optional cookieless mode, and

- Compliance-friendly server-side testing.

For CRO professionals, this combination offers peace of mind: you can experiment intelligently while fully controlling data flow and ownership.

Optimizely

Optimizely’s AI capabilities include:

- Predictive analytics for anticipating test outcomes,

- Smart traffic allocation using multi-armed bandit algorithms, and

- Automated anomaly detection to spot unusual data patterns.

Optimizely claims it ensures that these features rely on aggregated user behavior, not personal identifiers. Data is pseudonymized and processed through region-specific servers, aligning with GDPR and CCPA guidelines.

For marketers, this means actionable insights without crossing privacy boundaries — a balance between intelligence and integrity.

VWO

VWO’s Editor Copilot and SmartStats Engine bring automation to content creation and traffic allocation while embedding compliance-by-default design.

Key privacy-focused features include:

- Data anonymization and first-party cookie tracking,

- GDPR and CCPA compliance dashboards,

- AI-assisted content generation that avoids storing sensitive session data.

The Copilot allows teams to generate alternate headlines or calls-to-action instantly — all while respecting consent preferences. In essence, VWO proves that marketers can be creative, fast, and compliant at the same time.

Kameleoon

Kameleoon uses AI-powered personalization and real-time segmentation to adapt experiences dynamically.

It also offers:

- Cookieless visitor tracking,

- Data residency compliance, and

- Automatic consent handling integration.

Kameleoon’s “Explainable AI” feature gives teams clarity on why a specific test or experience was chosen, reinforcing trust with both users and internal stakeholders.

The Bottom Line: Trust Is the New Metric

AI has given A/B testers an incredible edge. What once took weeks can now happen in real time. But the data fueling smarter experiments demands careful protection.

Privacy, transparency, and ethical governance are becoming essential ingredients in sustainable optimization. By embedding privacy-first principles directly into AI experimentation (through consent-aware tracking, federated learning, and differential privacy), marketers are becoming AI governors, responsible for setting the ethical, legal, and operational guardrails around intelligent testing systems.

This requires a blend of skills, like analytical intelligence (understanding not just results but algorithmic choices), ethical judgment (recognizing when personalization crosses into manipulation), and privacy literacy (ensuring every test aligns with frameworks like GDPR, CCPA, and the EU AI Act).

Because in the age of AI, the most powerful metric is confidence: confidence in your data, your process, and the respect you show your users.

Written By

Dionysia Kontotasiou

Edited By

Carmen Apostu